Snap Tracks Skeletal Joints (plus more from Amazon & Nvidia)

Personalized 3D animations, satellite management systems & emergency responses for self-driving cars.

1. Snap – personalized animations of 3D avatars

Snap recently published a patent application for animating 3D avatars based on a user's movements captured in a video.

It works by tracking the skeletal joints of a person's body in a 2D video, creating a movement vector from how those joints move, and then applying that movement vector to the corresponding skeletal joints of a 3D avatar. Snap then augments the original video with the animated 3D avatar.
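To make the idea of a "movement vector" concrete, here is a minimal Python sketch. It is not Snap's method. It assumes 2D keypoints from some pose estimator, keyed by hypothetical joint names. It treats the movement vector as the per-joint displacement between two frames and applies it directly to the avatar's joint positions, using a made-up pixel-to-metre `scale` and leaving depth unchanged. A production system would more likely lift the pose to 3D and retarget joint rotations.

```python
# Minimal sketch: per-joint 2D movement vectors applied to a 3D avatar skeleton.
# Joint names, the scale factor and the "keep depth fixed" rule are assumptions for illustration.
Point2D = tuple[float, float]
Point3D = tuple[float, float, float]


def movement_vectors(prev: dict[str, Point2D], curr: dict[str, Point2D]) -> dict[str, Point2D]:
    """Per-joint (dx, dy) displacement in pixels between two consecutive video frames."""
    return {
        name: (curr[name][0] - prev[name][0], curr[name][1] - prev[name][1])
        for name in prev.keys() & curr.keys()  # only joints tracked in both frames
    }


def apply_to_avatar(
    avatar: dict[str, Point3D], vectors: dict[str, Point2D], scale: float = 0.01
) -> dict[str, Point3D]:
    """Return a new avatar pose with each matching joint moved by the scaled 2D vector."""
    moved = dict(avatar)  # leave the input pose untouched
    for name, (dx, dy) in vectors.items():
        if name in moved:
            x, y, z = moved[name]
            # Image y grows downward while avatar y grows upward, so flip dy; depth z is kept as-is.
            moved[name] = (x + scale * dx, y - scale * dy, z)
    return moved


# Hypothetical keypoints for two consecutive frames and a hypothetical avatar rest pose (metres).
frame_1 = {"head": (320.0, 80.0), "right_wrist": (400.0, 260.0)}
frame_2 = {"head": (322.0, 80.0), "right_wrist": (410.0, 240.0)}
avatar = {"head": (0.0, 1.7, 0.0), "right_wrist": (0.4, 1.1, 0.1)}

vectors = movement_vectors(frame_1, frame_2)  # head: (2.0, 0.0), right_wrist: (10.0, -20.0)
new_pose = apply_to_avatar(avatar, vectors)   # the raised wrist moves up and to the right
```

Running this per frame pair and rendering `new_pose` over the video is the simplest version of the "augment the original video" step.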

Why is this interesting?

Firstly, tracking the movement of a person's skeletal joints in a 2D video is extremely impressive. Companies like Snap, TikTok and Instagram have become very good at mapping faces and applying filters over them. This filing suggests Snap is pushing further than its peers by doing 3D body tracking.

Secondly, there are fascinating use cases for 3D animated avatars. So far we've seen some applications in digital fashion, where users can wear digital clothes as filters (try this jacket from RTFKT). We could also start to see some fun synthetic media, where famous movement vectors are applied to a user's skeletal joints. For example, imagine a viral filter that takes your whole body and makes it dance like Michael Jackson. And in a world where people wear smart glasses like Snap's Spectacles, you might start to see other users wearing their digital identity (clothes or avatars) in real time.

Ultimately, Snap's technology is now available for the developer and creative community to run wild with, and the shift from 'face filters' to 'full-body filters' will be fascinating to track.

2. Amazon – systems for managing a constellation of satellites

This patent application is interesting because it shows how some of the big tech companies are applying their expertise to new platform plays, such as space.

Amazon is looking to build a system that manages a constellation of satellites. The system will ingest data such as satellite telemetry, space weather data, and ephemeris data describing where other orbital objects are. Using this data, it will automatically operate the satellites to perform station-keeping manoeuvres (i.e. keeping a satellite in its assigned orbital position), maintenance activities, interference mitigation, and so forth.
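As a rough illustration of how those inputs might turn into actions, the sketch below runs three simple checks per satellite:

- drift from an assigned slot, which triggers station keeping;
- proximity to other tracked objects, based on ephemeris data;
- a space-weather threshold.

Everything here is hypothetical and not taken from Amazon's filing: the `Telemetry` and `Ephemeris` fields, the geostationary-style longitude slot (used only for simplicity), the thresholds, and the use of the planetary K-index (Kp) as the space-weather input. Real conjunction screening propagates full trajectories and estimates collision probability rather than comparing single positions.

```python
import math
from dataclasses import dataclass

# Hypothetical inputs: field names, units and thresholds are illustrative, not from the filing.
Vec3 = tuple[float, float, float]


@dataclass
class Telemetry:
    sat_id: str
    longitude_deg: float           # current longitude of the satellite's orbital slot
    assigned_longitude_deg: float  # longitude it is supposed to hold
    position_km: Vec3              # current position in an Earth-centred frame


@dataclass
class Ephemeris:
    object_id: str
    position_km: Vec3  # predicted position of another orbital object at the same epoch


def plan_actions(sats, objects, kp_index, drift_tol_deg=0.05, miss_km=5.0, storm_kp=7):
    """Turn telemetry, ephemeris and space-weather inputs into (sat_id, action, detail) tuples."""
    actions = []
    for t in sats:
        # Signed drift from the assigned slot, wrapped into [-180, 180) degrees.
        drift = (t.longitude_deg - t.assigned_longitude_deg + 180.0) % 360.0 - 180.0
        if abs(drift) > drift_tol_deg:
            actions.append((t.sat_id, "station_keeping", drift))
        # Naive conjunction check: compare single predicted positions against a miss distance.
        for obj in objects:
            if math.dist(t.position_km, obj.position_km) < miss_km:
                actions.append((t.sat_id, "collision_avoidance", obj.object_id))
        # Space weather: a high Kp value is treated as a reason to enter a safe mode.
        if kp_index >= storm_kp:
            actions.append((t.sat_id, "safe_mode", kp_index))
    return actions


sats = [
    Telemetry("sat-1", 10.2, 10.0, (42164.0, 0.0, 0.0)),
    Telemetry("sat-2", 20.0, 20.0, (0.0, 42164.0, 0.0)),
]
debris = [Ephemeris("debris-9", (42166.0, 1.0, 0.0))]
plan = plan_actions(sats, debris, kp_index=3)
# sat-1 gets station_keeping and collision_avoidance actions; sat-2 gets none.
```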

Currently, satellite operators tend to manage their own satellites manually. This is hard to scale as the number of satellites grows. Managing satellites is time- and labour-intensive, and manual management makes it hard to react quickly to dynamic events.

Amazon's constellation management system (CMS) will automate most of this work. For more complicated manoeuvres, it might ask a human operator for confirmation.
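The human-in-the-loop step could be as simple as a routing rule. This sketch assumes hypothetical manoeuvre types and an arbitrary delta-v threshold rather than anything from the filing. Routine, low-cost manoeuvres run automatically, while everything else waits in a queue until an operator approves it.

```python
from dataclasses import dataclass, field

# Hypothetical policy: which manoeuvre types may run unattended, and a delta-v cap in m/s.
AUTO_KINDS = frozenset({"station_keeping", "attitude_trim"})
MAX_AUTO_DELTA_V_M_S = 0.5


@dataclass
class Manoeuvre:
    sat_id: str
    kind: str
    delta_v_m_s: float  # estimated velocity change the manoeuvre needs


@dataclass
class Dispatcher:
    executed: list = field(default_factory=list)
    pending_approval: list = field(default_factory=list)

    def submit(self, m: Manoeuvre) -> str:
        """Run routine, low-cost manoeuvres automatically; queue the rest for a human."""
        if m.kind in AUTO_KINDS and m.delta_v_m_s <= MAX_AUTO_DELTA_V_M_S:
            self.executed.append(m)
            return "executed"
        self.pending_approval.append(m)
        return "awaiting_operator"

    def approve(self, m: Manoeuvre) -> None:
        """Called once an operator confirms a queued manoeuvre."""
        self.pending_approval.remove(m)
        self.executed.append(m)


dispatcher = Dispatcher()
dispatcher.submit(Manoeuvre("sat-1", "station_keeping", 0.1))      # "executed"
dispatcher.submit(Manoeuvre("sat-2", "collision_avoidance", 2.0))  # "awaiting_operator"
```

Keeping the policy in two constants makes it easy to widen automation gradually as operators gain confidence in the system.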

So, why is this interesting?

The automated management of these satellites could leverage Amazon's expertise in AI/ML. Just as AWS provides AI services that developers deploy in their applications, Amazon could offer similar services to satellite operators. Helping to manage satellites is an interesting way for Amazon to insert itself into the space industry without necessarily building and deploying its own satellites (even though it is also doing that, through Project Kuiper, to provide broadband around the world).

As new technology platforms emerge, positioning itself as an underlying infrastructure provider is a good way for Amazon to stay relevant in any upcoming platform wars.

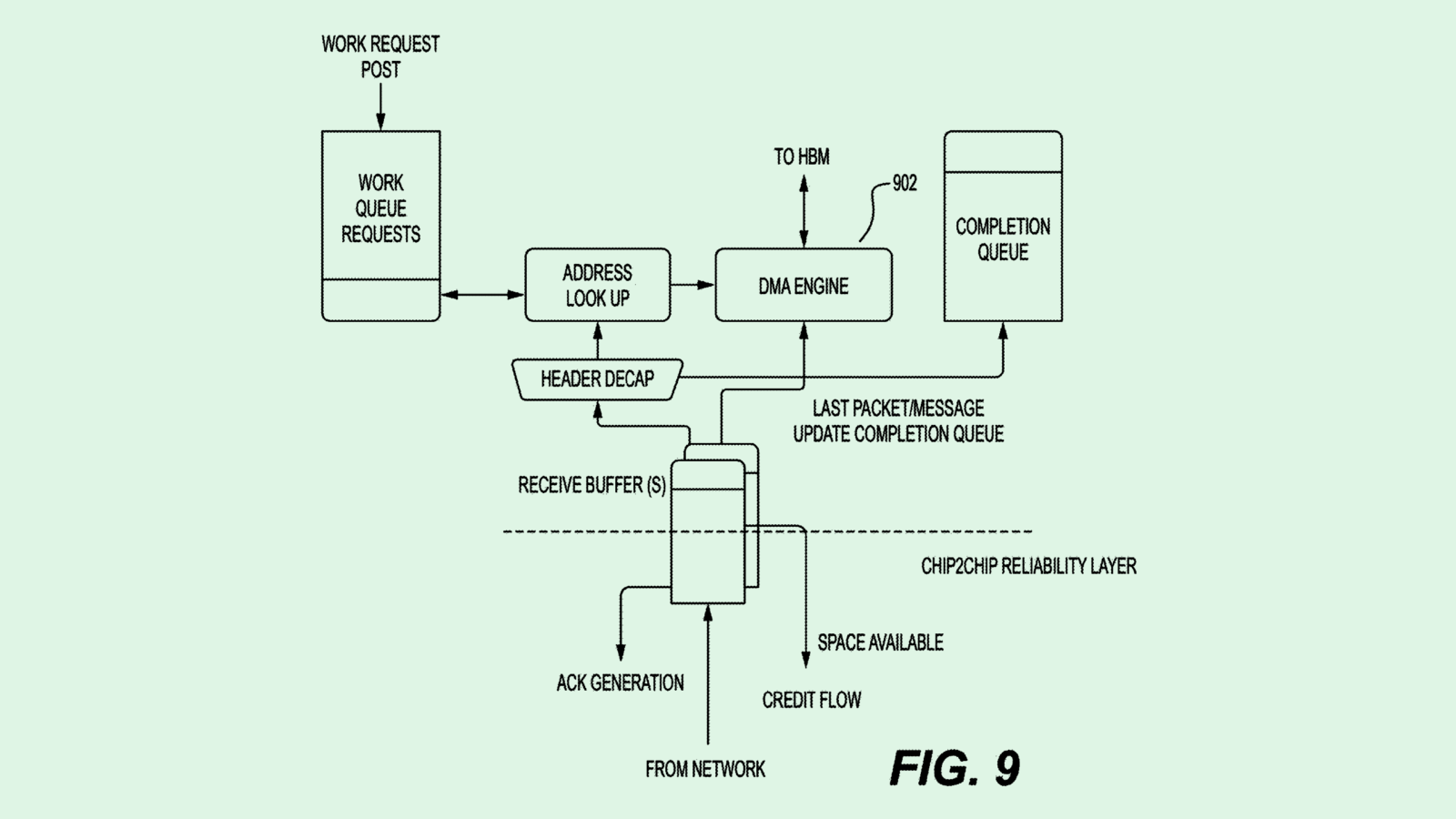

3. Nvidia – detecting emergency response vehicles for self-driving cars

Most self-driving vehicles use LiDAR and cameras to perceive the environment around the car and assist with driving. However, one area where self-driving systems fall short is dealing with emergency vehicles.

If an autonomous system relies only on LiDAR, it might be slow to react to an emergency vehicle, especially if other vehicles or obstacles block its line of sight.

Nvidia instead wants to use microphones on the vehicle to detect and classify the sirens of emergency vehicles. Its system will then try to determine where the sound is coming from and which direction it is travelling in. With this information, the vehicle can plan a route that lets the emergency vehicle pass, much sooner than if it relied only on LiDAR and cameras.
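One standard way to locate a sound source with microphones is time difference of arrival (TDOA). You cross-correlate two microphone signals to find how much later the sound reaches one than the other, then convert that delay into a bearing. The sketch below applies that technique with NumPy. It is an assumption about how such a system could work, not Nvidia's implementation. Two other parts are simplified stand-ins:

- **Siren check:** a deliberately crude substitute for a trained classifier. The 500 to 1,800 Hz band is a hypothetical choice.
- **`is_approaching`:** uses rising loudness over successive windows as a simple proxy for direction of travel.

```python
import numpy as np

SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at roughly 20 °C


def looks_like_siren(signal: np.ndarray, sample_rate: float, band=(500.0, 1800.0)) -> bool:
    """Crude stand-in for a classifier: is the strongest frequency inside a siren-like band?"""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    peak_hz = freqs[np.argmax(spectrum[1:]) + 1]  # skip the DC (0 Hz) bin
    return bool(band[0] <= peak_hz <= band[1])


def estimate_delay(sig_a: np.ndarray, sig_b: np.ndarray, sample_rate: float) -> float:
    """Seconds by which sig_b lags sig_a, taken from the peak of their cross-correlation."""
    corr = np.correlate(sig_b, sig_a, mode="full")
    lag_samples = int(np.argmax(corr)) - (len(sig_a) - 1)  # index len(sig_a) - 1 is zero lag
    return lag_samples / sample_rate


def bearing_deg(delay_s: float, mic_spacing_m: float) -> float:
    """Far-field angle from broadside; positive means the source is on microphone A's side."""
    ratio = np.clip(SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m, -1.0, 1.0)
    return float(np.degrees(np.arcsin(ratio)))


def is_approaching(window_levels) -> bool:
    """Treat a rising RMS level across successive windows as the source getting closer."""
    if len(window_levels) < 2:
        return False
    slope = np.polyfit(np.arange(len(window_levels)), window_levels, 1)[0]
    return bool(slope > 0)


fs = 48_000
rng = np.random.default_rng(0)
mic_a = rng.standard_normal(4_800)                   # 0.1 s of broadband sound at mic A
mic_b = np.concatenate([np.zeros(20), mic_a[:-20]])  # same sound arriving 20 samples later at mic B
delay = estimate_delay(mic_a, mic_b, fs)             # 20 / 48000 s
angle = bearing_deg(delay, mic_spacing_m=0.5)        # roughly 16.6 degrees toward mic A
```

With more than two microphones, several such pairwise bearings can be combined to estimate a position rather than just a direction.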