Nvidia Fights to Stay One Step Ahead of AI’s Many Evolutions

This year’s Nvidia GTC event comes after the bombshell DeepSeek moment, the acceleration of a global trade war, and a broader market rout.


Amid a not-so-good, pretty terrible year, Nvidia’s GTC conference this week may not have been the party that the company was expecting.

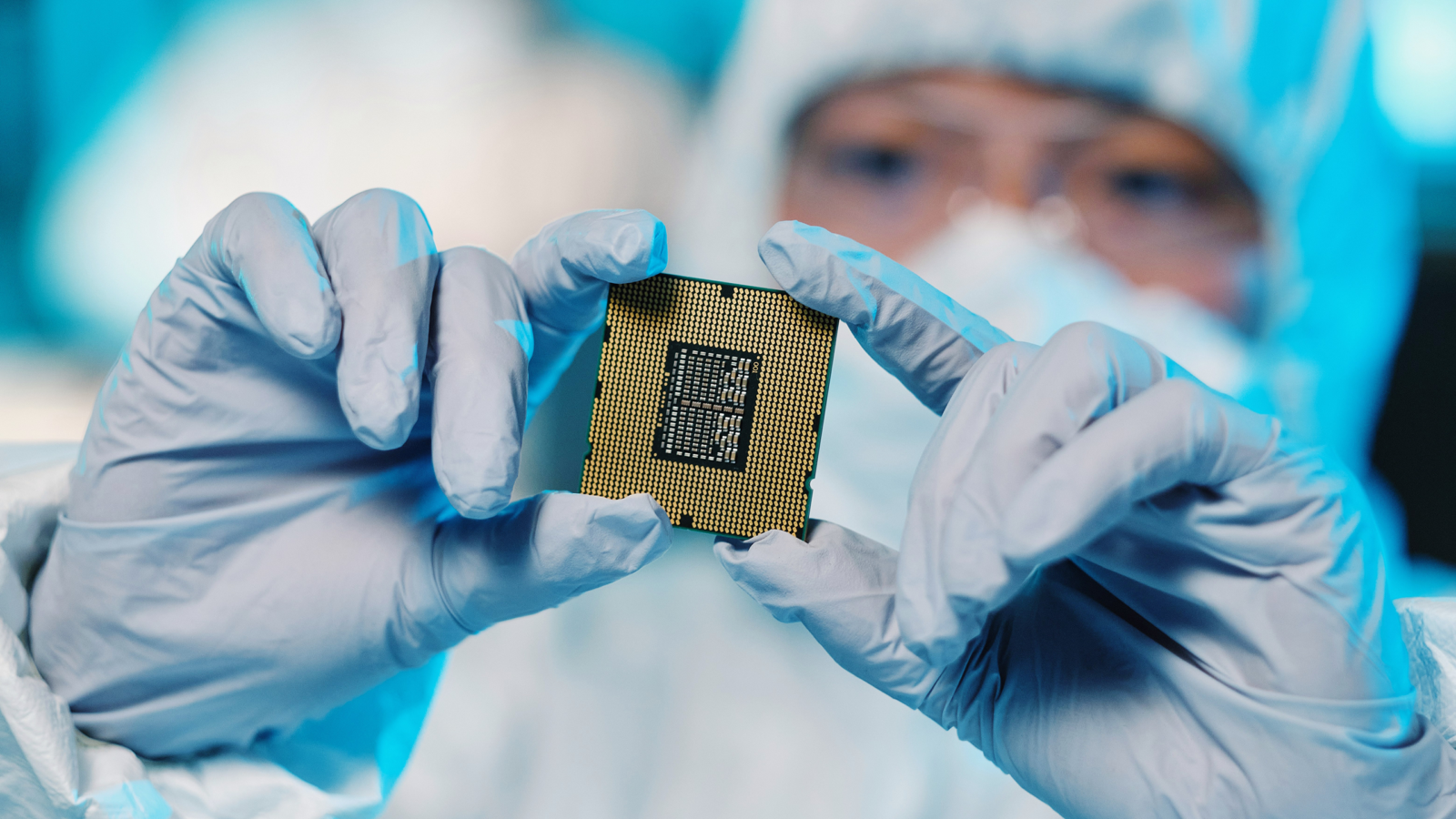

The conference, held annually since 2009, offers a glimpse into the present and future of the intertwined artificial intelligence and semiconductor industries. This year’s event comes just weeks after the bombshell DeepSeek moment, the acceleration of a global trade war, and a broader market rout. So where does the preeminent semiconductor maker go from here?

No Inference Indifference

Nvidia, whose share price is down over 14% year-to-date, spent the week attempting to make one thing clear: The AI revolution is continuing apace, and Nvidia is fortifying its position at the forefront of it. For Nvidia, that means fighting a two-front war. On one front, the company is focused on shoring up its supply chains against potential shocks. On the other, it’s focused on staying one step ahead of the AI industry’s shifting demand: away from the training chipstacks that Nvidia currently dominates and toward chipstacks wired for “inference,” the work of running trained models such as those offered by Chinese upstart sensation DeepSeek.

In the AI race so far, most compute demand has been for training new models. According to Barclays, Nvidia is expected to control nearly 100% of the chip market for the training space moving forward. But now that many models have been trained, the industry is rapidly turning toward “inference,” meaning the running of those trained models to answer queries. While techies can explain the nuances, laymen simply need to know it requires a different chipstack (and much more power) than training. And it’s here that Nvidia will suddenly face both competition and new opportunity:

- According to analysts at Morgan Stanley, some 75% of compute power demand from US data centers will be for inference AI models in the coming years.

- That means a massive shift in investment. According to Barclays analysts, capital expenditures for inference in the largest and most advanced AI models will leapfrog capex for AI training within two years, jumping from a projected $122 billion this year to $200 billion in 2026. Barclays analysts also project that Nvidia will only be able to serve roughly 50% of the growing market for inference chipstacks.

At the conference this week, Nvidia CEO Jensen Huang argued that “almost the entire world got [the DeepSeek moment] wrong.” His reasoning: although the Chinese startup trained its model using a remarkably scant chipstack, running that model will spark more demand for compute power. And rest assured, he said, the latest chip the company debuted this week, named after famed astronomer Vera Rubin, is perfect for the job. Investors didn’t exactly buy the pitch; shares fell 1% earlier this week after Huang’s keynote speech (though they have since clawed back the losses).

Born in the USA: On the supply chain front, Huang told the Financial Times in an interview Wednesday that the company plans to spend hundreds of billions of dollars in the next four years to base its supply chain in the US as much as possible. That’s because the company fears that its relationship with critical supplier Taiwan Semiconductor Manufacturing Company (TSMC) could be imperiled by growing Chinese aggression toward Taiwan, just as China-based Huawei is growing into a true Nvidia competitor. TSMC, for its part, recently announced a $100 billion investment in its growing facilities in Arizona, which builds on an earlier $65 billion commitment backed by CHIPS Act funding from the Biden administration.