Happy Halloween and welcome to Patent Drop!

Today, a patent from Google could help cool down quantum computers while they work with classical ones, overcoming a major barrier in scaling the tech. Plus: OpenAI wants to work on its active listening skills, and Intel wants to make AI robots more collaborative and scalable.

Let’s take a peek.

Google Keeps Its Cool

Quantum computers run hot. Google wants to cool them down.

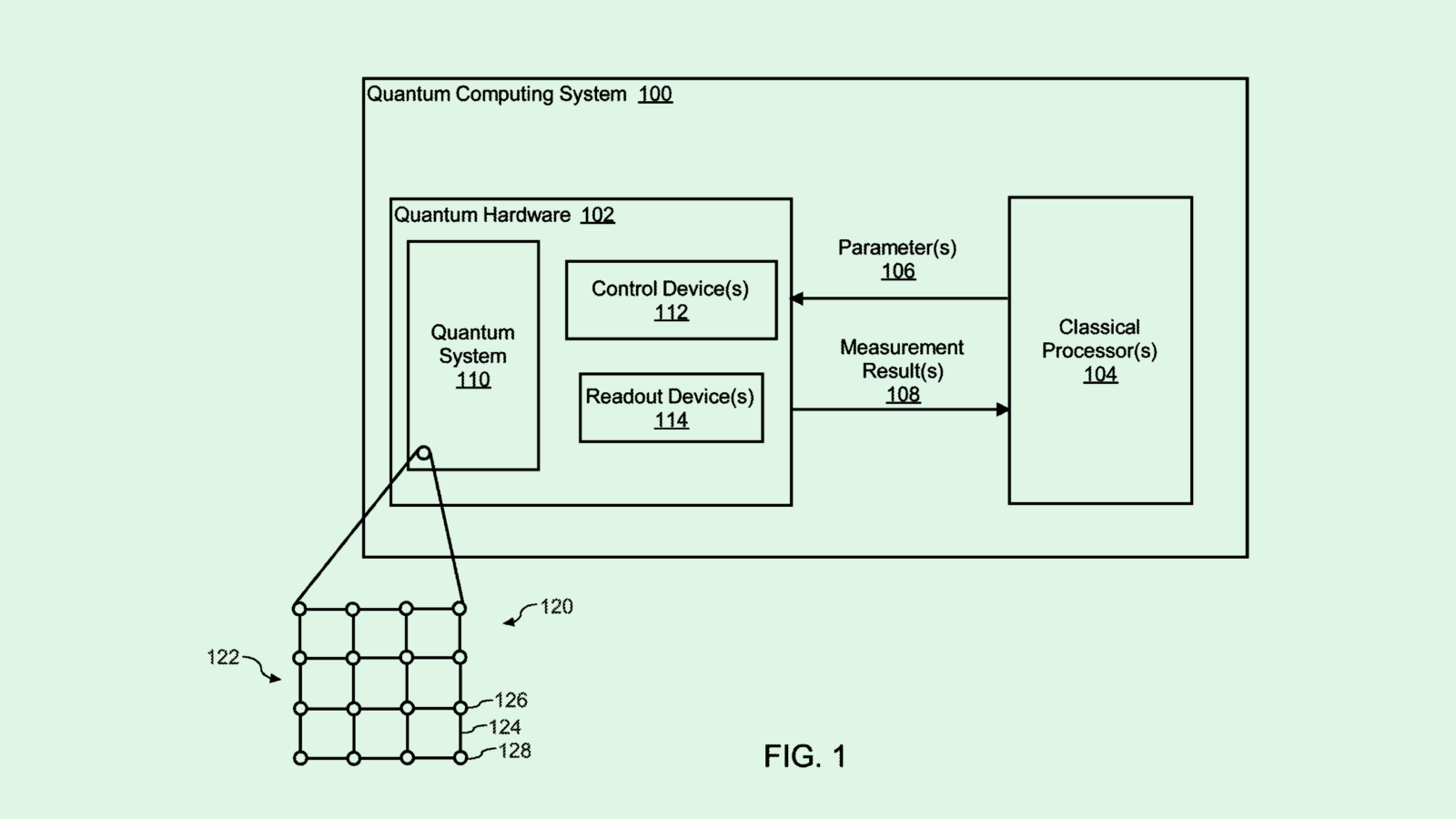

The company is seeking to patent a “cryogenic cooling system” for “multi-unit scaling of quantum computing.” Google’s tech uses multiple cooling stages to bring a quantum computer down to the extremely low temperature it needs to operate.

Quantum computers need to be kept this cold because they rely on “superconductivity,” or conducting an electrical current without energy loss, Google said. “A challenge associated with quantum computing includes cooling quantum hardware … to a temperature at which the superconducting qubits achieve superconductivity.”

Google’s tech uses seven stages to cool these devices to near absolute zero, the lowest point on the Kelvin scale (0 kelvins, or roughly -460 degrees Fahrenheit).

The first stage starts above 60 kelvins, or roughly -350 degrees Fahrenheit, and the drops between temperatures get smaller at each stage, reaching around 20 millikelvins (-459 degrees Fahrenheit) by stage seven. This tiered method ensures that there isn’t a dramatic drop in temperature that could damage the quantum components.
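The temperature figures above can be checked with the standard kelvin-to-Fahrenheit conversion, °F = K × 9/5 - 459.67. Below is a minimal Python sketch; the function name is ours, and only the two endpoint temperatures (about 60 K and about 20 mK) come from the filing as described here. The intermediate stage temperatures aren't given, so none are assumed.

```python
def kelvin_to_fahrenheit(kelvin: float) -> float:
    """Convert an absolute temperature in kelvins to degrees Fahrenheit."""
    if kelvin < 0:
        raise ValueError("temperatures below absolute zero are not physical")
    return kelvin * 9 / 5 - 459.67


# Reference points mentioned in the article.
points = {
    "absolute zero": 0.0,
    "stage 1 (approx. 60 K)": 60.0,
    "stage 7 (approx. 20 mK)": 0.020,
}

for label, kelvin in points.items():
    print(f"{label}: {kelvin} K = {kelvin_to_fahrenheit(kelvin):.2f} F")
```

Running it gives about -459.67, -351.67 and -459.63 degrees Fahrenheit, consistent with the rounded figures in the text: by the final stage, the hardware sits only a few hundredths of a degree above absolute zero.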

Quantum computing faces a number of barriers to scaling. These devices are highly sensitive to their environment, which undermines their stability. The most advanced ones have only just passed 1,000 qubits, and, as Google’s patent addresses, they need to be kept near absolute zero.

“This patent really shows that they’re trying to improve their mastery of [quantum], at least on the cooling side in terms of temperature efficiency, because you don’t want to spend too much energy cooling,” said Ashley Manraj, chief technology officer at Pvotal Technologies.

Quantum computers need to be kept in extremely isolated environments, which limits their usefulness and their ability to work alongside classical computers. “Getting the normal computer itself next to a quantum computer ends up being a problem,” said Manraj.

“This patent is interesting because the objective seems to be to maintain efficient stages of cooling and progressively have regular computers be introduced next to the quantum workload,” he added.

While these obstacles remain significant, the advantages of this market may be underestimated, said Manraj. A quantum computer’s main strength is optimization, he said, which in theory can be applied to practically any field: economics, agriculture, logistics, government, and more. “Basically, we don’t even know what we don’t know,” he said.

Researchers are constantly making progress on this tech, said Karthee Madasamy, founder and managing partner at deep tech venture fund MFV Partners, and many have gone from focusing on physics problems to engineering ones.

“We came to the edge of Moore’s law a few years ago,” said Madasamy. “This is really the next generation of computing.”

And while very few working quantum computers exist, they’re something that Google, IBM, Amazon, and practically every hyperscaler have their eyes on, he said. There likely won’t be one clear “winner” in quantum computing, he said, but rather a combined effort of “multiple architectures” that pushes the boundaries.

“Even supercomputers cannot solve many of these problems — it’ll take them thousands of years to solve a problem that can be done in a few minutes [with quantum],” said Madasamy. “I have not seen any other technologies that can leap from current trends of computing to newer things that we can achieve.”

OpenAI Listens Closely

OpenAI wants to hear you better.

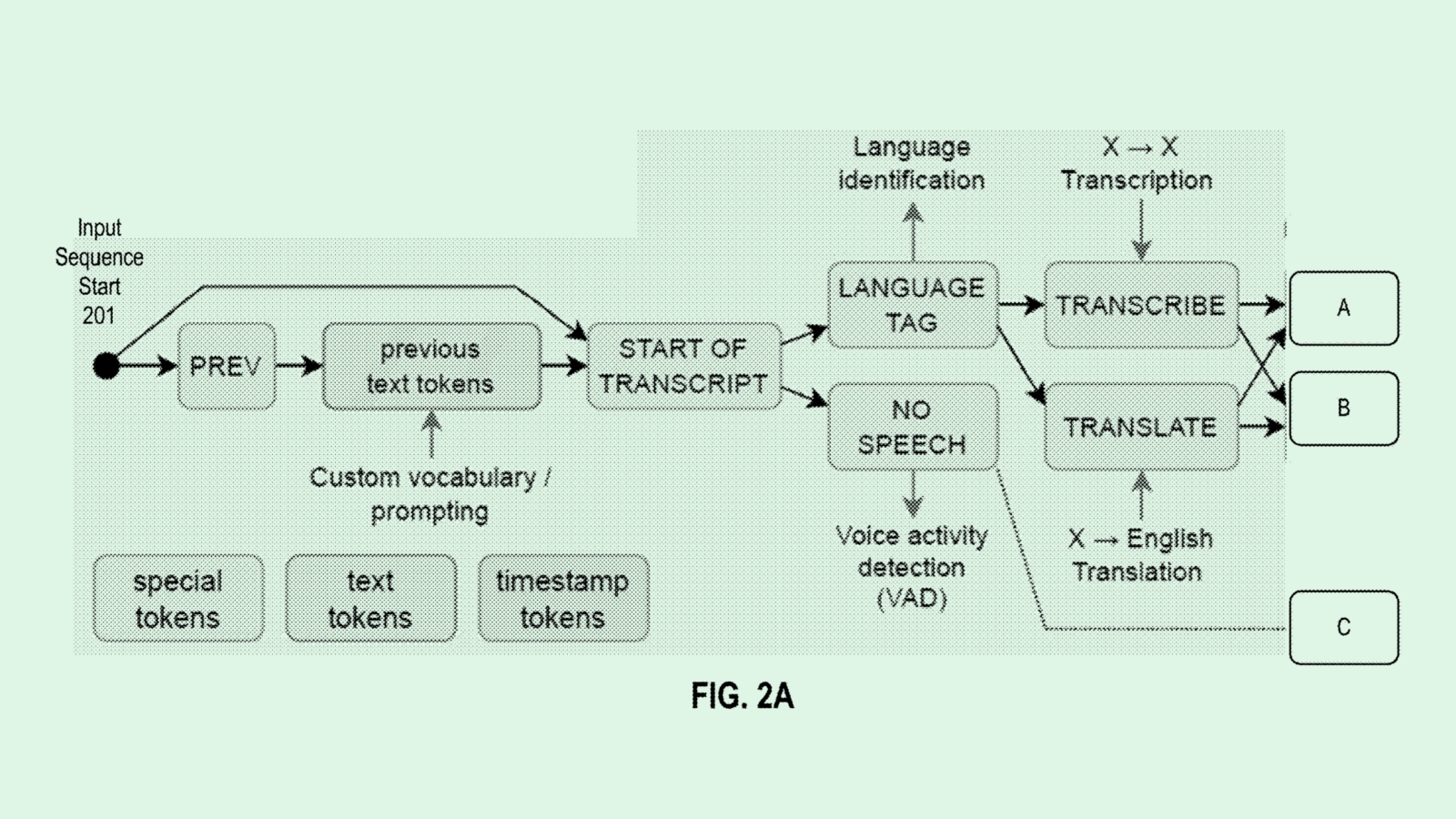

The company is seeking to patent a system for “multi-task automatic speech recognition.” OpenAI’s patent details a voice-activated AI model that’s able to handle tasks in several different languages.

OpenAI’s tech uses a transformer model, a type of model that learns context and relationships within data, with an encoder and a decoder that process streams of audio and turn them into text. The decoder is configured to pick up a “language token,” which specifies the target language for translation, as well as a “task token,” which specifies the task the audio stream calls for.

Additionally, the transformer model is configured to understand “special-purpose tokens,” which guide it to complete specific tasks, and “timestamp tokens,” which time-align audio to text.
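To make the token scheme more concrete, here is a minimal Python sketch of how a decoder prompt might be assembled from a language token, a task token and optional timestamp tokens. The token spellings (`<|startoftranscript|>`, `<|en|>`, `<|transcribe|>`, `<|translate|>`, `<|notimestamps|>`, `<|1.24|>`) are modeled on OpenAI's open-source Whisper release and are assumptions here, as is the 20 ms timestamp grid; the patent's actual vocabulary and ordering may differ, and every helper name is hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical special-purpose tokens, loosely modeled on Whisper's naming.
START = "<|startoftranscript|>"
NO_TIMESTAMPS = "<|notimestamps|>"
TASKS = {"transcribe": "<|transcribe|>", "translate": "<|translate|>"}
TIME_STEP = 0.02  # assumed timestamp resolution, in seconds


def language_token(lang: str) -> str:
    """Build a language token, e.g. 'en' -> '<|en|>'."""
    return f"<|{lang}|>"


def timestamp_token(seconds: float) -> str:
    """Snap a time to the assumed 20 ms grid and render it as a token."""
    snapped = round(seconds / TIME_STEP) * TIME_STEP
    return f"<|{snapped:.2f}|>"


def build_prompt(lang: str, task: str, timestamps: bool) -> List[str]:
    """Prefix telling the decoder which language and which task to handle."""
    if task not in TASKS:
        raise ValueError(f"unknown task: {task}")
    prompt = [START, language_token(lang), TASKS[task]]
    if not timestamps:
        prompt.append(NO_TIMESTAMPS)
    return prompt


def align(segments: Sequence[Tuple[float, float, str]]) -> List[str]:
    """Wrap each text segment in start/end timestamp tokens (time alignment)."""
    out: List[str] = []
    for start, end, text in segments:
        out += [timestamp_token(start), text, timestamp_token(end)]
    return out


# Usage: a translation request with timestamps switched on.
prompt = build_prompt("es", "translate", timestamps=True)
print(prompt + align([(0.0, 1.234, "Hello there.")]))
# ['<|startoftranscript|>', '<|es|>', '<|translate|>', '<|0.00|>', 'Hello there.', '<|1.24|>']
```

The design point the sketch illustrates is that one shared model can switch between tasks simply by changing a few prefix tokens, rather than needing a separate model per task.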

“There are many different tasks that can be performed on the same input audio signal: transcription, translation, voice activity detection, time alignment, and language identification,” OpenAI said in the filing.

This system helps the model learn the relationships between audio snippets and their corresponding text, making it more efficient at translation and other tasks, while the specialized tokens hone the model’s skills in specific contexts.

Voice-operated AI has become a priority for OpenAI. The company unveiled its advanced voice mode back in May with the announcement of GPT-4o, and released the feature to an invite-only group in July before opening it up to a wider audience at the end of September.

Advanced voice mode goes beyond the standard voice mode, handling interruptions and interpreting emotion in a user’s voice. The company also unveiled several new tools earlier this month that can fast-track the development of voice assistants using only a single set of instructions.

However, the company has run into problems with some of its speech recognition tech. OpenAI’s transcription model Whisper has reportedly faced major issues with hallucinations, researchers told the Associated Press, something that’s particularly problematic given the model’s use in healthcare settings.

“It’s an absolute nightmare to have a medical translation tool hallucinate,” said Bob Rogers, Ph.D., the co-founder of BeeKeeperAI and CEO of Oii.ai. “The last thing you want to do is try and push out mission-critical applications and technology that’s not ready for primetime.”

But the tech in this patent (one of the very few filed by OpenAI) could be a “first step” toward making speech recognition models more robust, said Rogers. Having a one-size-fits-all approach to AI may work for some models, but for those used in critical applications, context is often key, he said. “This idea of focusing and creating tokens that control context could be a good start,” Rogers said.

Plus, a major issue with “far-ranging” models is the domino effect that can occur as they learn and grow. “You change things in one place and you get impacts in others — it’s really hard to control,” Rogers said. “Maybe focusing helps with that as well.”

Intel’s ‘Cobots’

Intel is continuing its quest for robot co-workers.

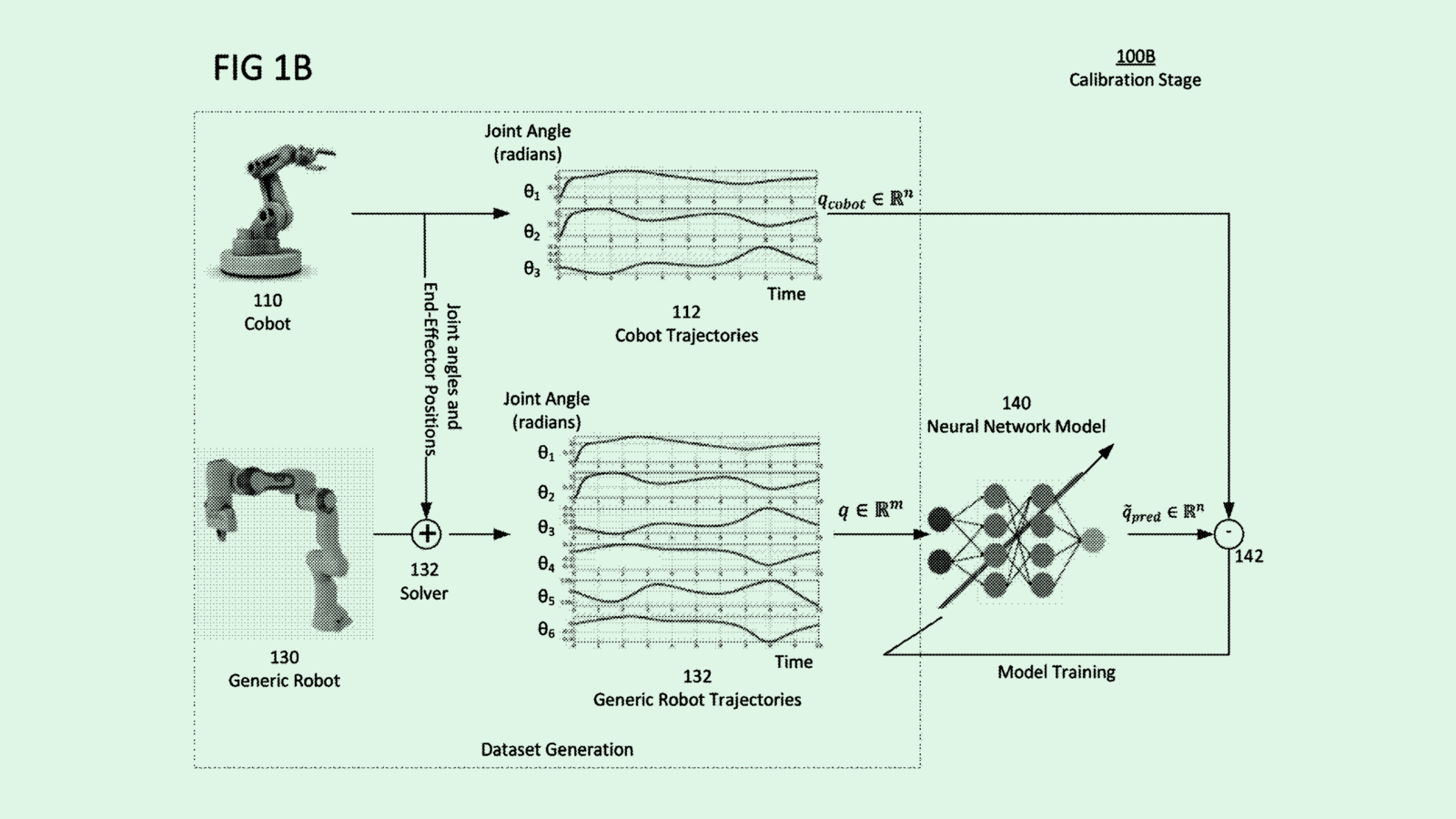

The company is seeking to patent a system for “cobot model generation,” based on a model used for a generic robot. “Cobot” is short for “collaborative robot,” a robot placed in situations that are “not predefined by the manufacturer,” which requires it to be adaptable.

“Unlike traditional industrial robots, cobots operate in diverse and dynamic environments that are often unpredictable,” said Intel. “Cobots frequently need to be programmed by individuals without specialized robotics expertise.”

To make these robots more versatile, Intel’s tech starts off with a generic robotics model that’s been taught redundant “degrees of freedom,” or the independent movements a robot can make, to cover a wide range of motion and “encompass extensive workspaces.” Starting with a general model that’s adaptable to different situations and machines potentially allows for easier scalability.

A trained neural network adapts the trajectories of these movements to each cobot’s specific environment, learning its limitations and improving collision avoidance. The movements are refined iteratively, making cobots better at their tasks over time. This self-training could reduce the need for expert oversight.
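The adapt-and-refine loop described above can be illustrated with a toy example. The Python sketch below starts from a "generic" straight-line path and iteratively refines its waypoints so they clear an obstacle in one particular cobot's workspace. It deliberately swaps the trained neural network for a hand-written smoothing-plus-repulsion update, works in 2D rather than joint space, and uses hypothetical names throughout; it is not Intel's method, only an illustration of iterative trajectory refinement for collision avoidance.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def straight_line(start: Point, goal: Point, n: int) -> List[Point]:
    """A 'generic' path: n evenly spaced waypoints from start to goal."""
    return [(start[0] + (goal[0] - start[0]) * t / (n - 1),
             start[1] + (goal[1] - start[1]) * t / (n - 1)) for t in range(n)]


def refine(path: List[Point], obstacle: Point, radius: float,
           iterations: int = 200, step: float = 0.1) -> List[Point]:
    """Iteratively nudge interior waypoints so they stay smooth and clear a
    circular obstacle. Stand-in for a learned adaptation step; endpoints fixed."""
    pts: List[List[float]] = [list(p) for p in path]
    ox, oy = obstacle
    for _ in range(iterations):
        for i in range(1, len(pts) - 1):
            x, y = pts[i]
            # Smoothness: pull the waypoint toward the midpoint of its neighbours.
            mx = (pts[i - 1][0] + pts[i + 1][0]) / 2
            my = (pts[i - 1][1] + pts[i + 1][1]) / 2
            x += step * (mx - x)
            y += step * (my - y)
            # Collision avoidance: project the waypoint out of the safety radius.
            dx, dy = x - ox, y - oy
            dist = math.hypot(dx, dy)
            if dist < radius:
                if dist == 0:
                    dx, dy, dist = 0.0, 1.0, 1.0  # arbitrary escape direction
                x = ox + dx / dist * radius
                y = oy + dy / dist * radius
            pts[i] = [x, y]
    return [(x, y) for x, y in pts]


def min_clearance(path: List[Point], obstacle: Point) -> float:
    """Smallest distance from any waypoint to the obstacle centre."""
    return min(math.hypot(x - obstacle[0], y - obstacle[1]) for x, y in path)


generic = straight_line((0.0, 0.0), (10.0, 0.0), 11)
adapted = refine(generic, obstacle=(5.0, 0.0), radius=1.5)
print(min_clearance(generic, (5.0, 0.0)), min_clearance(adapted, (5.0, 0.0)))
```

The generic path passes straight through the obstacle, while every waypoint of the adapted path ends up at least the safety radius away. A real planner would also check the segments between waypoints and respect each robot's joint limits, which is where a learned, robot-specific model would earn its keep.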

This isn’t the first time we’ve seen Intel take an interest in robotics. The company has previously sought to patent a way to detect broken sensors for autonomous machines and a system for real-time motion planning.

These patents come as AI robotics draws growing hype. Google and its AI subsidiary DeepMind have plenty of patents in the space and are seeking to bring the capabilities of Google’s Gemini language model to robots. Nvidia’s patent history tells a similar story, including proactive safety and decision-making systems for autonomous machines. And Tesla has been working on humanoid robot companions since 2021.

Though these robots seem like fringe technology, the market for them may have potential: A report from Goldman Sachs found that the addressable market for humanoid robots is projected to reach $38 billion by 2035, driven largely by demand in manufacturing environments, especially if device components get cheaper.

But a big part of making robotic co-workers an everyday reality is making them safer, as patents like Intel’s intend to do. Plus, given that Intel is falling behind in AI as a whole, patents like this one may be an attempt to help it carve out niche IP in emerging AI use cases.

Extra Drops

- Google wants to make your YouTube videos pop. The company is seeking to patent a system for “enhancing the quality of user generated content.”

- Airbus wants to leave no trace. The aerospace developer is seeking to patent a system for “contrail suppression.”

- Netflix wants to get really, really good at telling you what to watch. The company filed a patent application for optimizing a “deep learning recommender model” with reinforcement learning.

What Else is New?

- Meta’s Reality Labs lost $4.4 billion in the most recent quarter, though revenue rose 29% year over year to $270 million.

- Microsoft CFO Amy Hood said that the company’s investment in OpenAI will weigh on its earnings.

- Block-owned Square will expand its corporate card service in the UK, marking the first time the offering has been available outside of North America.

Patent Drop is written by Nat Rubio-Licht. You can find them on Twitter @natrubio__.

Patent Drop is a publication of The Daily Upside. For any questions or comments, feel free to contact us at patentdrop@thedailyupside.com.