Happy Monday and welcome to Patent Drop!

Today, a Meta patent that applies machine learning to AR glasses suggests the future of the company’s metaverse vision may be tied to its ambitious AI bet. Plus: Microsoft wants its language models to be more adaptable, and Uber wants to know how you feel before you get in the car.

Before we get into it, let’s talk about putting AI to work for people. The ServiceNow platform puts AI to work across your business, helping your people work better. Resolving issues faster. Increasing productivity. So your people can focus on the work that matters. Learn more about the AI platform for business transformation.

Let’s jump in.

Meta’s AI Resolution

Meta wants to broaden the picture.

The company is seeking to patent a system for generating “super resolution images” using a “duo-camera artificial reality device.” This tech uses machine learning for artificial reality image reconstruction and generation, fusing Meta’s two main focuses: AI and the metaverse.

Artificial reality glasses have two main requirements: high-resolution imagery and a small form factor, which Meta noted are “in general contrary to each other.” “Single-image super resolution,” which uses machine learning to make low-res images clearer, can in principle deliver both; Meta’s patent aims to improve on that approach specifically for a pair of smart glasses.

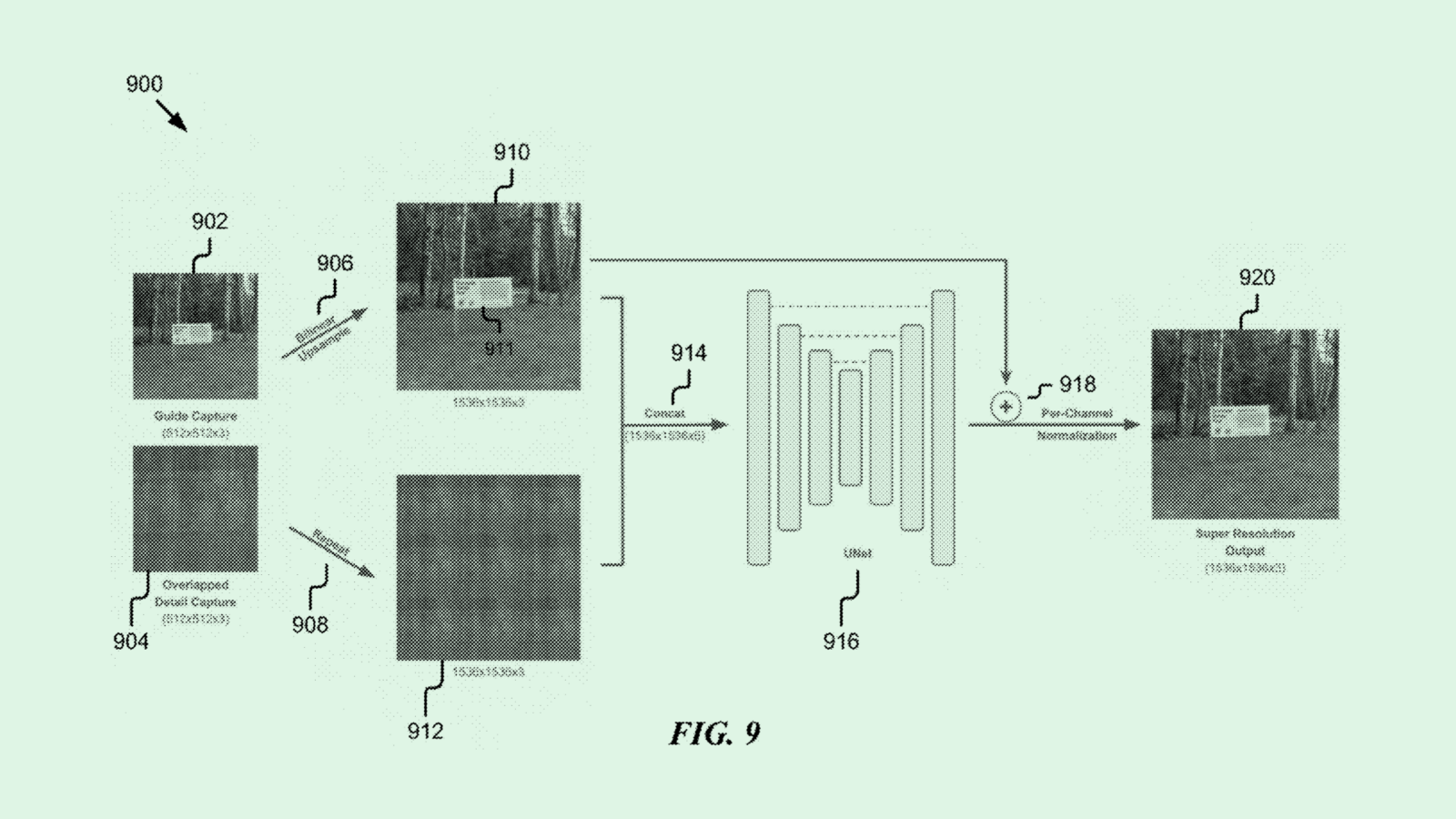

Meta’s process first captures images from multiple cameras positioned on a pair of glasses: a primary “guide camera” with a wider field of view and lower resolution, and one or more secondary “single detail cameras” with a narrower field of view but higher resolution. The filing noted that there may be up to nine of these detail cameras positioned around the frames.

The images taken by the guide camera are “upsampled,” meaning their pixel resolution is increased (upsampling on its own can’t add detail the camera never captured). Meanwhile, the images from the single-detail cameras are “tiled,” combining multiple perspectives of a scene into one image so that the “resulting overlapped detail image can capture the scene in high quality from all different views.”
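The upsample-and-tile step can be sketched in plain Python. This is a minimal illustration, not Meta’s implementation: it assumes grayscale images stored as lists of rows, uses simple nearest-neighbor upsampling (a method chosen here for clarity, not one the filing specifies), and assumes each detail camera’s image has already been aligned to the guide camera’s pixel grid at a known offset. The function names are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical helper names and data layout; not taken from the filing.
# Grayscale image: a list of rows of pixel intensities.
Image = List[List[float]]
# A detail-camera patch plus its (top, left) position on the upsampled canvas.
# Assumes patches are already registered (aligned) to the guide camera's view.
Patch = Tuple[int, int, Image]


def upsample_nearest(img: Image, factor: int) -> Image:
    """Enlarge an image by repeating each pixel factor x factor times.

    This raises the pixel count only; it cannot recover detail the
    low-resolution guide camera never captured."""
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in img
        for _ in range(factor)
    ]


def tile_details(height: int, width: int, patches: List[Patch]) -> List[List[Optional[float]]]:
    """Combine overlapping detail patches into one canvas.

    Pixels covered by several patches are averaged; pixels that no
    detail camera covers are left as None."""
    sums = [[0.0] * width for _ in range(height)]
    counts = [[0] * width for _ in range(height)]
    for top, left, patch in patches:
        for dy, row in enumerate(patch):
            for dx, value in enumerate(row):
                y, x = top + dy, left + dx
                if 0 <= y < height and 0 <= x < width:
                    sums[y][x] += value
                    counts[y][x] += 1
    return [
        [sums[y][x] / counts[y][x] if counts[y][x] else None for x in range(width)]
        for y in range(height)
    ]


guide = [[10, 20], [30, 40]]           # 2x2 wide-angle, low-res frame
guide_up = upsample_nearest(guide, 2)  # 4x4, same content, more pixels
detail = tile_details(4, 4, [(0, 0, [[11, 12], [13, 14]]), (0, 1, [[15, 16]])])
print(detail[0])  # [11.0, 13.5, 16.0, None]
```

The two patches overlap at one pixel, which is why that position holds the average of 12 and 15. Averaging is the simplest possible merge; a real system would weight views by sharpness or viewing angle.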

This image data is then fed to a machine learning model, such as a neural network, which reconstructs a final, high-quality view of the entire scene. The result is a smoother, low-latency viewing experience.
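In the filing, that final reconstruction is handled by a trained model. As a rough, non-learned stand-in, the sketch below fuses the two inputs with a fixed rule: where a detail camera covers a pixel, blend the detail and guide values; elsewhere, keep the upsampled guide pixel. The function `fuse` and its blend weight `alpha` are hypothetical; a neural network would instead learn how to combine the inputs, including how to fill regions the detail cameras miss.

```python
from typing import List, Optional

Image = List[List[float]]
DetailCanvas = List[List[Optional[float]]]  # None where no detail camera sees


def fuse(guide_up: Image, detail: DetailCanvas, alpha: float = 0.8) -> Image:
    """Hypothetical hand-written stand-in for the learned reconstruction step.

    Where a detail pixel exists, mix it with the guide pixel (weighted by
    alpha); otherwise keep the upsampled guide pixel unchanged."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return [
        [
            g if d is None else alpha * d + (1 - alpha) * g
            for g, d in zip(guide_row, detail_row)
        ]
        for guide_row, detail_row in zip(guide_up, detail)
    ]


guide_up = [[10, 10], [30, 30]]
detail = [[12.0, None], [None, None]]
print(fuse(guide_up, detail, alpha=0.5))  # [[11.0, 10], [30, 30]]
```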

Meta has been working hard to keep up with the rest of Big Tech’s AI drive. The company released an early version of Llama 3, the latest iteration of its open-source large language model, in mid-April and has started embedding AI throughout its social media apps.

The company’s push is far from over. In its recent earnings call, Meta noted that capital expenditures would “increase next year as we invest aggressively to support our ambitious AI research and product development efforts.” The company bumped up its spending guidance from $30 billion-$37 billion to $35 billion-$40 billion. Investors were none too pleased with the lavish spending plans: shares tumbled 15% on the news, wiping billions of dollars off the company’s market cap.

As it stands, Meta’s monetization strategy and timeline for its AI are “somewhat vague, particularly in comparison to major tech competitors who are already monetizing generative AI services via cloud computing,” said Ido Caspi, research analyst at Global X ETFs.

But the company’s broad user base across its family of apps gives it plenty of opportunities to make money from these models, Caspi noted. Plus, its access to robust data sets and its open-source approach can only benefit it in the long run. “We anticipate that Meta will emerge as one of the frontrunners in the AI race,” Caspi said.

This patent also signals that the company’s mixed-reality technology could be a use case for its AI research, said Jake Maymar, AI strategist at The Glimpse Group.

As with its AI work, the company has invested billions in its metaverse technology with little revenue to show for it. But using machine learning to create a clearer picture and add new augmented reality capabilities, while slimming the form factor down from headsets to glasses, could be the company’s plan to make the metaverse experience more “frictionless,” Maymar said.

“The biggest hurdle for AR is the form factor,” said Maymar. “Ultimately, the first company that takes machine learning, computer vision, and neural networks and starts applying it to vision in a way that’s fashionable – it’s going to be a no-brainer.”

The AI Platform for Business Transformation

The ServiceNow platform puts AI for people to work across your business.

Right now, there are a lot of AI voices out there making big noise and even bigger promises. But which AI is the best? Which one actually makes a difference? Truth is, AI is only as powerful as the platform it’s built into. We’re talking a single, intelligent platform that’s made for this moment. One you can trust with all your customer and business data to harness the true power of AI. To help your people do what they do even better.

With ServiceNow, AI touches every corner of your business—transforming how everything works.

Learn more about the AI platform for business transformation.

Microsoft’s Broader Language Model

Microsoft wants its AI models to pick up on subtleties.

The company is seeking to patent a system for “large language model utterance augmentation.” The goal is to broaden a language model’s grasp of user queries by recognizing when certain phrases are “semantically related to one another.”

Conventional training of large language models is often tedious, as it involves “brainstorming complex regular expressions or curating massive, labeled datasets containing an exhaustive collection of possible utterances,” Microsoft noted. This patent aims to upend that process by helping models understand the intent of a user’s request, rather than just the words they’re saying.

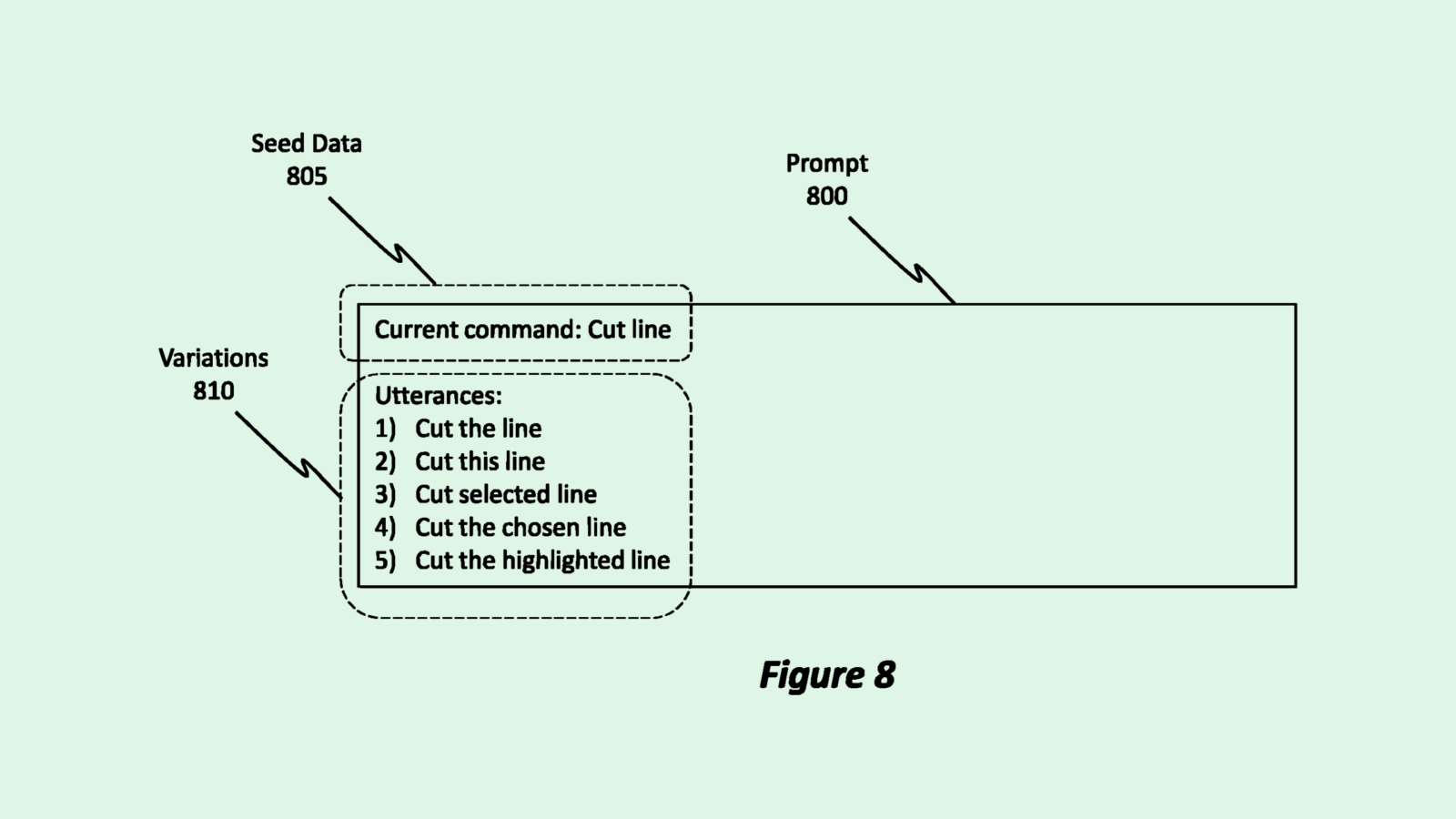

Microsoft’s system starts with a large language model trained on an “arbitrary corpus of language training data.” The system then feeds that model “seed data”: a handful of semantically linked phrases, such as different ways of wording the same request.

The language model uses that seed data to generate even more variations, broadening the set. When a user later submits any of these phrases as a query, the model recognizes it as belonging to the group and triggers the associated command.
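The augment-then-match flow can be sketched as follows. This is an illustration under stated assumptions, not Microsoft’s system: the language-model call is replaced by a hypothetical `fake_llm_paraphrase` function that returns canned variations, matching is a plain lookup on normalized text, and the command name `VOLUME_DOWN` is made up for the example.

```python
from typing import Callable, Dict, List, Optional


def augment_seed(seed: List[str], paraphrase: Callable[[str], List[str]]) -> List[str]:
    """Expand a seed group of related phrases with generated variations.

    In the patent, a large language model produces the variations; here the
    `paraphrase` argument stands in for that model call."""
    expanded = list(seed)
    for phrase in seed:
        for variant in paraphrase(phrase):
            if variant not in expanded:
                expanded.append(variant)
    return expanded


def build_intent_index(groups: Dict[str, List[str]]) -> Dict[str, str]:
    """Map every normalized phrase to the command its group triggers."""
    return {
        phrase.lower().strip(): command
        for command, phrases in groups.items()
        for phrase in phrases
    }


def resolve(query: str, index: Dict[str, str]) -> Optional[str]:
    """Look up the command for a user query, ignoring case and outer spaces."""
    return index.get(query.lower().strip())


def fake_llm_paraphrase(phrase: str) -> List[str]:
    """Hypothetical stand-in for an LLM call; returns canned variations."""
    canned = {
        "turn down the music": ["lower the music", "make the music quieter"],
        "lower the volume": ["volume down"],
    }
    return canned.get(phrase, [])


seed = ["turn down the music", "lower the volume"]
index = build_intent_index({"VOLUME_DOWN": augment_seed(seed, fake_llm_paraphrase)})
print(resolve("Lower the music", index))  # VOLUME_DOWN
print(resolve("play some jazz", index))   # None
```

Only the two seed phrases were written by hand, yet “lower the music” resolves to the command because the generated variation was added to the group ahead of time.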

For instance, in the context of a voice-activated AI model, if you say “lower the music,” this system may link it to related phrases like “turn down the music” or “lower the volume,” and understand what to do. In the context of a chatbot or copilot, if you ask it to search for a certain file, but misspell the file name, it would be able to complete the request anyway.
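For the misspelled-file case, one simple way to tolerate typos is string-similarity matching. Note the swap: the sketch below uses Python’s standard-library `difflib.get_close_matches`, which scores character-level similarity and is not the semantic, model-based approach the patent describes. The function name `find_file` and the file names are hypothetical.

```python
import difflib
from typing import List, Optional


def find_file(requested: str, filenames: List[str], cutoff: float = 0.6) -> Optional[str]:
    """Hypothetical helper: return the existing file name closest to a possibly
    misspelled request, or None if nothing scores at least `cutoff`."""
    # Compare case-insensitively, but return the file's original spelling.
    by_lower = {name.lower(): name for name in filenames}
    matches = difflib.get_close_matches(requested.lower(), list(by_lower), n=1, cutoff=cutoff)
    return by_lower[matches[0]] if matches else None


files = ["Q3_budget.xlsx", "team_roster.docx", "launch_plan.pptx"]
print(find_file("q3_budjet.xlsx", files))  # Q3_budget.xlsx
print(find_file("zzz", files))             # None
```

Raising `cutoff` makes the matcher stricter, trading fewer wrong guesses for more requests it refuses to complete.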

For the past year, Microsoft has worked arm-in-arm with OpenAI on developing the startup’s large language models and integrating them into several of Microsoft’s Copilot products. To date, Microsoft has invested $13 billion in the startup.

But the tech giant seems to be working on a large language model of its own. Last week, The Information reported that Microsoft is training a large language model called MAI-1 that could reportedly rival OpenAI’s GPT-4. The model’s development is being overseen by Mustafa Suleyman, co-founder of DeepMind (now Google DeepMind) and former CEO of AI startup Inflection.

At around 500 billion parameters, the model is far larger than any of Microsoft’s current open-source models (OpenAI’s GPT-4 reportedly has about one trillion) and is likely to be much more expensive, The Information noted. The company may debut the model at its Build conference, which begins May 21.

While the company hasn’t clarified what it intends to use this model for, Microsoft CTO Kevin Scott took to LinkedIn last week to say that its partnership with OpenAI isn’t likely to go away. “There’s no end in sight to the increasing impact that our work together will have,” Scott said.

And though little is publicly known about the company’s new AI, filings like this one may offer some insight into how it trains and deploys its large language models.

Uber’s Ride Therapy

Uber wants to know how you’re really feeling when you call that 2 a.m. ride.

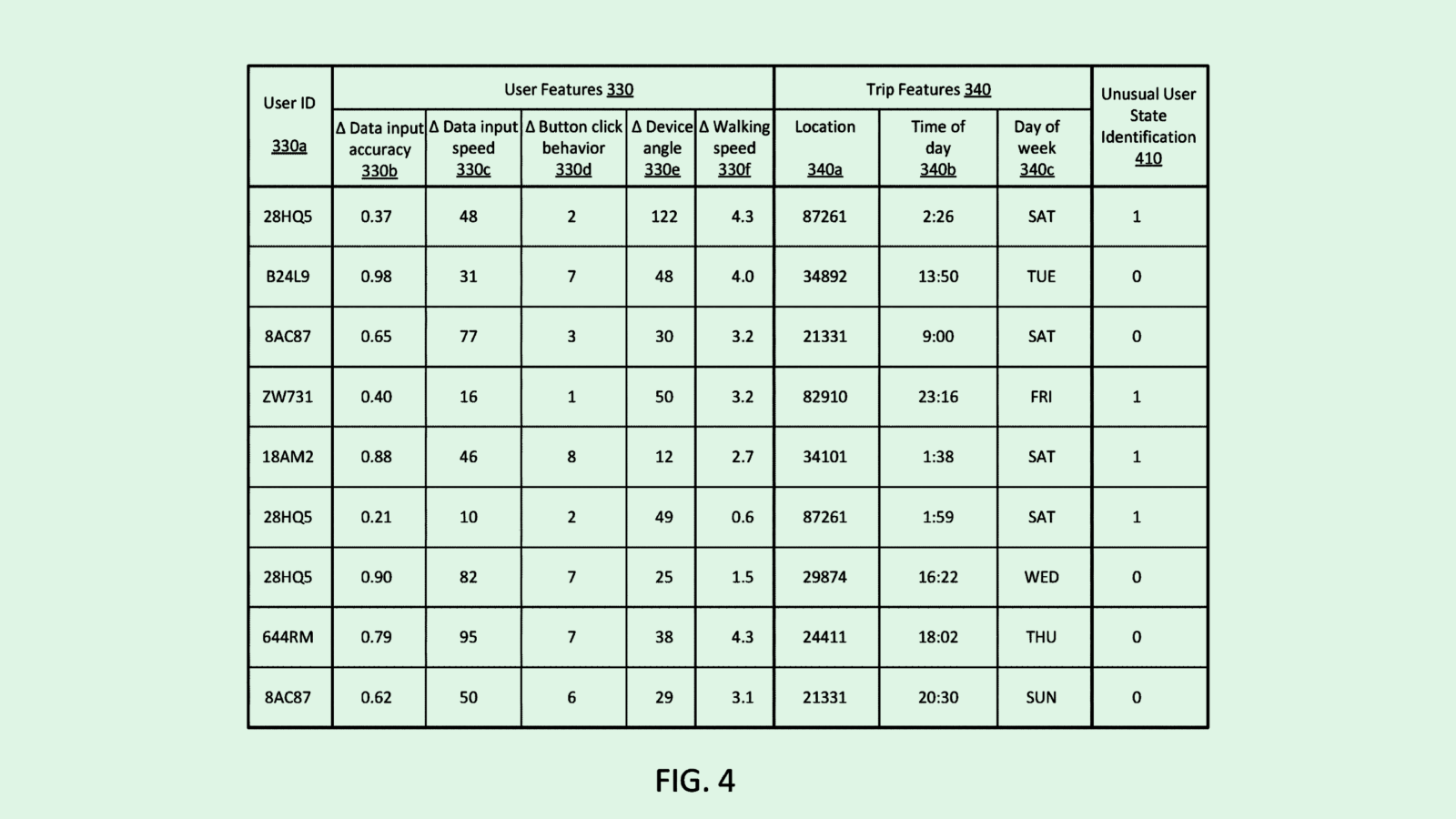

The company wants to patent a system for “predicting user state using machine learning.” Uber’s tech monitors a user’s past and present behavior on rides to determine what normal versus “uncharacteristic” behavior may look like.

Uber said in the filing that safety incidents or bad ride experiences may occur when a user or provider acts uncharacteristically. By gaining more context about a user’s current state, whether physical or mental, the tech aims to help mitigate safety or experience issues.

Uber’s tech uses a machine learning model to identify patterns in the user’s past trip data to detect if a ride being called is indicative of an unusual state. This data can include trips that were monitored as they occurred, user ratings, or feedback from drivers or riders about trips. It can also include more in-depth data like texts sent to drivers, movement of user devices, time and destination of the trip, and even the speed at which a user interacts with the Uber app.

With this trove of data, the system aims to predict whether a user may act out of character before they even get into the vehicle. For example, if a user generally takes an Uber to and from work but calls a ride home from a bar at midnight on a Tuesday after a happy hour that went long, the system may flag that as out of the ordinary.
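One simple way to illustrate “uncharacteristic” is to compare a new ride request with the user’s own history. The sketch below swaps the patent’s machine learning model for a basic statistical baseline (the average per-feature z-score); the function `anomaly_score`, the feature names, the `min_sigma` floor, and the sample numbers are all hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, List

Trip = Dict[str, float]  # hypothetical per-trip features


def anomaly_score(history: List[Trip], current: Trip, min_sigma: float = 0.5) -> float:
    """Average absolute z-score of the current request vs. the user's past trips.

    min_sigma is an assumed floor that keeps features with no past variation
    (e.g. a user who has never ridden late at night) from dividing by zero."""
    z_scores = []
    for feature, value in current.items():
        past = [trip[feature] for trip in history]
        sigma = max(pstdev(past), min_sigma)
        z_scores.append(abs(value - mean(past)) / sigma)
    return mean(z_scores)


# Hypothetical commute history: daytime rides to familiar places, steady app use.
history = [
    {"late_night": 0, "dest_km_from_usual": 0.2, "taps_per_min": 30},
    {"late_night": 0, "dest_km_from_usual": 0.0, "taps_per_min": 28},
    {"late_night": 0, "dest_km_from_usual": 0.5, "taps_per_min": 32},
    {"late_night": 0, "dest_km_from_usual": 0.1, "taps_per_min": 29},
]
commute = {"late_night": 0, "dest_km_from_usual": 0.3, "taps_per_min": 31}
bar_run = {"late_night": 1, "dest_km_from_usual": 6.0, "taps_per_min": 12}
print(round(anomaly_score(history, commute), 2))  # low: looks like a normal commute
print(round(anomaly_score(history, bar_run), 2))  # high: out of the ordinary
```

A learned model could weigh features differently and capture interactions (late night matters more when the destination is unfamiliar), which a flat average of z-scores cannot.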

The system can respond in a number of ways, including changing the time of pick-up, modifying pick-up or drop-off locations to ones that are “well lit and easy to access,” matching users with more experienced providers, or preventing users from getting into carpools. In some cases, the user may not be matched with a provider at all.
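The filing lists possible responses rather than exact rules for choosing among them. Purely as an illustration, a tiered policy could map an anomaly score (higher meaning more out of character) to those responses; the function `choose_responses`, both thresholds, and the action labels below are hypothetical.

```python
from typing import List

# Hypothetical labels for the ride adjustments described in the filing.
ADJUSTMENTS = [
    "adjust_pickup_time",
    "move_pickup_or_dropoff_to_well_lit_spot",
    "match_experienced_driver",
    "disable_carpool",
]


def choose_responses(score: float, adjust_at: float = 2.0, refuse_at: float = 6.0) -> List[str]:
    """Map an anomaly score to responses using two assumed thresholds.

    Below adjust_at the ride proceeds normally; from adjust_at, apply ride
    adjustments; from refuse_at, do not match the rider with a driver."""
    if adjust_at > refuse_at:
        raise ValueError("adjust_at must not exceed refuse_at")
    if score >= refuse_at:
        return ["no_match"]
    if score >= adjust_at:
        return list(ADJUSTMENTS)
    return []


print(choose_responses(0.4))  # []
print(choose_responses(3.1))  # all four adjustments
print(choose_responses(8.5))  # ['no_match']
```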

Uber already offers different safety features, including speed limit alerts, real-time ID checks, and phone number anonymization. The company also previously sought to patent a system for detecting “location-spoofing” to keep track of a driver’s location.

The tech in this patent could serve multiple purposes. For one, rides tailored to a user’s current state could open the door to personalized services at a premium, something that may boost sales after the company’s earnings swerved back into unprofitability.

Another likely use case is boosting safety for both drivers and riders. This kind of tech aligns with calls for more in-car surveillance, such as requiring cameras, following hundreds of lawsuits alleging that Uber hasn’t done enough to prevent assaults.

But building behavior profiles of users may raise privacy concerns. This tech relies on tracking not just a user’s ride history and ratings, but up-close data like their typing speed, accuracy, and movements to determine whether they’re acting strangely. And given that AI isn’t great at reading human emotional or mental states, Uber’s tech may struggle to draw reliable conclusions from this kind of tracking.

Extra Drops

- Google wants to gauge the atmosphere of your neighborhood. The company wants to patent a temporal map for “neighborhood and regional business vibe and social semantics.”

- Nvidia wants your video games to move more naturally. The company wants to patent “physics-based simulation” of character motion using generative AI.

- Amazon wants to make Alexa more conversational. The company filed a patent application for systems to provide “supplemental information” in addition to a vocal request.

What Else is New?

- Squarespace is going private in a $7 billion, all-cash private equity deal with Permira. The move follows years of turbulence for the company in the public markets.

- Amazon’s autonomous vehicle startup Zoox is under investigation by the National Highway Traffic Safety Administration after two crashes in which its vehicles braked unexpectedly.

- On our intelligent platform, AI isn’t just a promise. It’s happening today. Simply. Seamlessly. Securely. Freeing your people to do the real work. The work that matters. Put AI to work for your employees, with amazing self-service experiences. Put AI to work for your service reps, to quickly turn intelligence into action. Put AI to work for developers, so they can code faster than ever. Learn more about the AI platform for business transformation. *

* Partner

Patent Drop is written by Nat Rubio-Licht. You can find them on Twitter @natrubio__.

Patent Drop is a publication of The Daily Upside. For any questions or comments, feel free to contact us at patentdrop@thedailyupside.com.