Happy Thursday and welcome to Patent Drop!

Today, Google’s patent to scrape radio stations for AI training could provide a pool of useful data, though it may face a copyright dilemma. Plus, Hyundai is bringing cybersecurity to its connected vehicles; and Microsoft wants to track down cloud attackers.

And a programming announcement: Monday’s newsletter will be a special edition focusing on AI’s impact on election integrity.

Now, let’s dive in.

Google’s Data Radio Station

Google wants its AI models to listen to the radio.

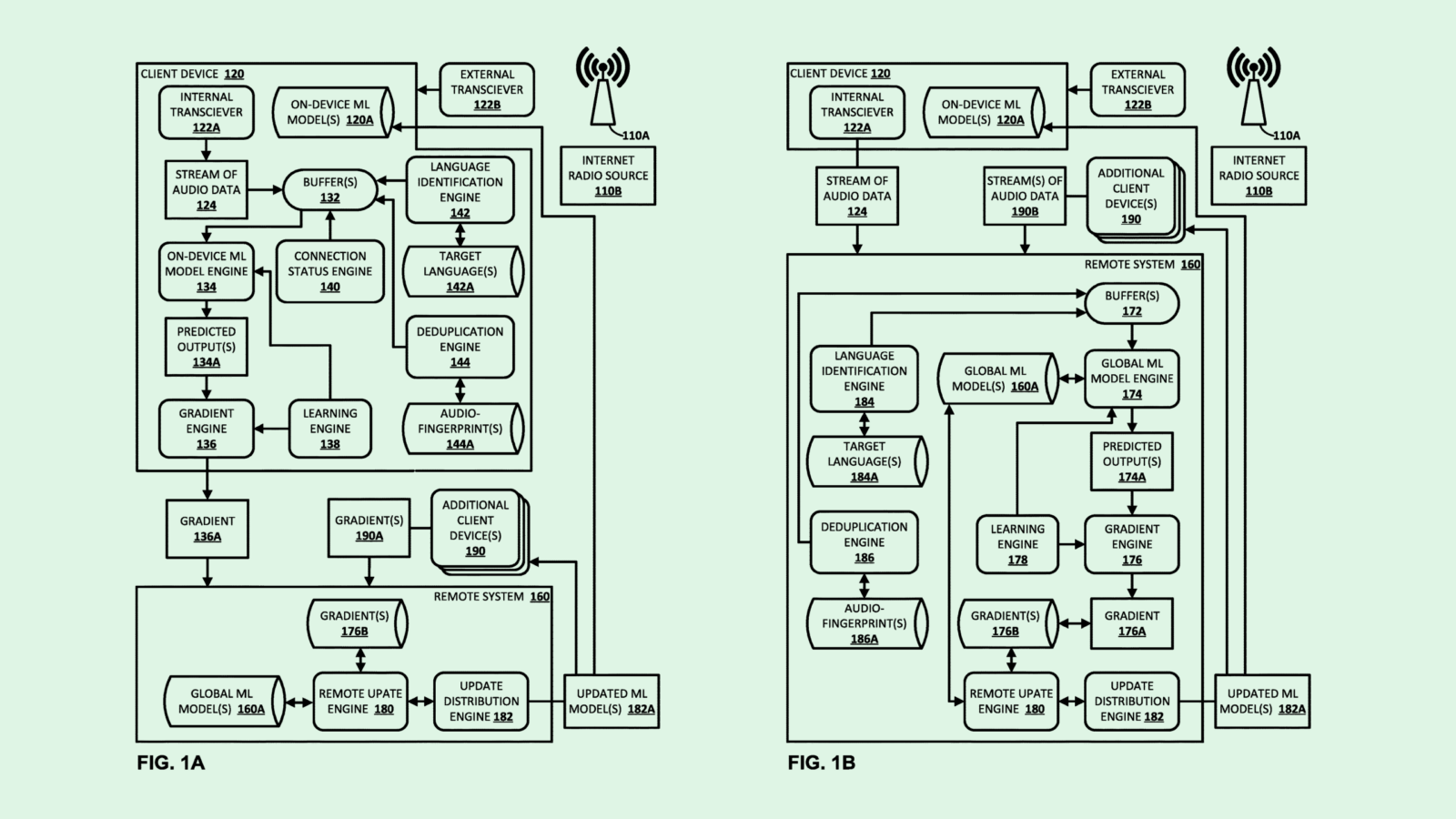

The company is seeking to patent a way to train machine learning models on radio station audio with “ephemeral learning and/or federated learning.” Basically, Google’s system trains audio-based AI models on streams from tons of different radio stations around the globe.

Because publicly available speech data repositories may be limited, Google said in the filing that using radio streams will “expand the practicality of using these different ML techniques beyond explicit user inputs and … broaden the diversity of data utilized by these different ML techniques.”

This system allows machine learning models to learn less-common languages (Google calls these “tail languages”) via local radio stations. To keep these models from using the same audio streams over and over again – for example, if a commercial repeats once an hour – Google’s system employs “deduplication techniques” to remove those samples and prevent overfitting.
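
As a rough illustration of the deduplication idea, here is a minimal sketch in Python. The filing does not say how duplicates are detected, so the exact-match SHA-256 fingerprint, the `deduplicate` helper, and the sample byte strings are all assumptions made for illustration; real broadcast audio would likely need a perceptual fingerprint that tolerates noise.

```python
import hashlib


def deduplicate(chunks):
    """Yield each audio chunk only the first time its fingerprint is seen.

    Assumption: an exact hash stands in for whatever fingerprinting
    a production system would use on real audio.
    """
    seen = set()
    for chunk in chunks:
        fingerprint = hashlib.sha256(chunk).hexdigest()
        if fingerprint in seen:
            continue  # repeated segment (e.g. an hourly commercial): skip it
        seen.add(fingerprint)
        yield chunk


# Hypothetical stream in which the same commercial airs twice.
stream = [b"news-segment", b"car-ad", b"weather", b"car-ad"]
unique_chunks = list(deduplicate(stream))
```

Only the first copy of the repeated ad reaches training, which is the property that keeps a model from overfitting to it.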

Google’s system relies on two kinds of machine learning techniques: federated and ephemeral. Federated learning trains a model across a number of devices and servers and then aggregates the results, allowing the data to stay local to the device that collected it. Ephemeral learning is a subset of federated learning where the audio data is never stored by Google at all, but rather a continuous stream of data is simply used for training and then discarded.
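
To make the two ideas concrete, here is a toy sketch in Python, not Google’s method. It assumes a one-weight model (`y = w * x`), plain averaging of client weights, and invented sample data. Each client reads its samples from a one-pass generator, a simple stand-in for using a stream and then discarding it.

```python
def local_update(weight, samples, lr=0.1):
    """Run one pass of gradient descent over a stream of (x, y) samples.

    Each sample is used once and never stored, mimicking ephemeral learning.
    """
    for x, y in samples:
        error = weight * x - y
        weight -= lr * error * x
    return weight


def federated_average(client_weights):
    """Combine client results; only weights, never raw samples, are shared."""
    return sum(client_weights) / len(client_weights)


def stream(points):
    """One-pass generator standing in for a live audio stream."""
    for point in points:
        yield point


# Hypothetical data held by two clients, all following y = 2 * x.
client_data = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (1.5, 3.0)],
]

global_weight = 0.0
for _ in range(20):  # 20 rounds of local training followed by aggregation
    updates = [local_update(global_weight, stream(d)) for d in client_data]
    global_weight = federated_average(updates)
```

After a few rounds `global_weight` settles near 2.0, even though the aggregation step never sees a single raw sample.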

Using these techniques, Google can constantly train and update audio-based models with the data of as many radio stations as it wants. The end result of all of this training is an ever-evolving model that can understand a wide range of phrases in many languages, even those that aren’t “commonplace or well-defined,” making it more effective at responding to commands.

A major benefit of Google’s training method is the privacy-preserving element. For one, rather than getting audio data directly from users to train AI models, as some of Google’s previous patents propose, this system uses voice data that’s already publicly available, thereby preserving user privacy. Plus, the use of federated and ephemeral learning keeps the audio data of those radio stations safe by never storing it within Google’s systems in the first place.

But there may be some privacy caveats that Google has to consider before training with a system like this, said Vinod Iyengar, VP of product and go-to-market at ThirdAI. If Google is wantonly drawing audio from radio stations globally, it may need to have a system in place for anonymizing that data, in case someone’s private information is shared on the radio.

“For example, let’s say someone’s phone number is mentioned on the broadcast … or names of people might be mentioned that they might want to remove,” Iyengar said. “Those are things that might be something that they should consider.”

And just because this data is publicly available doesn’t mean that Google won’t run into copyright pushback, Iyengar said. NPR, for example, notes that all of its content is covered by copyright protection and must be cleared for use. And if this system takes in music as part of its data collection, it opens another can of worms, as music use and licensing tend to be tightly regulated by labels.

The issue of fair use of data has been a hot topic since the advent of large language models, said Iyengar. A major example of this occurred in late December when The New York Times sued OpenAI and Microsoft for alleged copyright infringement related to the unauthorized use of the publication’s stories. OpenAI has since been sued by more media outlets.

In Google’s case, a problem could arise when this data is used to train AI models that the company makes revenue from, and that revenue isn’t shared with the original data source, said Iyengar. This is especially true if Google has nowhere else to get that data, such as when training a speech model to understand local dialects where little data is available.

“These radio broadcasts are coming from underrepresented languages,” said Iyengar. “If I were Google, I’d be proactively trying to at least share some of the pie and give them some royalties or share revenues.”

Hyundai’s Cyber Protection

Hyundai wants to protect your car from more than just crashes.

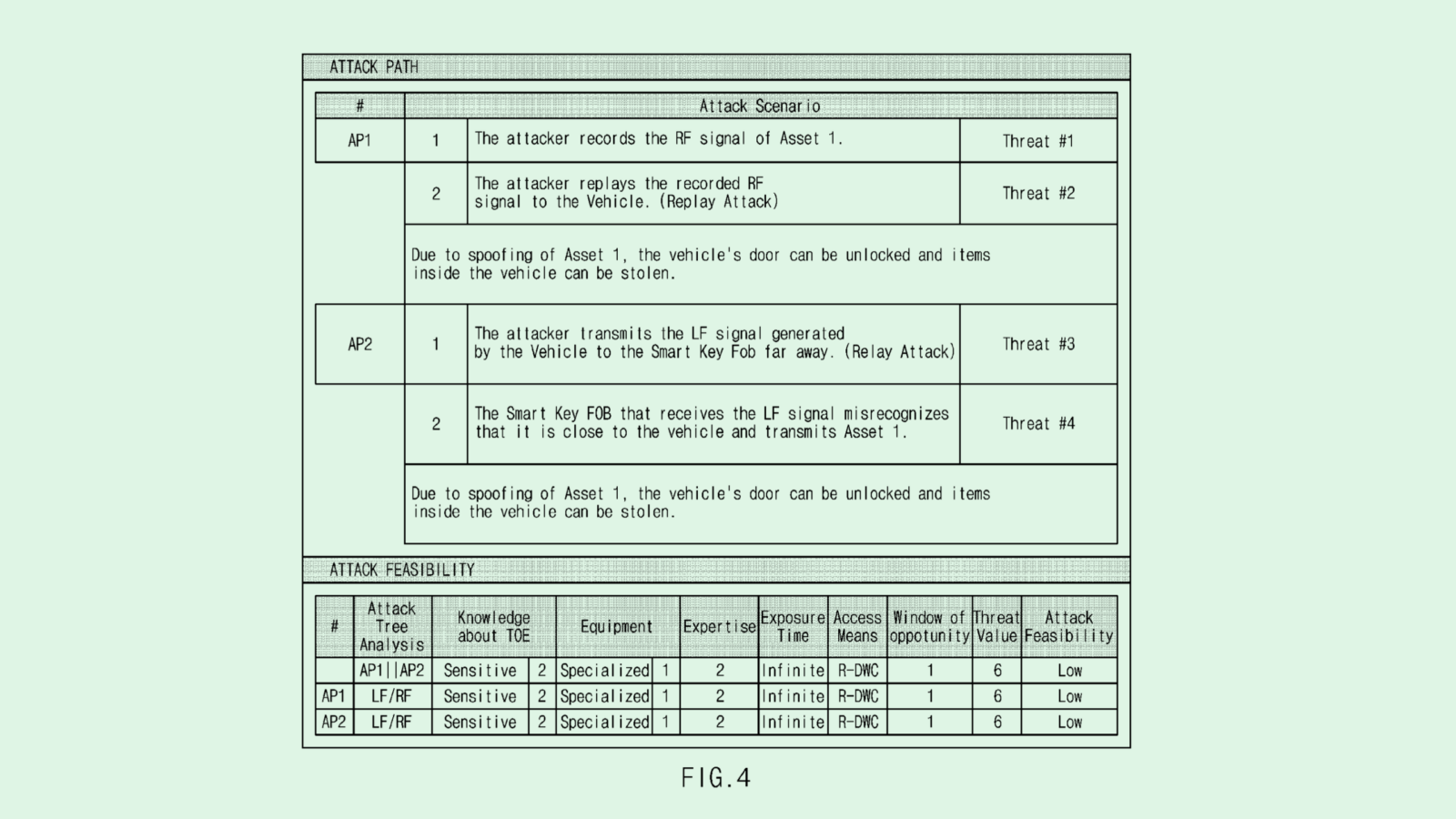

The South Korea-based automaker filed a patent application for “vehicle cyber security and attack path analysis.” Hyundai’s system aims to prevent hackers from being able to access the slew of data that its high-tech vehicles collect.

As vehicles become more connected and autonomous, and “software complexity increases, vulnerabilities inherent in software increase, and cyber threats such as hacking to exploit them have … also rapidly increased,” Hyundai noted in the filing. “Therefore, cyber security has become a very important factor in vehicle design.”

Hyundai’s system stores what it calls “threat scenarios” for how an in-vehicle control system could be vulnerable to cyberattacks, as well as the “attack paths” a bad actor could take to complete the attacks.

The filing identifies different threat patterns that the vehicle’s computer systems could be vulnerable to, such as spoofing, tampering, or information disclosure. Hyundai’s system also deciphers different “damage scenarios,” or the different kinds of data or functions that could be put at risk in the face of an attack.

In practice, this system would essentially run attack scenarios on different parts of the car to figure out its vulnerabilities. For example, its simulations may determine that a car’s Bluetooth system is vulnerable to tampering, its cellular connection system is vulnerable to information disclosure, and its GPS is at risk of spoofing attacks.
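
One way to picture attack path analysis is as a search over a map of how in-vehicle components connect. The sketch below is a loose illustration in Python, not Hyundai’s implementation: the component names, the `LINKS` map, and the `brake_controller` target are all hypothetical, and only the three threat types come from the example above.

```python
# Hypothetical entry points and the threat each is assumed to be exposed to.
ENTRY_THREATS = {
    "bluetooth": "tampering",
    "cellular": "information disclosure",
    "gps": "spoofing",
}

# Hypothetical map of which component can reach which inside the vehicle.
LINKS = {
    "bluetooth": ["infotainment"],
    "cellular": ["telematics"],
    "gps": ["infotainment"],
    "infotainment": ["gateway"],
    "telematics": ["gateway"],
    "gateway": ["brake_controller"],
}


def attack_paths(start, target, path=None):
    """Return every loop-free route from an entry point to a target."""
    path = (path or []) + [start]
    if start == target:
        return [path]
    found = []
    for nxt in LINKS.get(start, []):
        if nxt not in path:  # avoid revisiting a component
            found.extend(attack_paths(nxt, target, path))
    return found


# For each entry point, pair its threat type with the paths it opens up.
report = {
    entry: (threat, attack_paths(entry, "brake_controller"))
    for entry, threat in ENTRY_THREATS.items()
}
```

Each entry in `report` ties a threat pattern to the routes an attacker could follow, roughly the kind of threat scenario and attack path pairing the filing describes storing.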

If patent activity from automakers has revealed anything, it’s that these companies are very interested in making their vehicles more high-tech. For example, Tesla’s vehicles are already loaded with user tracking features, and the company has sought to patent a “personalization system” to automatically adjust vehicle features to user preferences. Ford, meanwhile, has filed applications for things like biometric car keys and driver data usage for tracking test drives.

Automakers and tech firms alike are also building up their stables of autonomous technology, which requires far more advanced tracking capabilities than the average car has traditionally come equipped with.

Hyundai’s patent exemplifies a major issue that automakers face in developing connected cars: As these vehicles become more advanced, the amount of personal user data they collect to operate certain services grows larger. That inherently makes them more vulnerable to cyberattacks, said Bob Bilbruck, CEO of consulting firm Captjur.

“All these services that are going into the car are potentially a breach point that could be hacked or taken over,” Bilbruck said. “Your information can be taken wherever it resides. And the more connected this world gets, the more risks arise.”

Though one of the most secure ways to protect user data is simply not to collect it, in-vehicle data collection isn’t likely to stop, said Bilbruck. Automakers are walking a fine line with overcollection of personal data, he said, especially as the market for personalization features and in-vehicle AI forges ahead.

“I don’t think there’s going to be less data coming out of those cars,” he said. “Because it’s a consumer based product, it’s going to be more rather than less.” Because of this, he said, automakers need to continue looking at ways to safeguard that data – for both the protection of consumers and protection of their brands.

Microsoft Defends the Cloud

Microsoft wants to keep the cloud locked up.

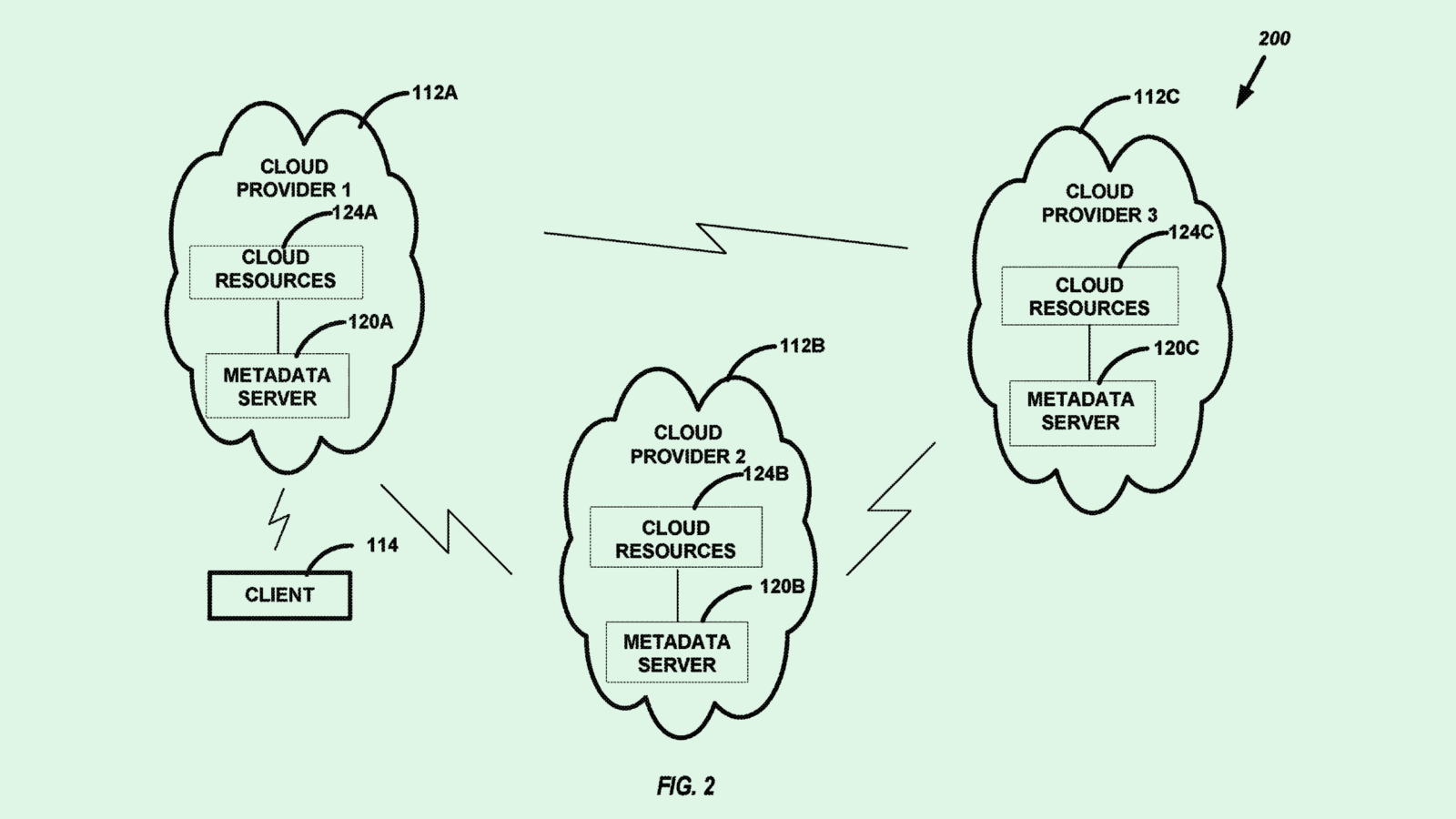

The tech firm is seeking to patent a method of “cloud attack detection” that uses API access analysis. Microsoft’s system essentially tracks user access data related to cloud programs to proactively figure out if an attempted log-in is indicative of a cyber attack.

“Attackers that get access to cloud compute resources through the API can leverage easy access to the metadata server to steal a token,” Microsoft said in the filing. “An improved cloud resource security method can detect attempts to steal cloud identities.”

Microsoft’s system uses a machine learning model to monitor and detect anomalies in an API access log. For reference, an API in this context is what allows cloud services to communicate with one another, and access logs just represent the requests users have made to bridge that communication and access certain features.

This model catches anomalies by essentially tracking if an access request came from the cloud provider that the resource is stored in. For example, if a user is seeking to access a program in Microsoft Azure, but the request comes from a user of Google Cloud, then this system may flag it as needing further vetting.
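
The provider-mismatch check can be sketched in a few lines of Python. The filing describes a machine learning model, so this simple rule is only a stand-in, and the `AccessRequest` fields, provider labels, and user names are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user: str
    resource_provider: str  # cloud where the requested resource lives
    source_provider: str    # cloud the request originated from


def needs_vetting(request: AccessRequest) -> bool:
    """Flag requests that originate outside the resource's own cloud."""
    return request.source_provider != request.resource_provider


# Hypothetical entries from an API access log.
access_log = [
    AccessRequest("alice", "azure", "azure"),
    AccessRequest("mallory", "azure", "google-cloud"),
]

flagged_users = [r.user for r in access_log if needs_vetting(r)]
```

Here only the request that crosses providers is flagged for further vetting; a trained model would presumably weigh many more signals than this single comparison.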

When the system deems that a user attempting to gain access is fishy, it performs what Microsoft calls a “security mitigation action.” This could be checking the legitimacy of the log-in access request, performing an anti-malware scan on the resource the user is attempting to log into, or removing the user’s access permissions entirely. It’ll also alert both Microsoft operators and the operators of the other cloud service platforms (such as Google Cloud or AWS).

A system like this adds an additional layer of security and mitigates attacks before anything bad happens, rather than reacting to them after the fact.

Cloud cyber attack defense is a major concern among cloud providers, especially in the age of AI, said Trevor Morgan, VP of product at OpenDrives. AI models can be trained using GPUs via cloud providers, a service which Microsoft offers through Azure. Plus, AI training requires tons of data, a lot of which may be sensitive or personal depending on the model being trained.

“AI is going to magnify the volume of data (transfers) and requests – that curve is going to start going up, if not exponentially,” said Morgan. “And the more automation you have, the less human eyeballs are on things. So this (patent) is preparing for what AI is about to do with cloud computing.”

Cybersecurity patents like this also give Microsoft a stronger play for market share against Amazon’s AWS, which it battles in both the cloud and AI markets. And according to CRN, though AWS is still the industry leader, Microsoft has started closing the gap, ending the fourth quarter with a market share of 24%, compared with 31% for AWS.

This patent in particular adds an additional layer of security to cloud environments, seemingly as a method of due diligence as Microsoft continues to work on lucrative government contracts. While government agencies have opened up to the idea of using cloud computing, they often have a “zero trust policy,” which “ultimately means trust nothing, and don’t let anyone access anything,” said Morgan.

“That doesn’t work in the real world,” said Morgan. “But our federal government does not take kindly to cloud platforms being compromised in any way … So this is just a means of being stringent.”

Extra Drops

- IBM wants to help you get your house in order (literally). The tech firm wants to patent a system for “household item inventory management.”

- Philips wants to make compliance emotionally intelligent. The medical technology company filed a patent application for an automated therapy compliance video with an “avatar explaining compliance data.”

- Meta wants to know what catches your eye. The tech company filed a patent application for “region of interest sampling” for an artificial reality system.

What Else is New?

- Salesforce debuted new AI tools for doctors, aiming to help with administrative tasks such as appointment booking and patient summaries.

- Meta is building a massive AI model to power its video ecosystem, including the recommendation engine behind Reels and Facebook videos.

- EU regulators are looking into Apple’s decision to close Epic Games’ developer account, reversing a decision it made a month before to grant the company an account.

Patent Drop is written by Nat Rubio-Licht. You can find them on Twitter @natrubio__.

Patent Drop is a publication of The Daily Upside. For any questions or comments, feel free to contact us at patentdrop@thedailyupside.com.