Happy Thursday and welcome to Patent Drop!

Today, Baidu’s patent to tap into autonomous vehicles for cloud computing highlights that current data centers may not be able to keep up with demand. Plus: Google’s latest AI patent may make your spreadsheets less daunting, and Microsoft is taking hands-free AR to the next level.

Before we dive into today’s patents, let’s talk about travel. When traveling for business, or even leisure, arriving well-rested is crucial. La Compagnie, an all-Business Class airline, offers executive-style travel at prices 30% to 50% lower than the competition. Explore how flying with La Compagnie can elevate your travel experience.

Now, let’s take a peek.

Baidu’s On-the-Go Data Center

Baidu may want to take its cloud services on a road trip.

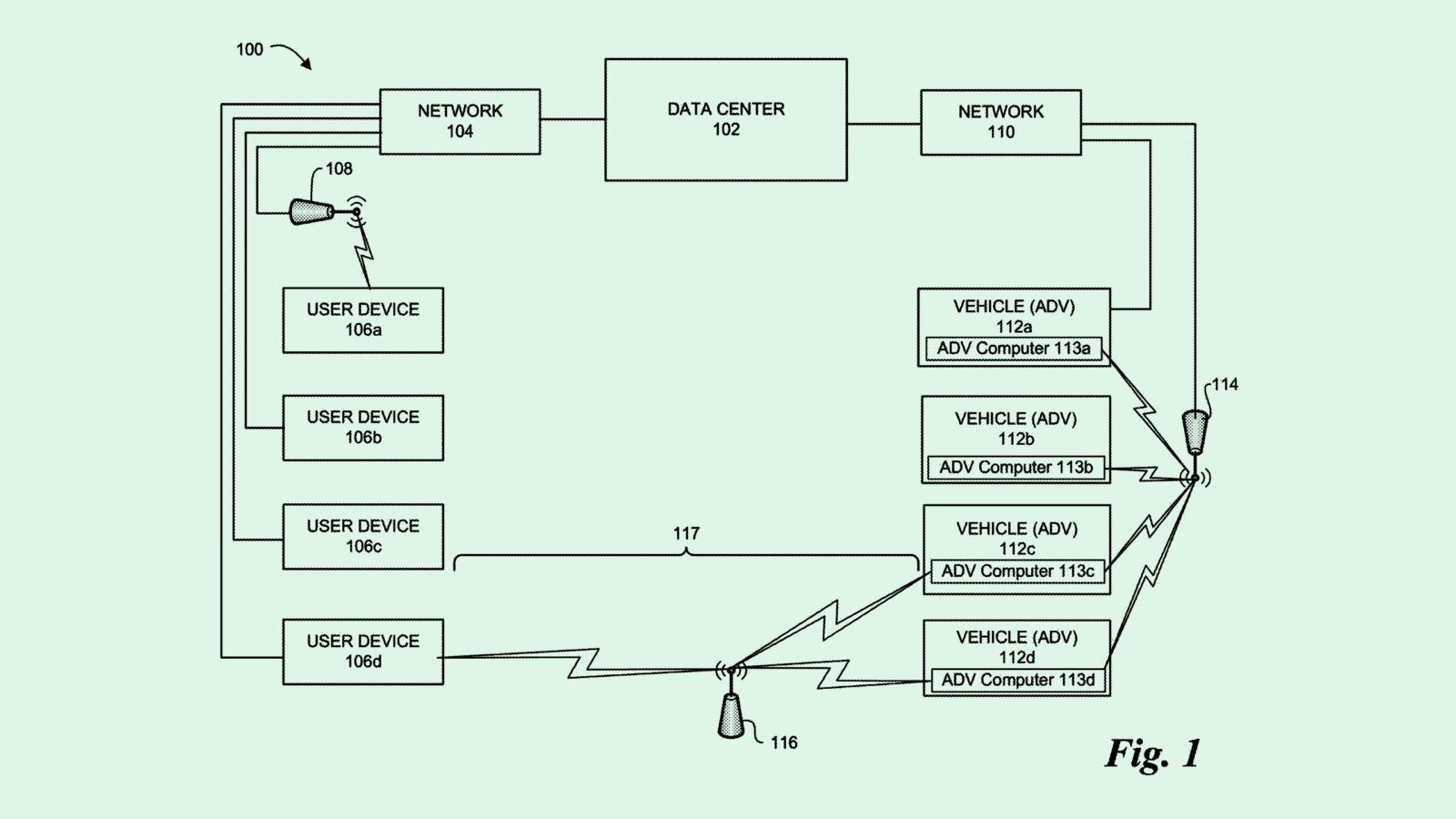

The Chinese tech firm is seeking to patent a way to use autonomous vehicles as “data center infrastructure.” Baidu’s filing essentially details a method of using self-driving cars to create mobile data centers that can move to wherever service is needed.

“This need to service widely distributed users from a centrally located data center puts a burden on the communication networks that connect users to the data center, substantially increasing their bandwidth requirements and their cost,” Baidu said in the filing.

Here’s how it works: Baidu’s system would turn autonomous vehicles into “an extension of (a) data center” by connecting these cars with a network of data centers. If a user who is out of range of a data center requests cloud services, the system activates a self-driving car within a certain distance to complete the user’s requests.

When triggered, this service would use the extensive hardware already available in these cars and remotely reconfigure their software stacks from driving mode to “micro data center” mode. The vehicles would then operate as data centers when they aren’t being driven, sitting parked while they process user requests.
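To make the dispatch logic concrete, here is a minimal Python sketch of how a request might be routed, assuming a flat 2D map measured in kilometers. The filing doesn’t publish code: the class names, the data center service radius, and the 5 km `max_dispatch_km` cutoff are all hypothetical, and “reconfiguring the software stack” is reduced to flipping a mode flag.

```python
import math
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Mode(Enum):
    DRIVING = "driving"
    MICRO_DATA_CENTER = "micro_data_center"


@dataclass
class DataCenter:
    name: str
    x_km: float
    y_km: float
    service_radius_km: float  # hypothetical coverage radius


@dataclass
class Vehicle:
    vehicle_id: str
    x_km: float
    y_km: float
    parked: bool  # only idle (parked) cars are eligible to serve requests
    mode: Mode = Mode.DRIVING


def distance_km(ax: float, ay: float, bx: float, by: float) -> float:
    # Straight-line distance on a flat grid; a real system would likely use network or road distance.
    return math.hypot(ax - bx, ay - by)


def route_request(user_x, user_y, data_centers, vehicles, max_dispatch_km=5.0) -> Optional[str]:
    """Return the name of whatever serves the request, or None if nothing can."""
    # 1. Prefer a conventional data center if the user is inside its coverage.
    for dc in data_centers:
        if distance_km(user_x, user_y, dc.x_km, dc.y_km) <= dc.service_radius_km:
            return dc.name
    # 2. Otherwise, look for parked vehicles within the dispatch distance.
    nearby = [
        v for v in vehicles
        if v.parked and distance_km(user_x, user_y, v.x_km, v.y_km) <= max_dispatch_km
    ]
    if not nearby:
        return None
    chosen = min(nearby, key=lambda v: distance_km(user_x, user_y, v.x_km, v.y_km))
    # 3. "Reconfigure the software stack", modeled here as switching a mode flag.
    chosen.mode = Mode.MICRO_DATA_CENTER
    return chosen.vehicle_id
```

For instance, with a single data center at the origin covering 10 km, a user at (3, 4) is served by that data center, while a user 50 km out is handed to the closest parked car within 5 km, or to nothing at all if no car is close enough.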

Baidu’s filing gave a laundry list of reasons why autonomous vehicles are well-equipped to take on data center processing: For one, these vehicles already come with rich hardware resources that can perform tasks such as multimedia data processing and AI training, and do not require an additional facility to operate, thereby helping “reduce capital cost for data centers.”

They’re also reliable in different weather events, are capable of “over-the-air” updates, and are moveable, which “could make it easier to provide computing services to local users or communities,” Baidu said.

Demand for cloud services is growing rapidly, especially in the age of AI. But as it stands, the infrastructure to match that demand isn’t there. Baidu’s tech may offer a solution using the suite of self-driving tech the company has already heavily invested in, said Trevor Morgan, VP of product at OpenDrives.

“It certainly has the potential. You’ve got computational power there that can be tapped into,” Morgan said. “They’re finding ways to squeeze business out of resources that are there. That’s real ingenuity.”

Using autonomous vehicles as on-the-go data centers could assist with both meeting increased demand and making services available in regions where they’re otherwise lacking, such as rural areas. Microsoft’s system for “on-demand” data centers similarly takes on this issue, automatically spinning up data center services wherever needed using resources from other local computing devices.

And because autonomous vehicles tend to be built with the tough exterior of a car, this system may come in handy where a typical data center struggles: extreme weather events or natural disasters.

Baidu has sunk a ton of resources into building out its fleet of autonomous vehicles, as evidenced by its pile of related patents. However, adoption of self-driving tech is still in its early stages. “They’re a little bit ahead of the adoption curves, with not only autonomous driving vehicles, but with this whole concept of distributing the data center outside of the data center,” Morgan said.

Still, if Baidu’s tech gets put to use, it could present a far more lucrative use case for its autonomous capabilities than shepherding people to and from the airport.

Bringing Luxury to the Skies (Without the Exorbitant Price Tag)

Transatlantic travel presents a dreadful choice: open your wallet for temporary comfort or commit to nine hours crammed into coach.

La Compagnie, the Business Class-only airline, offers fares 30 to 50 percent lower than carriers operating the same routes.

Picture this: after enjoying a madeleine and champagne on the terrace of La Compagnie’s lounge, you effortlessly board a modern aircraft with only 75 other passengers and settle into your lay-flat seat. On board, you spend the flight enjoying a four-course meal curated by Michelin-decorated French chefs, film-festival-quality entertainment, free, unlimited, high-speed Wi-Fi, and, sometimes, onboard happenings (think champagne tastings and book signings).

Truly executive-style travel for the discerning executive who must arrive well-rested.

Book a flight from New York to Paris, Milan, or Nice starting at just $2,400 round trip.

Google Sets the Table

Google may want its language models to get comfortable with spreadsheets.

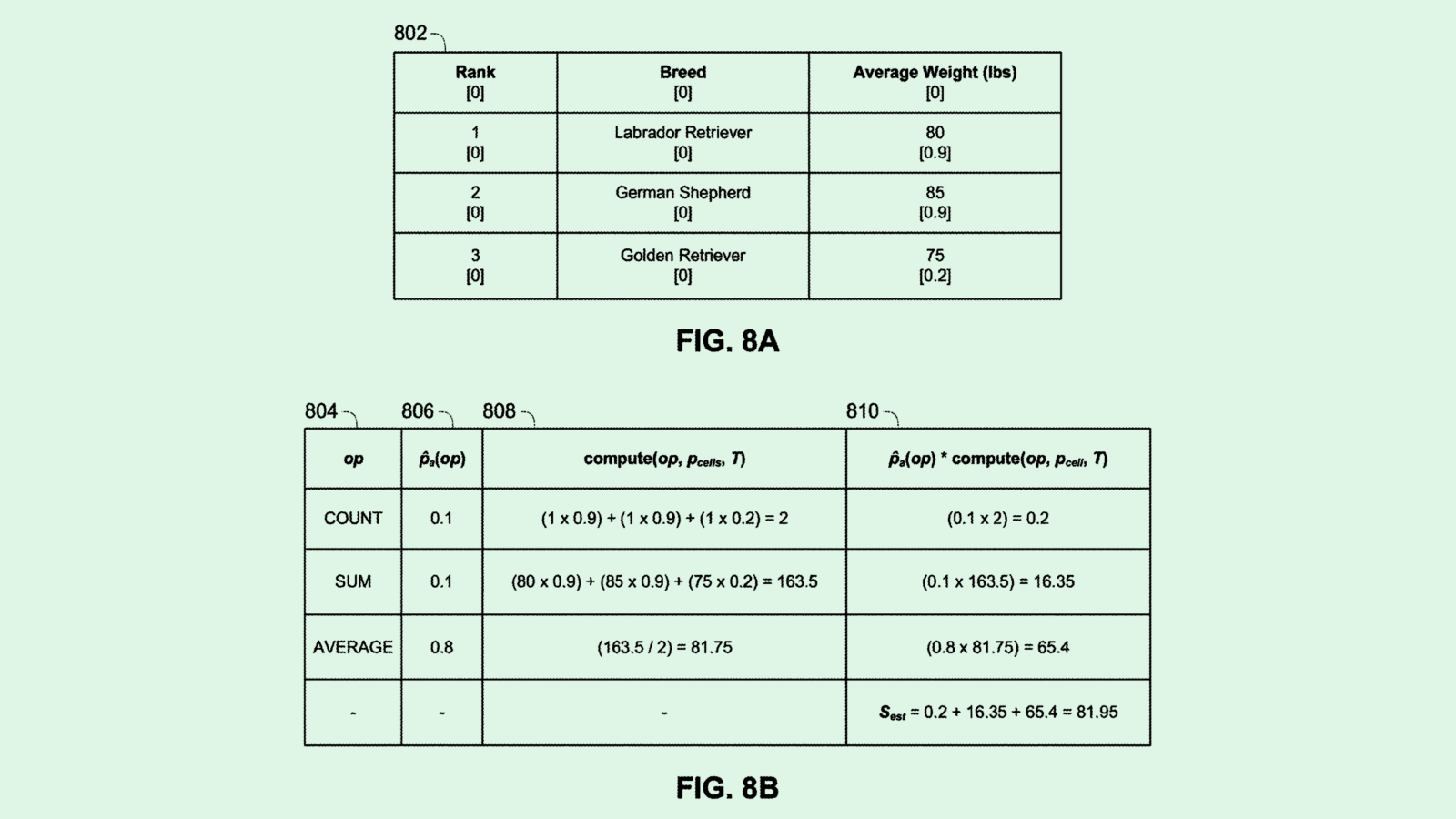

The company is seeking to patent a system for training language models to “reason over tables.” Put simply, Google’s filing pitches a system that can train language models to answer questions about tables without the use of “logical forms,” which are explicit representations of a natural language query that are easier for an AI model to understand.

Typically, training a model to accurately translate questions into these logical forms requires a good deal of human labor to create supervised training data, “making it expensive and difficult to obtain enough training data to sufficiently train a model,” Google said in the filing.

“Although an NLP model can, in theory, be trained to generate logical forms using weak supervision … such methods can result in the model generating forms which are spurious,” Google noted.

Google’s system first pre-trains a language model on synthetically generated tasks drawn from a large “unlabeled knowledge corpus,” such as Wikipedia. It uses two methods of pre-training on this corpus: “masked-language modeling tasks,” in which the language model essentially has to fill in blanked-out words in a sentence, and “counterfactual statements,” or “what-if” statements that help it understand relationships between data.
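For readers curious what those two pre-training tasks look like in practice, here is a minimal Python sketch of how such synthetic examples could be generated. It is not Google’s pipeline: the 15% masking rate, the `[MASK]` token, and swapping one entity to create a counterfactual are common conventions assumed for illustration, and the function names are hypothetical.

```python
import random

MASK = "[MASK]"


def make_mlm_example(tokens, mask_rate=0.15, seed=None):
    """Hide a fraction of tokens; the model is trained to predict the hidden words."""
    rng = random.Random(seed)
    n_to_mask = max(1, round(len(tokens) * mask_rate))
    positions = sorted(rng.sample(range(len(tokens)), n_to_mask))
    masked = list(tokens)
    targets = {}
    for i in positions:
        targets[i] = masked[i]  # remember the original word at this position
        masked[i] = MASK
    return masked, targets


def make_counterfactual(statement, entity, replacement):
    """Swap one entity to create a 'what-if' statement the source text does not support."""
    if entity not in statement:
        raise ValueError(f"{entity!r} does not appear in the statement")
    # Label False: after the swap, the statement is no longer backed by the corpus.
    return statement.replace(entity, replacement, 1), False
```

A model trained on both kinds of examples has to recover missing words from context and learn to tell a statement the corpus supports from a plausible-sounding alteration of it.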

Following this, the language model is fine-tuned using examples derived from only a question, an answer, and a table. This fine-tuning lets the model apply what it learned in pre-training to the specific data in front of it, so it can answer questions based solely on the contents of those tables.
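Here is a rough Python sketch of what a training example built from only a question, an answer, and a table might look like. The flattening format, the `[CLS]`/`[SEP]`/`[ROW]` markers, and the cell-matching heuristic are assumptions for illustration, not details taken from the filing; the point is that no logical form appears anywhere in the example.

```python
def linearize(question, header, rows):
    """Flatten a question and a table into one text sequence for a language model."""
    parts = ["[CLS]", question, "[SEP]", " | ".join(header)]
    for row in rows:
        parts.append("[ROW] " + " | ".join(str(cell) for cell in row))
    return " ".join(parts)


def find_answer_cells(answer, rows):
    """Weak supervision: locate cells whose text matches the answer, with no logical form."""
    target = str(answer).strip().lower()
    return [
        (r, c)
        for r, row in enumerate(rows)
        for c, cell in enumerate(row)
        if str(cell).strip().lower() == target
    ]


def make_finetuning_example(question, answer, header, rows):
    # The training example is built from only a question, an answer, and a table.
    return {
        "input_text": linearize(question, header, rows),
        "answer": answer,
        "answer_cells": find_answer_cells(answer, rows),
    }
```

An answer that doesn’t appear verbatim in any cell (say, a total computed across rows) yields an empty `answer_cells` list, which is the kind of case where a model has to learn to aggregate rather than simply point at a cell.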

Though this may sound quite technical, the outcome is a more easily scalable and adaptable language model that has a simpler development process and can handle all kinds of data, structured or unstructured.

Google’s system relies on taking logical forms out of AI training, which is already a fairly common concept in large language model development, said Vinod Iyengar, VP of product and go-to-market at ThirdAI. The value of this tech, however, lies in its specific application to breaking down large spreadsheets or documents filled with tables and answering questions about them with little pre-processing needed.

With structured and unstructured data, “there’s work being done to handle each of those separately,” Iyengar said. “It becomes tricky when you have a mix of data. But Google’s claiming they can handle all of that easily.”

This kind of tech could be particularly valuable for enterprise purposes, said Iyengar. For example, if someone needs to quickly analyze a large financial document that’s filled with both structured and unstructured data, such as tables, figures, and paragraphs of text, a system like this could be trained to accurately answer queries related to it.

“The more they can automate this workflow instead of manually pre-processing all the data — if that can all be eliminated and LLMs can do it themselves — then that will be a huge win,” said Iyengar.

Google has spent the past year weaving AI throughout its Workspace suite and enhancing its search engine with AI. Its patent activity is filled with enterprise-related AI innovations, including generative coding and design tools.

The company faces stiff competition from the tag team of OpenAI and Microsoft, the latter of which offers its own AI-based work and productivity suite with Copilot. But a system that can train models to parse through tons of different types of data only further bolsters Google’s enterprise offerings. “They’re probably going to offer this more broadly as part of their AI stack,” Iyengar noted.

Plus, if this is integrated into Google Gemini, this could also be put to use in its potential partnership with Apple. For instance, a system like this could be paired with iCloud, allowing users to easily break down large documents within Apple’s systems.

Microsoft Slip of the Tongue

Microsoft is taking tongue-in-cheek a little too literally.

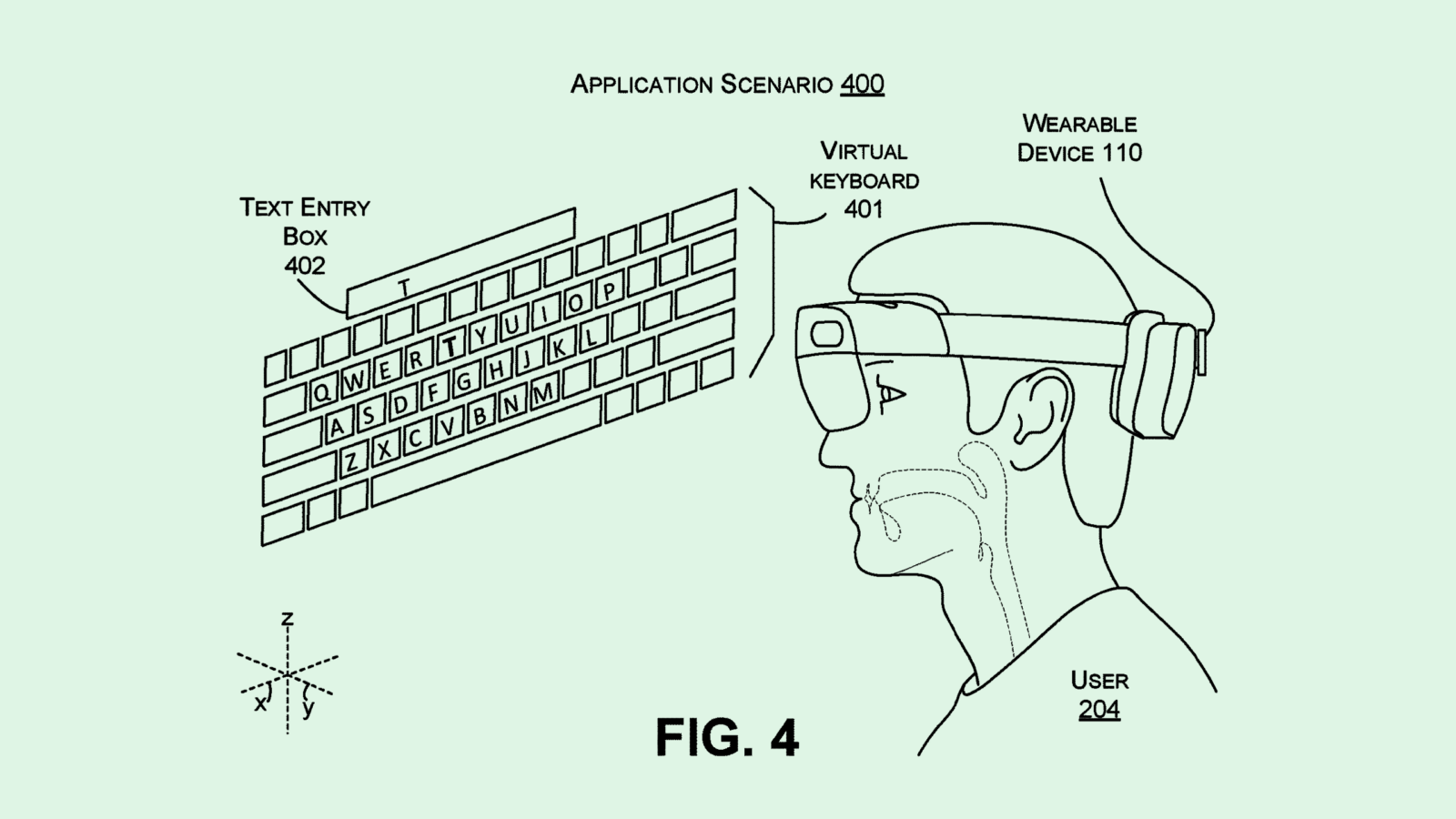

The company filed a patent application for “inertial sensing of tongue gestures.” Microsoft’s filing lays out a system for detecting tongue movements as a means of controlling an artificial reality device.

“Touch and hand gestures have certain limitations for applications where the user’s hands are occupied or for users with certain physical limitations,” Microsoft said in the filing. Speech recognition and gaze tracking “also have certain limitations,” the filing noted, such as privacy drawbacks and latency issues.

Microsoft’s tech tracks motion signals using an “inertial sensor” to detect tongue gestures. Microsoft notes that these sensors would be “non-obtrusive” and externally worn, such as within a head-worn display, earbuds, headphones or cochlear implants. (This overcomes the invasive nature of other tongue-tracking inventions, the company said, such as mouth-worn retainers or “electromyography sensors.”)

Once this system detects these motion signals, a machine learning model would take that data and translate it into gestures that are reflected in a display. In practice, this may allow a user to control elements of an artificial reality scenario without speaking or using their hands.
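As a rough illustration of that pipeline, the Python sketch below turns a window of accelerometer readings into a few summary features and labels it with the closest known gesture. It swaps in a simple nearest-centroid classifier for whatever machine learning model Microsoft actually uses, and the feature choices, class name, and gesture labels are hypothetical.

```python
import math
from statistics import mean, pstdev


def window_features(window):
    """Summarize a window of (ax, ay, az) accelerometer samples into three numbers."""
    magnitudes = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in window]
    return (mean(magnitudes), pstdev(magnitudes), max(magnitudes) - min(magnitudes))


class NearestCentroidGestures:
    """Tiny stand-in for the filing's machine learning model."""

    def fit(self, windows, labels):
        grouped = {}
        for window, label in zip(windows, labels):
            grouped.setdefault(label, []).append(window_features(window))
        # Average each feature per gesture label to get one centroid per gesture.
        self.centroids = {
            label: tuple(mean(col) for col in zip(*feats))
            for label, feats in grouped.items()
        }
        return self

    def predict(self, window):
        feats = window_features(window)
        # Pick the gesture whose centroid is closest in feature space.
        return min(self.centroids, key=lambda label: math.dist(feats, self.centroids[label]))
```

A nearest-centroid model keeps the example short; a production system would presumably use richer features, more sensor axes, and a trained neural network.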

For example, when typing, the system may use gaze tracking to hover over letters on a virtual keyboard and tongue gestures to select them. In an audio-only application, these gestures may allow a user to switch songs, pause music, or interact with a virtual assistant.
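The typing and audio examples boil down to a small event loop. In this hypothetical Python sketch, gaze events set which key is highlighted, a tongue “tap” commits it, and a lookup table maps gestures to music controls; the event names and gesture-to-command mapping are invented for illustration, not taken from the filing.

```python
def type_with_gaze_and_tongue(events):
    """Gaze picks the key under the user's eyes; a tongue 'tap' types it."""
    typed = []
    hovered = None
    for kind, value in events:
        if kind == "gaze":
            hovered = value  # key currently under the user's gaze
        elif kind == "tongue" and value == "tap" and hovered is not None:
            typed.append(hovered)
    return "".join(typed)


# Hypothetical mapping for an audio-only device such as earbuds.
AUDIO_COMMANDS = {
    "swipe_left": "previous_track",
    "swipe_right": "next_track",
    "tap": "play_pause",
    "double_tap": "wake_assistant",
}


def audio_action(gesture):
    # Unrecognized gestures are ignored rather than triggering anything.
    return AUDIO_COMMANDS.get(gesture, "ignore")
```

Keeping selection (gaze) separate from confirmation (tongue) means the user can look around freely without typing anything by accident.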

This offers the user “quiet, hands-free input” to their devices that can be performed with “little or no perceptible external movement by the user and can be performed by users with serious physical limitations.”

As outlandish as it seems that tech firms want to get into your mouth, this isn’t the first time Microsoft has taken an interest in getting close to its users: The company previously sought to patent “high-sensitivity” facial tracking for artificial reality devices.

Microsoft also isn’t the only company looking for hands-free ways to control artificial reality. Meta has looked at ways to track your body’s neural and muscular signals, Apple has filed patents for eye tracking using neural networks, and Snap has filed a patent for methods to track gaze direction.

Microsoft offers a line of mixed reality headsets called the HoloLens, which start at around $3,500 and are sold mainly as an enterprise offering, but metaverse tech is far from its main focus. Plus, the company faces competition in the space from Meta’s line-up of more affordable Quest headsets and Apple’s recently released Vision Pro. As mixed reality tech continues to advance, Microsoft could make money by licensing this tech to other operators.

The goal of these tracking methods is to create a more intuitive user experience, aiming to scale adoption and increase the amount of time these devices are strapped to people’s faces. Plus, one upside of hands-free experiences is that they expand the range of people who can use these devices, creating access for people with physical limitations or disabilities that constrain their range of movement.

But a common thread among these technologies is that they walk a delicate line between privacy and convenience. Equipping headsets with the capability to constantly read body signals may simply make some users uncomfortable, though the convenience may win others over. And if the data processing for these experiences is done on a remote server rather than locally, that creates another avenue for privacy problems.

Extra Drops

- Google wants to get into voice acting. The tech firm filed a patent application for “generating dubbed audio from a video-based source.”

- Lyft wants to know if you’re scootering the right way. The company filed a patent application for “sidewalk detection for personal mobility vehicles.”

- Snap wants to hand you the paint brush. The company filed a patent application for “sculpting augmented reality content using gestures.”

What Else is New?

- The Justice Department is suing Apple over antitrust violations, alleging that the company has a monopoly over the smartphone market.

- Neuralink showed off its first brain chip recipient in a livestream this week, in which the 29-year-old patient played online chess with his mind.

- La Compagnie is redefining transatlantic Business Class travel by offering a unique experience (including lie-flat seats, free unlimited Wi-Fi, and Michelin-starred menus) at prices 30% to 50% lower than the competition. Book your next flight with La Compagnie.*

* Partner

Patent Drop is written by Nat Rubio-Licht. You can find them on Twitter @natrubio__.

Patent Drop is a publication of The Daily Upside. For any questions or comments, feel free to contact us at patentdrop@thedailyupside.com.