Happy Thursday, and welcome to Patent Drop!

Today, a Microsoft patent for a machine learning-based coding tool underscores the potential pitfalls of relying too much on AI for productivity. Plus: Tesla wants to make sure its autonomous vehicles don’t fall for light tricks, and Google wants to make chatbot development easier.

Ahead of today’s Patent Drop, we want to direct you to Persona’s free guide about how to fight generative AI fraud. While GenAI-enhanced fraud may be alarming, it’s not impossible to deter. Download Persona’s guide to learn how to build a robust defense against both GenAI fraud and future threats.

And a quick programming note: Patent Drop is taking a summer vacation! Publishing will be paused on Monday, June 24, and Thursday, June 27. We will be back on Monday, July 1, and will pause again on Thursday, July 4, in observance of Independence Day.

Now, let’s dive in.

Microsoft’s Coding Companion

Microsoft wants to reward its AI models for avoiding hallucinations.

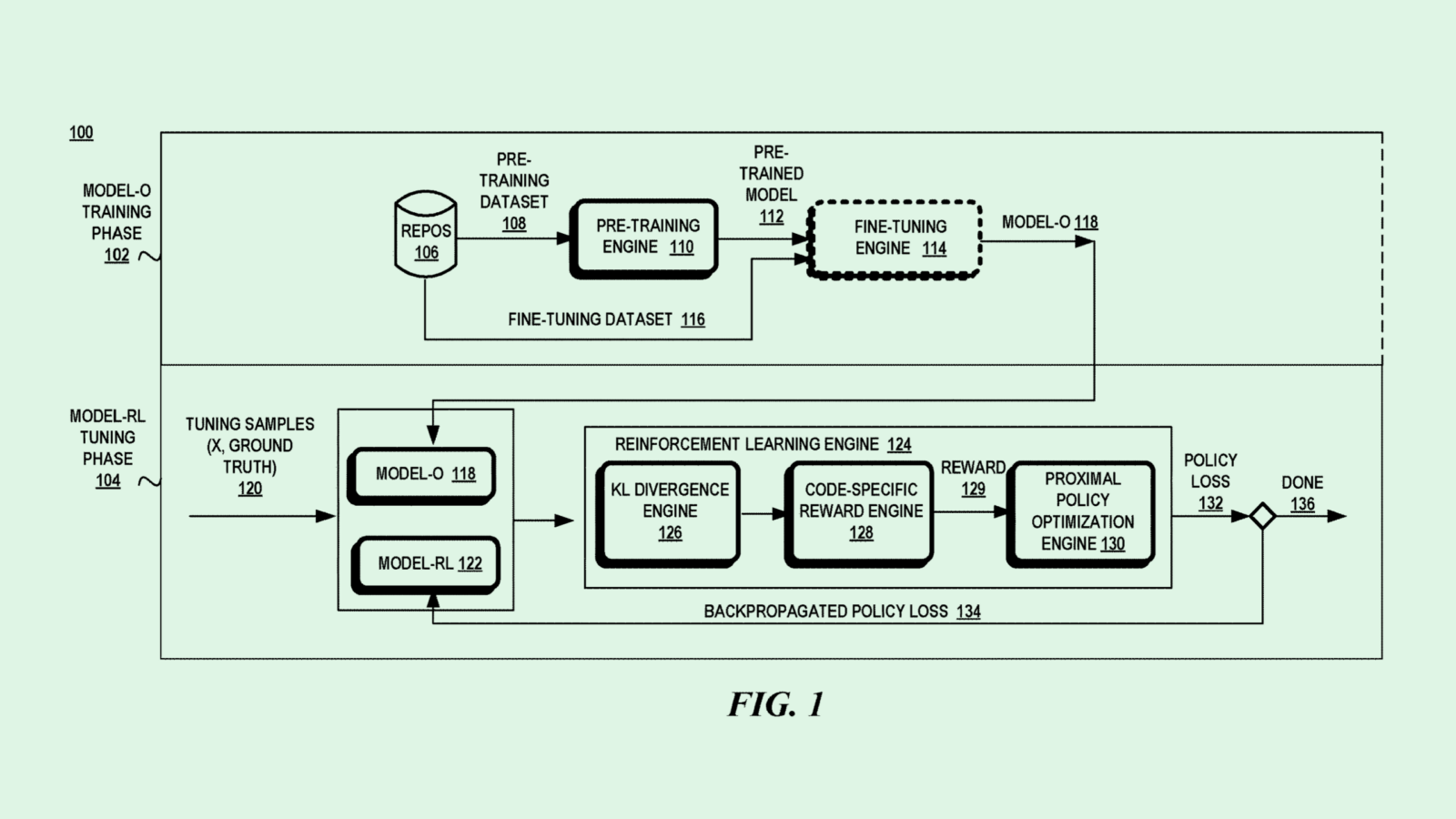

The tech firm is seeking to patent a deep learning-based code generation platform trained through “reinforcement learning using code-quality rewards.” Microsoft’s system fine-tunes a generative AI model to produce more relevant and useful source code for developers.

Conventional deep learning-based code generation systems, which use neural networks to learn from large amounts of data, often suffer from bias and quality issues, Microsoft noted, adding that "this may result in the predicted output being less useful for its intended task."

In Microsoft’s method, an initial deep learning model is trained to generate and predict source code, learning from large datasets packed with source code examples. The model is then fine-tuned to create code for a specific task using reinforcement learning, or a kind of training that rewards or penalizes the model based on performance.

Microsoft’s system would reward or penalize a model based on its scoring in several “code-quality factors.” These include proper syntax; minimal errors in compilation, functionality, and execution; readability; and comprehensiveness.

The system computes a metric score based on how closely the predicted source code matches "ground truth code," or code that's known to be accurate. That score is then used to further tune the model's parameters so it learns to "take actions that account for the quality aspects of the predicted source code," Microsoft said.
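To make the reward idea concrete, here is a minimal Python sketch, not Microsoft's method. It assumes just two of the listed factors (syntax validity checked with `ast.parse`, and text similarity to the ground truth measured with `difflib`), hypothetical 50/50 weights, and a toy "policy" that chooses among three fixed candidate snippets rather than a neural network generating code token by token. The update is plain REINFORCE, a standard policy-gradient technique: outputs that score above the policy's expected reward become more likely, and outputs that score below it become less likely.

```python
import ast
import difflib
import math
import random


def syntax_score(code: str) -> float:
    """1.0 if the snippet parses as Python, else 0.0 (stand-in for a 'proper syntax' factor)."""
    try:
        ast.parse(code)
    except SyntaxError:
        return 0.0
    return 1.0


def similarity_score(code: str, ground_truth: str) -> float:
    """Character-level similarity to known-good code, in [0, 1]."""
    return difflib.SequenceMatcher(None, code, ground_truth).ratio()


def code_quality_reward(code: str, ground_truth: str,
                        w_syntax: float = 0.5, w_sim: float = 0.5) -> float:
    """Hypothetical weighted blend of two code-quality factors."""
    return w_syntax * syntax_score(code) + w_sim * similarity_score(code, ground_truth)


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(logits, candidates, ground_truth, lr=0.5, rng=random):
    """One REINFORCE update on a toy policy that picks among fixed candidates."""
    probs = softmax(logits)
    # Sample one output from the current policy.
    a = rng.choices(range(len(candidates)), weights=probs)[0]
    rewards = [code_quality_reward(c, ground_truth) for c in candidates]
    # Expected reward under the current policy serves as a variance-reducing baseline.
    baseline = sum(p * r for p, r in zip(probs, rewards))
    advantage = rewards[a] - baseline
    # Gradient of log-softmax with respect to logit i is (1[i == a] - p_i).
    return [logit + lr * advantage * ((1.0 if i == a else 0.0) - p)
            for i, (logit, p) in enumerate(zip(logits, probs))]


ground_truth = "def add(a, b):\n    return a + b\n"
candidates = [
    "def add(a, b):\n    return a + b\n",  # matches the ground truth
    "def add(a, b)\n    return a + b\n",   # missing colon: fails to parse
    "def add(a, b):\n    return a - b\n",  # parses, but the logic is wrong
]

rng = random.Random(0)
logits = [0.0, 0.0, 0.0]
for _ in range(200):
    logits = reinforce_step(logits, candidates, ground_truth, rng=rng)
probs = softmax(logits)
print([round(p, 3) for p in probs])
```

Training steadily drains probability from the snippet that fails to parse. The wrong-logic snippet, however, scores almost as high as the correct one, because changing a single character barely dents text similarity; in a setup like this, that gap is what execution- and functionality-based factors would need to close.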

Productivity has been a mainstay of Microsoft’s AI plans. The company has gone all-in on Copilot, its AI companion, since launching it last March, and debuted PCs embedded with its AI technology in May. Microsoft-owned GitHub also offers its own AI coding tool, called GitHub Copilot.

While some fear that AI may make their jobs obsolete, these tools can be incredibly helpful if implemented properly, said Thomas Randall, advisory director at Info-Tech Research Group. In ideation, monitoring, and business analytics, many are already finding use cases for this tech, he said. And in software engineering environments, a tool like Microsoft’s could massively speed up project development.

Businesses are still figuring out the limits of these systems, said Randall. But lack of proper education on AI may be blurring the lines. “A big downside that we potentially will see, especially with users who have not been trained by the organization on how to use these tools effectively, is over-reliance,” he noted.

This is something Microsoft seems to recognize too: In an interview with TechRadar earlier this week, Colette Stallbaumer, general manager for Microsoft 365 and Future of Work, said that the company's goal is to democratize AI, not for it to take over. "We want these tools to benefit society broadly," Stallbaumer said. "There's no skill today that AI can do better than us … you can either choose fear, or choose to lean in and learn."

Learning where to draw the line is especially important when considering where AI may fail, said Randall. These models still often struggle with things like hallucination, accuracy, and bias.

While Microsoft’s patent attempts to cover these issues in code-generation contexts, one issue is seemingly missing: explainability, said Randall. Generative AI’s outputs are often created within a black box. But as AI becomes more ingrained in modern-day workplaces, Randall said, it’s important to understand how the tech comes up with its answers. With that piece missing, relying on these systems may result in inaccurate information, security issues, or accidental plagiarism.

This, too, is where training is vital, said Randall. “There is that core question of how you can train your users to interact with this tool in the right way,” he said. “That you are skeptical, that you maintain a critical thought over it, and that you’re not just accepting what it tells you.”

The Strategic Guide to Fighting GenAI Fraud

GenAI has revolutionized the way everyone works — including fraudsters. In fact, Deloitte is predicting it could enable fraud losses to reach $40B by 2027.

Fortunately, you likely don’t need to completely overhaul your tech stack to protect your business. Instead, you may just need to fortify your existing defenses and implement a few proven tactics.

Download Persona’s strategic guide to GenAI fraud to learn:

- How to implement a holistic fraud strategy that’ll enable you to better serve legitimate users and stop bad actors in real time

- Which risk signals are difficult — if not impossible — for GenAI to spoof

- How to adopt a blended approach to cover different types of risk

Tesla Keeps Its Eyes Peeled

Tesla wants to keep its ego in check.

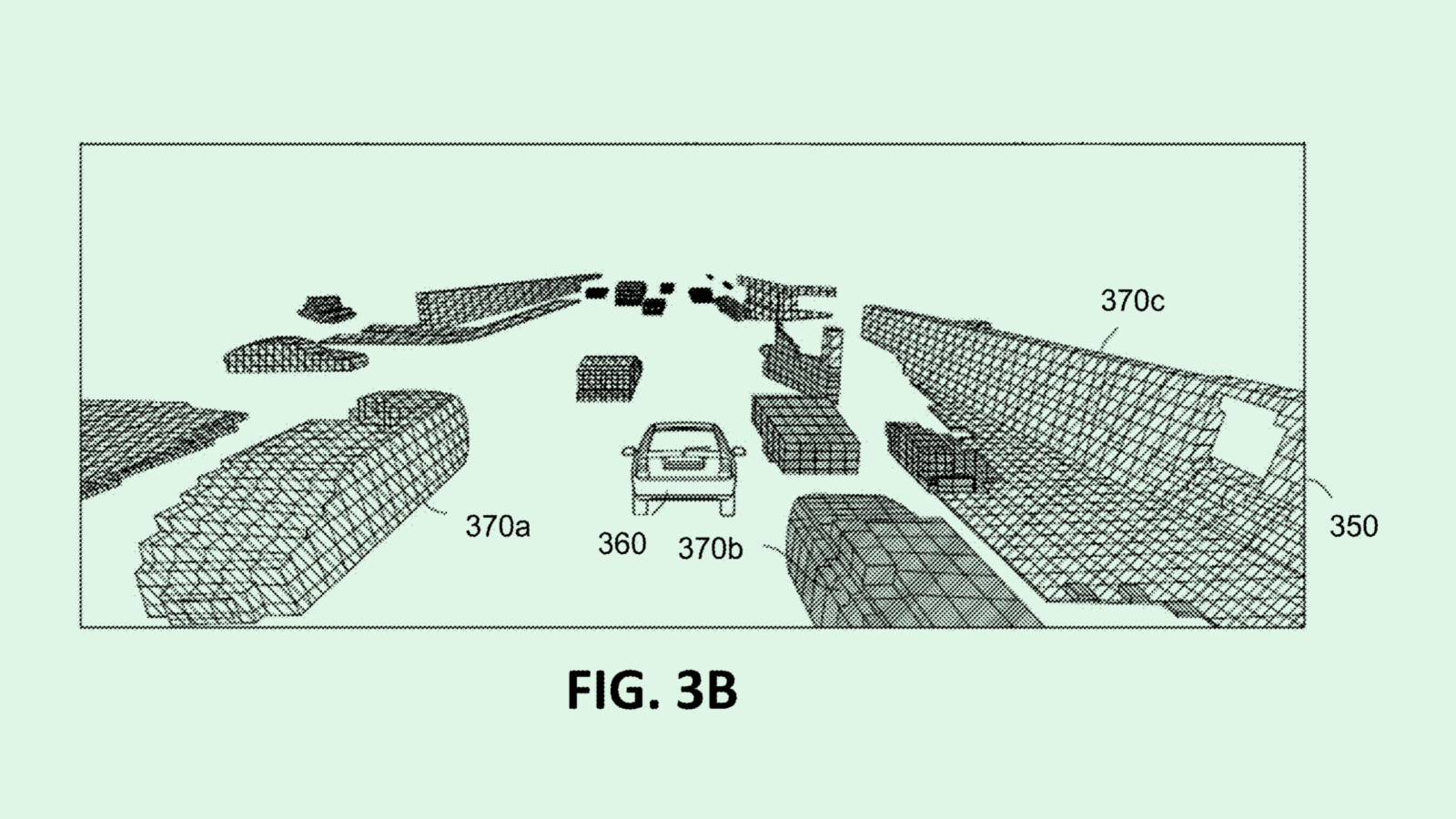

The automaker filed a patent application for vision-based occupancy determination within the surroundings of an “ego,” which the company defines as the “autonomous navigation technology used for autonomous vehicles and robots” within the filing. Tesla’s filing details a system aimed at giving its self-driving cars a more robust view of their surroundings.

“Egos often need to navigate through complex and dynamic environments and terrains,” Tesla said in the filing. “Understanding the egos’ surroundings is necessary for informed and competent decision-making to avoid collisions.”

Tesla’s tech relies on trained AI models to analyze camera feeds on autonomous machines in order to come up with “occupancy data” on a car’s surroundings — specifically determining if the objects around the vehicle have mass. This essentially helps it rule out optical illusions that may trick the autonomous driving system, such as reflections, light, shadows, and virtual artifacts.

The system then uses this information to create a 3D map of the surrounding area to aid in navigation and decision-making, allowing the autonomous system to have a better understanding of its environment and avoid mistakes that could be caused by light tricks or particularly dark shadows.
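The filtering idea can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not Tesla's system: it assumes a hypothetical upstream model has already turned camera frames into 3D points, each carrying a `mass_prob` score for whether it belongs to a physical object, and it builds a simple voxel grid with a hypothetical 0.5-meter cell size and 0.5 threshold. Points that look massless, such as shadows and reflections, are dropped before the map is built, so the planner doesn't react to them.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """A point reported by a hypothetical camera-based model, in meters."""
    x: float          # forward from the vehicle
    y: float          # to the left
    z: float          # up
    mass_prob: float  # hypothetical output: probability the point belongs to a physical object
    label: str = ""


def voxel_of(x: float, y: float, z: float, voxel_size: float) -> tuple:
    """Map a 3D point to the integer index of the grid cell containing it."""
    return (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))


def build_occupancy(detections, voxel_size=0.5, mass_threshold=0.5) -> set:
    """Return the set of occupied voxels, keeping only points judged to have mass."""
    occupied = set()
    for d in detections:
        if d.mass_prob < mass_threshold:
            continue  # likely a shadow, reflection, or glare: skip it
        occupied.add(voxel_of(d.x, d.y, d.z, voxel_size))
    return occupied


def is_path_clear(occupied, path_points, voxel_size=0.5) -> bool:
    """True if none of the path's points fall inside an occupied voxel."""
    return all(voxel_of(x, y, z, voxel_size) not in occupied for x, y, z in path_points)


detections = [
    Detection(10.2, 0.1, 0.4, 0.97, "car ahead"),
    Detection(6.0, 0.0, 0.0, 0.08, "shadow on the road"),
    Detection(8.0, -1.2, 0.3, 0.12, "reflection in a window"),
]
occupied = build_occupancy(detections)
short_path = [(0.5 * i, 0.0, 0.0) for i in range(19)]  # 0.0 m to 9.0 m straight ahead
long_path = [(0.5 * i, 0.0, 0.0) for i in range(25)]   # 0.0 m to 12.0 m straight ahead
print(is_path_clear(occupied, short_path), is_path_clear(occupied, long_path))  # True False
```

Here the shadow at 6 meters is ignored, so the 9-meter path reads as clear, while the car at roughly 10 meters blocks the longer one.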

This isn’t the first time we’ve seen a patent from Tesla that aims to lay claim to stronger autonomous capabilities and better situational understanding. The company has previously sought patents for adjusting vehicle actions with better environmental awareness and object detection to eliminate phantom braking (when self-driving vehicles make sudden, inappropriate stops).

CEO Elon Musk has long claimed that the company's Full Self-Driving technology can be safer than human drivers. However, the company has hit a number of roadblocks over the years in making that vision a reality. Tesla has issued several recalls related to its driver-assistance features, including one in December that affected 2 million vehicles.

The automaker has also long battled the National Highway Traffic Safety Administration over its driver-assistance technology. In May, the agency pressed Tesla for answers about the recall, warning that the company could face more than $135 million in fines if it did not respond.

Nevertheless, Tesla continues to push forward with its ambitious autonomous plans, which include robots and robotaxi services. Musk unveiled the next update to its Full Self-Driving hardware, called “AI 5,” at its shareholder meeting last week, claiming the tech will offer a tenfold improvement compared to its current offering.

And despite its struggles in the US, the company has made some headway internationally. Tesla announced a partnership with Baidu earlier this month; the Chinese internet giant will provide Tesla's vehicles with lane-level navigation services. Shanghai will also reportedly allow Tesla to test its most advanced autonomous technology with 10 of its vehicles in the city.

Google’s DIY Chatbots

Google wants to make training a chatbot as easy as small talk.

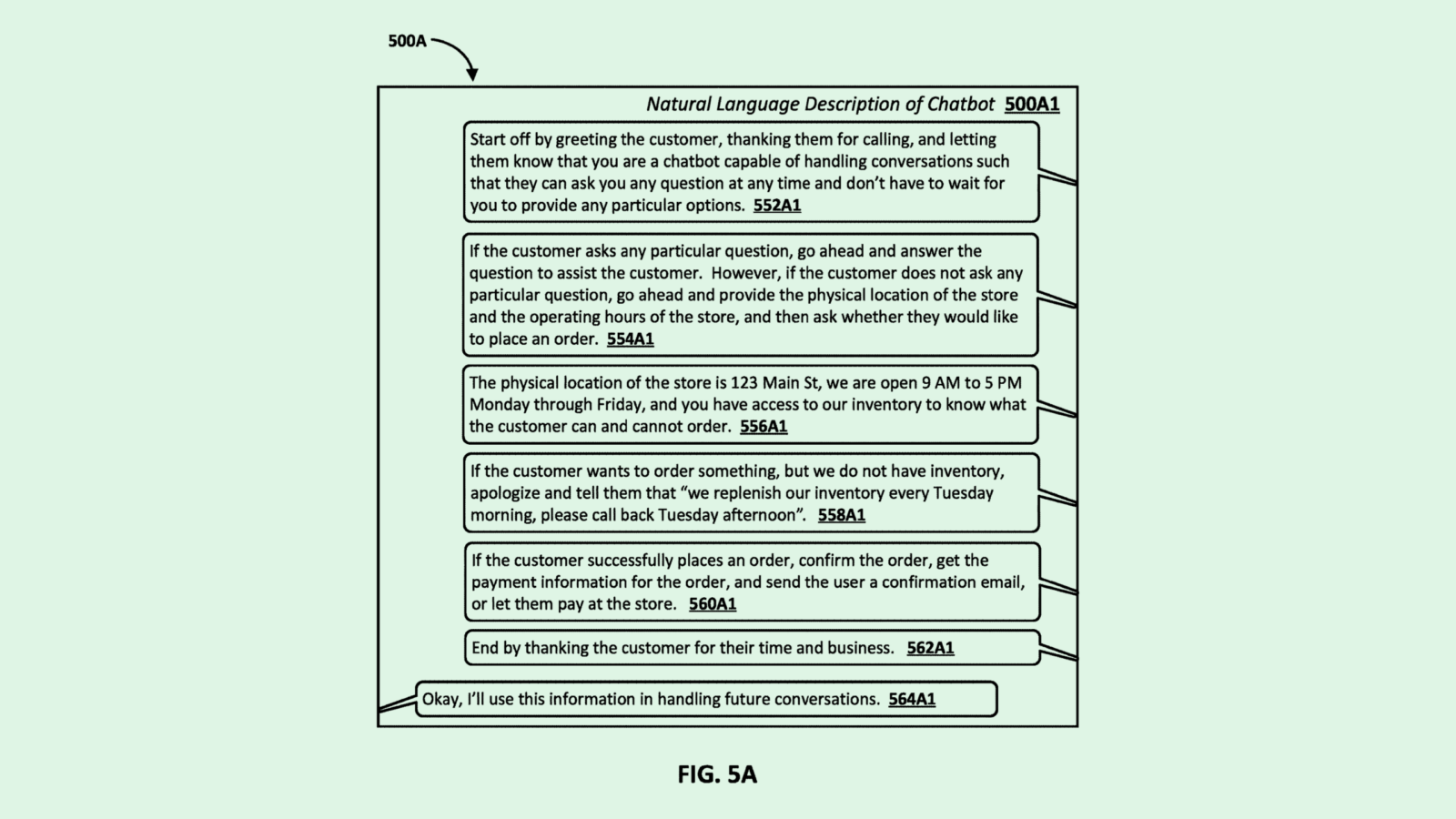

The company is seeking to patent a system for chatbot development using “structured description-based” techniques. Google’s tech basically allows a user to create a fine-tuned chatbot for specific tasks using just natural language inputs.

Google's system starts off with an AI model "utilized in conducting generalized conversations," and relies on user inputs to fine-tune it. It may map certain inputs to different dialog states, such as questions, responses, or transitions, and a user can instruct the chatbot how to respond to each accordingly.

For example, a user building a chatbot to answer questions about their business on its website could instruct it, using only simple explanations, to provide store locations and hours, answer questions about inventory, or confirm order statuses.

Google noted that, in some examples, these chatbots can be fine-tuned or generated "on the fly" in response to "unstructured free-form natural language input." In other words, if the developer notices a mistake or unwanted output, they can easily amend the chatbot with a natural language instruction.

“As a result, the chatbot can be generated and deployed in a quick and efficient manner and for conducting the corresponding conversations on behalf of the user or an entity associated with the user,” Google said in the filing.
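A rough Python sketch of the "structured description" idea follows. It is hypothetical throughout: the state names, the keyword triggers (a crude stand-in for the language model's understanding of intent), and the instructions are invented for illustration, and the bot returns the selected instruction instead of generating a reply. It shows a developer describing each dialog state in plain language, then amending one state on the fly without rebuilding anything.

```python
from dataclasses import dataclass, field


@dataclass
class DialogState:
    name: str
    triggers: list[str]  # hypothetical keywords standing in for a model's intent detection
    instruction: str     # plain-language instruction the developer wrote for this state


@dataclass
class ChatbotSpec:
    fallback: str = "Apologize and offer to connect the customer with a human."
    states: dict[str, DialogState] = field(default_factory=dict)

    def add_state(self, name: str, triggers: list[str], instruction: str) -> None:
        self.states[name] = DialogState(name, [t.lower() for t in triggers], instruction)

    def amend(self, name: str, instruction: str) -> None:
        """'On the fly' fix: replace one state's instruction without rebuilding the bot."""
        self.states[name].instruction = instruction

    def route(self, message: str) -> str:
        """Pick the first state whose trigger appears in the message, else 'fallback'."""
        text = message.lower()
        for state in self.states.values():
            if any(trigger in text for trigger in state.triggers):
                return state.name
        return "fallback"

    def respond(self, message: str) -> str:
        name = self.route(message)
        instruction = self.fallback if name == "fallback" else self.states[name].instruction
        # A real system would condition a language model on this instruction;
        # here we simply return it to show which state was selected.
        return f"[{name}] {instruction}"


bot = ChatbotSpec()
bot.add_state("store_hours", ["hours", "open"], "Say we are open 9am to 6pm, Monday through Saturday.")
bot.add_state("order_status", ["order", "tracking"], "Ask for the order number, then report its status.")
bot.add_state("inventory", ["in stock", "inventory"], "Check the catalog and say whether the item is available.")

print(bot.respond("What are your hours?"))
bot.amend("store_hours", "Say we are open 10am to 7pm every day.")
print(bot.respond("When do you open on Sunday?"))
print(bot.respond("Can I return a gift?"))
```

Keeping each state's behavior as editable text, rather than code, is what makes the amendment step a one-line change in this sketch.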

Google noted that this system can also be used to train voice-based conversational AI used over “phone calls or locally at the client device.”

Big Tech firms are constantly looking at ways to make their AI models bigger and better. And as it stands, large language models are the subject of this effort, with Google, Microsoft, Meta, and OpenAI all feeding their own ever-growing chatbots as they strive for artificial general intelligence.

While these language models have incredible capabilities, they also face significant challenges — one of which is the sheer cost. DeepMind CEO Demis Hassabis warned earlier this year that the Google subsidiary may spend more than $100 billion developing AI. Meta and Microsoft, meanwhile, warned investors of rising expenses related to this development in their recent earnings reports.

Additionally, these models present data security issues and suck up tons of power. And despite the tech industry’s fascination with large language models, the average enterprise doesn’t need a hundred billion parameters to achieve a functional chatbot.

Patents like these, however, may hint that tech firms are looking for ways the average business could get use out of AI without hiring a software development team, by quickly training a conversational AI agent on any topic or domain with simple natural language instructions. Google's tech could offer a no-code approach to AI development that's far less resource-intensive and expensive than building on top of a massive large language model.

Plus, Google isn’t the only company that seems to have this idea: JPMorgan Chase and Oracle have also sought similar no- and low-code AI patents, signaling a broader interest in getting AI into the hands of more than just developers.

Extra Drops

- Snap wants to be on aux. The social media firm is seeking to patent a system for “music recommendations via camera system.”

- Zoom wants to make sure you’re paying attention. The company filed a patent application for “scrolling motion detection” in video calls.

- Google wants to make sure you’ve got the essentials before walking out the door. The company is seeking to patent “automated testing of digital keys for vehicles.”

What Else is New?

- Ilya Sutskever, cofounder of OpenAI, announced a new startup called Safe Superintelligence. He left OpenAI in May.

- OpenAI competitor Anthropic announced Claude 3.5 Sonnet, its most powerful AI model to date.

- Learn AI in 5 Minutes a Day. AI Tool Report is one of the fastest-growing and most respected newsletters in the world, with over 550,000 readers from companies like OpenAI, Nvidia, Meta, Microsoft, and more. Their research team spends hundreds of hours a week summarizing the latest news, and finding you the best opportunities to save time and earn more using AI. Sign up with 1-Click.*

* Partner

Patent Drop is written by Nat Rubio-Licht. You can find them on Twitter @natrubio__.

Patent Drop is a publication of The Daily Upside. For any questions or comments, feel free to contact us at patentdrop@thedailyupside.com.