Happy Tuesday and welcome to Patent Drop!

Today, Sony’s deepfake-catching blockchain technology could help track down misinformation and overcome AI trust issues. Plus: Amazon is making AI fraud detection for everyone, and Tesla’s autonomous vehicles are shifting gears.

Let’s check it out.

Sony’s Blockchain Fact Checker

Sony may be looking at new ways to put blockchain technology to good use.

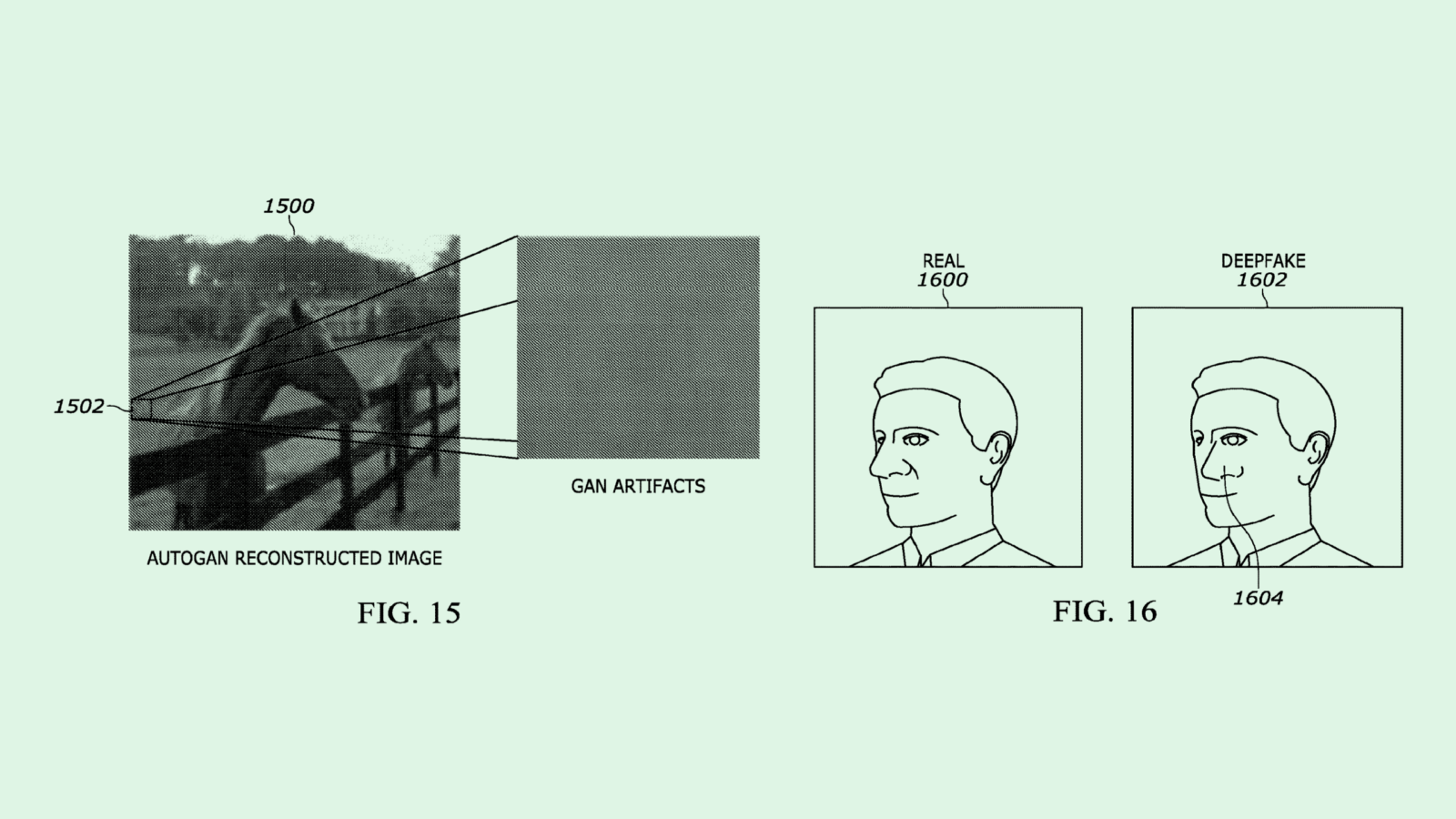

The corporation filed a patent application for “fake video detection using blockchain.” Sony’s tech uses AI image analysis and “image fingerprinting” to determine when a video has been altered or faked based on an original.

“Modern digital image processing, coupled with deep learning algorithms, presents the interesting and entertaining but potentially sinister ability to alter a video image of a person into the image of another person,” Sony said in the filing. The tech aims to solve this issue by determining whether a video is genuine or AI-generated.

Sony’s system uses blockchain to create “digital fingerprints” of videos. Each block stores a “hash” of a video, which is essentially a digital verifier, along with metadata such as location or timestamp, to prove authenticity.
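To make the fingerprint idea concrete, here is a minimal sketch, not Sony’s implementation. It assumes the fingerprint is a SHA-256 digest of the raw video bytes (the filing’s “image fingerprinting” may work differently), and the names `Block`, `fingerprint`, `append_block` and `is_registered` are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Block:
    video_hash: str  # SHA-256 digest of the video bytes (assumed fingerprint)
    metadata: dict   # e.g. location and timestamp
    prev_hash: str   # digest of the previous block, which links the chain

    def digest(self) -> str:
        # Serialize with sorted keys so the same block always hashes the same way.
        payload = json.dumps(
            {
                "video_hash": self.video_hash,
                "metadata": self.metadata,
                "prev_hash": self.prev_hash,
            },
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def fingerprint(video_bytes: bytes) -> str:
    """Return a hex digest that changes if any byte of the video changes."""
    return hashlib.sha256(video_bytes).hexdigest()


def append_block(chain: list, video_bytes: bytes, metadata: dict) -> Block:
    """Add a block for this video, linked to the block before it."""
    prev_hash = chain[-1].digest() if chain else "0" * 64
    block = Block(fingerprint(video_bytes), metadata, prev_hash)
    chain.append(block)
    return block


def is_registered(chain: list, video_bytes: bytes) -> bool:
    """Check whether this exact video has a fingerprint on the chain."""
    digest = fingerprint(video_bytes)
    return any(block.video_hash == digest for block in chain)
```

Because each block stores the digest of the one before it, quietly editing an old entry would change every digest that follows, which is where the immutability comes from.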

Before a video can get added to this blockchain, Sony’s system puts it through several different kinds of analysis to determine if the subject of the video is a real human being. First, a facial recognition model checks for irregularities in facial texture or between the face and the background, as well as whether the facial movements seem natural.

Next, the system employs a “discrete Fourier transform,” a mathematical technique that can detect irregularities in video brightness. For audio, it may use a neural network to assess whether the voice in the video is representative of natural human speech.
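The filing doesn’t spell out how the transform is applied, so the following is only a rough illustration. It assumes one average brightness value per frame and treats a large share of high-frequency energy as a sign of unnatural flicker; the function names and that heuristic are assumptions, not details from the patent.

```python
import cmath
import math


def dft_magnitudes(signal):
    """Magnitude of each discrete Fourier transform coefficient of the signal."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)))
        for k in range(n)
    ]


def high_frequency_ratio(brightness):
    """Share of non-constant spectral energy that sits in the upper half of the band."""
    mags = dft_magnitudes(brightness)
    half = len(mags) // 2
    band = mags[1 : half + 1]  # skip k=0 (average brightness), keep up to Nyquist
    total = sum(m * m for m in band)
    if total == 0:
        return 0.0
    cutoff = len(band) // 2
    return sum(m * m for m in band[cutoff:]) / total
```

A slow, smooth change in lighting would score near 0 on this measure, while brightness that jumps back and forth every frame would score near 1. Where to draw the line between the two is left open here.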

If the video is determined to be fake, the system will either refuse to add its data to the blockchain entirely, or will add it with an indicator that it’s fake. The fake video may also be reported to the original video distributor or service provider to be taken down.
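The two outcomes described above, refusing a fake outright or recording it with a warning label, could be sketched as a simple policy switch. Everything here (`Policy`, `register_video`, the plain list standing in for the blockchain, and the `report` callback) is a hypothetical stand-in rather than anything named in the filing.

```python
from enum import Enum


class Policy(Enum):
    REJECT = "reject"  # fakes are never written to the ledger
    FLAG = "flag"      # fakes are written, but marked as fake


def register_video(ledger, video_id, looks_fake, policy=Policy.FLAG, report=None):
    """Return True if an entry for this video was written to the ledger."""
    if looks_fake and report is not None:
        # Optionally notify the distributor or service provider.
        report(video_id)
    if looks_fake and policy is Policy.REJECT:
        return False
    ledger.append({"video_id": video_id, "fake": looks_fake})
    return True
```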

As AI-generated content continues to weave its way into everyday life, it’s becoming harder for people to distinguish fake faces from real ones. According to a study released in November by the Australian National University, the majority of test subjects couldn’t tell an AI-generated face from a real one, specifically when that face was white. (Non-white AI-made faces still fell into the so-called “uncanny valley,” likely due to disproportionate training data in the models that made them.)

Viral deepfake images are becoming more and more common, too, whether it be the pope in a puffer jacket or Taylor Swift peddling Le Creuset cookware. And ahead of the 2024 US presidential election, the spread of this kind of content could quickly tip from humor to misinformation.

But Sony’s patent lays out an example of how blockchain could be used to fight it, said Jordan Gutt, Web 3.0 Lead at The Glimpse Group. Two of blockchain’s biggest features are immutability and transparency, said Gutt. Implementing those tenets into content distribution could help service providers ensure that the content they’re serving comes from actual human beings.

Though it’s unclear exactly how a system like Sony’s would be implemented, “the immutability, transparency and security of it are things that would improve the user experience and trustworthiness across multiple platforms,” he said.

This is just one example of how blockchain and AI may converge, said Gutt. The traceable nature of this tech could be valuable for verifying the authenticity of data in datasets, bringing more integrity to the AI training process, he said. “Blockchain really has these core functionalities that make a database seem antiquated.”

But blockchain adoption faces big consumer trust issues. After crypto’s fall from grace in 2022, blockchain technology gained a bad reputation. Before blockchain can be used to build trust in other technologies, the tech firms that want to use it may need to figure out how to make consumers trust it, too.

Amazon’s Auto-ML

Amazon wants to make AI for any business that wants it.

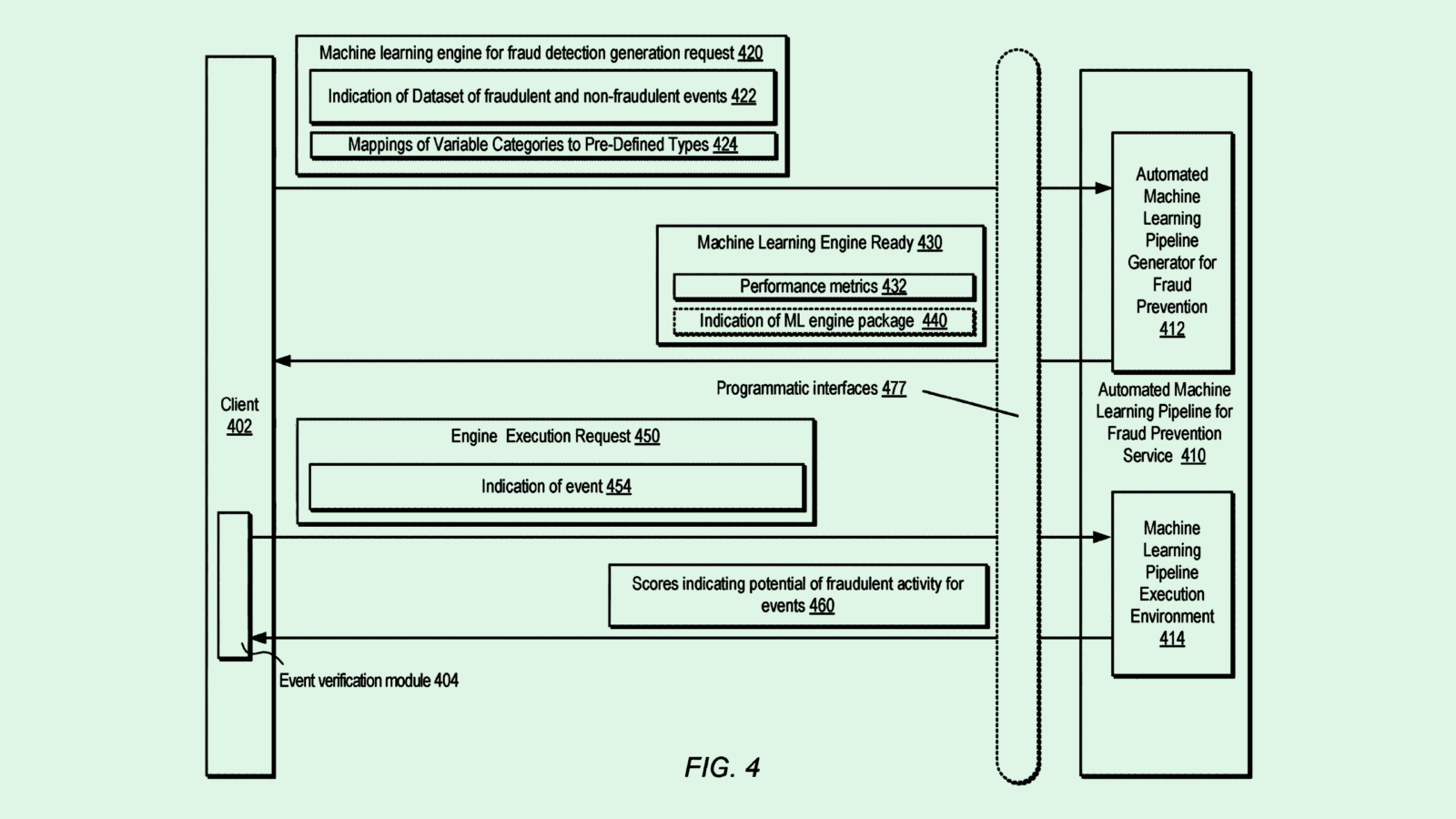

The company is seeking to patent “automated machine learning pipeline generation.” Amazon’s filing describes a service in which businesses or developers can feed large amounts of data to automatically get a trained machine learning tool.

When Amazon’s system receives a dataset and a request to create a machine learning model, it first validates the data to make sure it meets certain criteria, then splits it into three datasets: training data, validation data, and test data. The system then enriches these datasets using “external data sources” and transforms them to be more suitable for a model to learn from.
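As a rough picture of the validate-and-split step, here is a minimal sketch. The 70/15/15 proportions, the “no missing fields” check, and the function names are all assumptions for illustration; the filing doesn’t specify them, and the enrichment and transformation steps are left out.

```python
import random


def validate(rows, required_fields):
    """Keep only rows where every required field is present and not None."""
    return [
        row for row in rows
        if all(row.get(field) is not None for field in required_fields)
    ]


def split(rows, train=0.7, validation=0.15, seed=0):
    """Shuffle the rows, then cut them into training, validation and test sets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)  # fixed seed keeps the split repeatable
    n_train = round(len(rows) * train)
    n_validation = round(len(rows) * validation)
    return (
        rows[:n_train],
        rows[n_train : n_train + n_validation],
        rows[n_train + n_validation :],  # whatever remains is the test set
    )
```

The model learns from the training set, gets tuned against the validation set, and is scored once on the test set, which it has never seen.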

After this pre-processing, the model is trained on this data and gift-wrapped into an “executable package” for the customer to run. Along with the model itself, this package essentially includes everything the customer needs to get the most use out of it, such as enrichment and transformation “recipes” that help the customer process their own data. The entire package is then tested and the customer evaluates the results to make sure it works as they intended.

Amazon’s filing specifically notes that this service may be useful for building machine learning fraud prevention tools, which often require “significant domain expertise, an engineering team to automate and integrate it into business workflow, and a number of investigators to keep a human eye on suspicious activities. This demands massive investment and hence, many online companies have relied on external fraud management solutions in the past.”

Though Amazon got a later start on AI compared to its Big Tech counterparts like Google and Microsoft, the company has quickly climbed the ranks to narrow the gap. Its announcements in recent months have included new chips, generative AI tools and Amazon Q, a chatbot for AWS customers that it debuted at re:Invent.

And last week, its recently restructured AGI team said in an academic paper that its text-to-speech model is displaying “state-of-the-art naturalness” and “emergent abilities.” The paper notes that the model was trained on 100,000 hours of “public domain speech data.”

Despite Amazon’s growing strength, Nvidia surpassed it in market capitalization last week for the first time since 2002. Though Nvidia has largely ridden the AI chips wave to get there, it has also expanded outward by partnering with Cisco to help businesses build in-house AI computing, entering the $30 billion bespoke cloud chips market, and seeking AI software patents across a range of different sectors.

But with patents like this, Amazon may be looking to expand outward, too. This tech in particular would help get AI fraud prevention tools into the hands of enterprises without having to build out their own AI teams.

It’s also not the first time the company has attempted to lower the barrier to AI. Its previous patent for a “pre-trained model selector” aimed to get machine learning in the hands of those “with little or no prior knowledge.” Making AI as easy to access as possible only serves to broaden its customer base.

Tesla’s Back and Forth

Tesla wants to make sure its self-driving cars know which way to go.

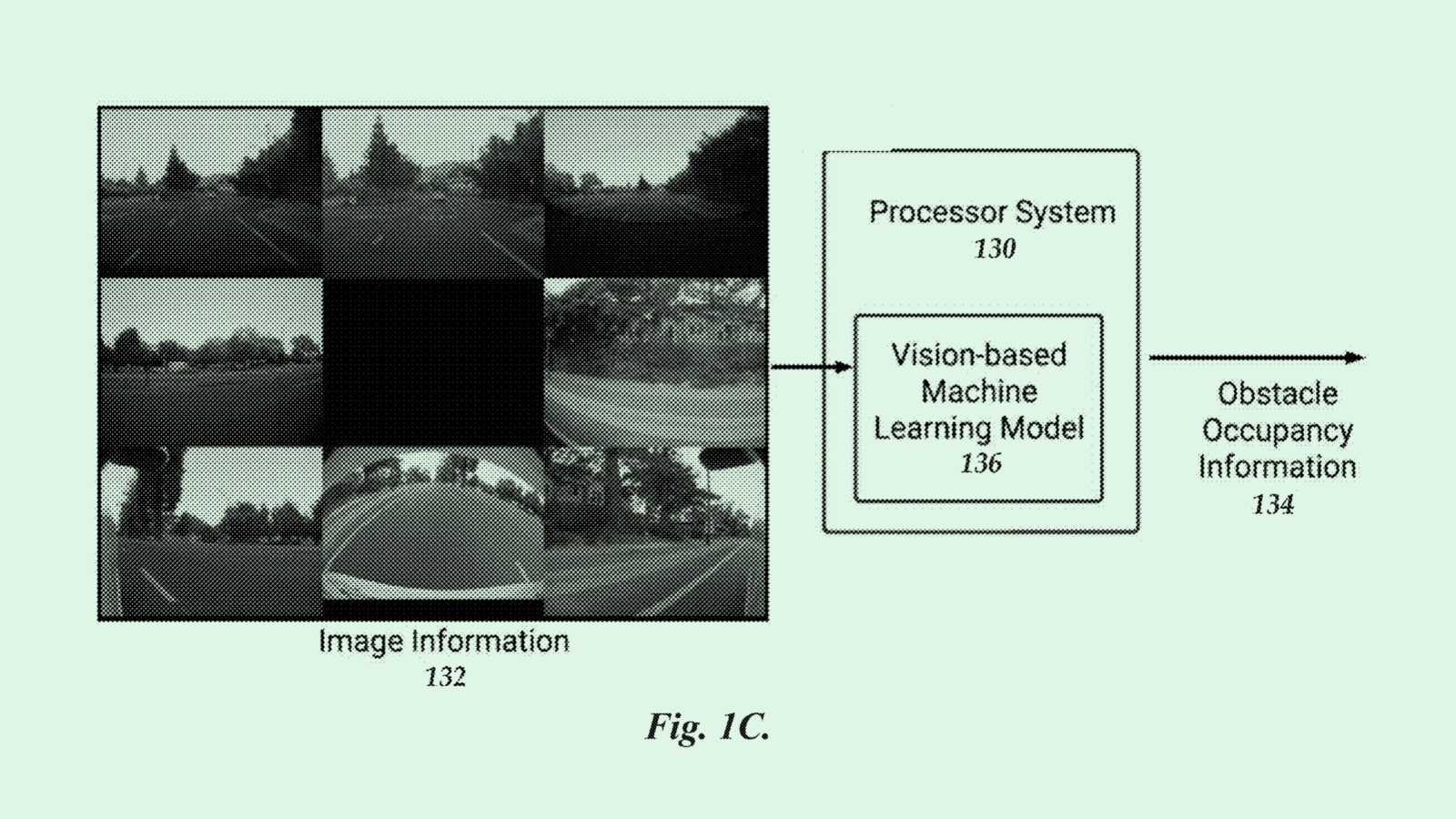

The company wants to patent “automated adjustment of vehicle direction” based on environmental analysis. Put simply, Tesla’s tech aims to give its vehicles a better awareness and understanding of their surroundings, such as wonky objects or obstacles in their path, as well as of driver intent, in order to decide which direction to set the gear.

Tesla’s system uses machine learning to process data from its vehicle’s dozens of sensors to determine if there may be obstacles that are outside of the driver’s field of vision. It also uses AI to learn the driver’s historical behavior as a way to determine intent, and uses GPS to determine appropriate orientations.

All of this data collection culminates in a system which automatically anticipates what gear to shift into in any given situation. For example, if a user tends to park in reverse in their garage at home, but park facing forward in the lot at their office, the car would automatically shift into those gears without user interference.
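The garage-versus-office example boils down to remembering what the driver usually does in each place. Tesla’s filing describes machine learning for this; the sketch below swaps in a plain frequency count to show the idea, and the class name, place labels, and three-observation minimum are assumptions.

```python
from collections import Counter, defaultdict


class GearPredictor:
    """Suggest a gear for a place based on what the driver chose there before."""

    def __init__(self, min_observations=3):
        self.history = defaultdict(Counter)  # place -> count of each gear chosen
        self.min_observations = min_observations

    def record(self, place, gear):
        self.history[place][gear] += 1

    def predict(self, place):
        counts = self.history.get(place)
        # With too little history, make no suggestion and leave it to the driver.
        if not counts or sum(counts.values()) < self.min_observations:
            return None
        return counts.most_common(1)[0][0]
```

A real system would presumably weigh this habit against what the sensors see, so that a familiar routine never overrides an obstacle in the way.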

For unseen objects, such as when a driver accidentally chooses forward-driving mode without realizing there is an object sitting in front of their bumper, Tesla’s system may aim to mitigate a crash by reversing to avoid it. “In such a situation, if the driver persists in operating the vehicle, there is a risk of the vehicle colliding with the object.”

Several of Tesla’s patents aim to give its vehicles a heightened sense of awareness, including a recent one for a vision-based system with “thresholding for object detection” that could help put an end to phantom braking. Any tech that helps its autonomous systems be more cautious makes sense for Tesla, given that it hasn’t had the smoothest ride with its self-driving tech.

The company has run into regulatory backlash from the National Highway Traffic Safety Administration (NHTSA) more than once regarding this tech, including a probe of incidents involving Tesla drivers crashing into emergency vehicles while using Autopilot and concerns regarding a bug that lets drivers take their hands off the wheel for extended periods.

In December, the company also recalled 2 million vehicles – nearly every one it sold in the US in the past decade – to update software that makes sure its drivers are actually paying attention when their cars are driving themselves.

And last week, The Washington Post published a report that Tesla’s self-driving system may have been engaged during a fatal crash in 2022 involving a Tesla employee, making the incident the first recorded fatality involving the system. Though CEO Elon Musk said that Full Self-Driving wasn’t downloaded into the car, the NHTSA said that evidence of whether or not the feature was involved was destroyed in the crash.

Tesla isn’t the only self-driving car company that has faced a bumpy road. Alphabet subsidiary Waymo, whose cars operate at level-four self-driving automation compared to Tesla’s level-two fleet, issued a recall for its software after two Waymo vehicles hit the same truck within minutes.

Public trust in autonomous vehicles is already fragile. According to the J.D. Power 2023 U.S. Mobility Confidence Index, comfort and confidence in self-driving vehicles declined for the second year in a row. Despite optimism from tech and auto firms alike, these kinds of incidents may further damage this tech’s already injured public perception.

Extra Drops

- Intel wants to keep its chips on lockdown. The company filed a patent application for methods to prevent “counterfeiting and tampering of semiconductor devices.”

- eBay is getting into the creator economy. The e-commerce platform is seeking to patent a way to use machine learning for “matching influencers with categorized items.”

- Google wants to hold your attention. The tech firm wants to patent techniques for “reducing distraction in an image.”

What Else is New?

- Adobe launched an AI assistant for Acrobat and Reader that can summarize and answer questions about PDFs.

- Elon Musk said that a patient implanted with a Neuralink device can control a computer mouse by thinking. Musk said the patient made a full recovery with “no ill effects that we are aware of.”

- Tinder is expanding ID verification in the age of generative AI images. The verification will include driver’s license and selfie videos.

Patent Drop is written by Nat Rubio-Licht. You can find them on Twitter @natrubio__.

Patent Drop is a publication of The Daily Upside. For any questions or comments, feel free to contact us at patentdrop@thedailyupside.com.