Happy Thursday and welcome to Patent Drop!

Today, Toyota’s patent to track carbon emissions throughout manufacturing highlights a new way to get people to buy electric vehicles and hybrids. Plus: Google wants to track and predict quantum errors, and OpenAI adds to its tiny patent war chest.

Let’s check it out.

Toyota’s Carbon Cost

Toyota may want to pass the cost (or the savings) of carbon emissions onto its customers.

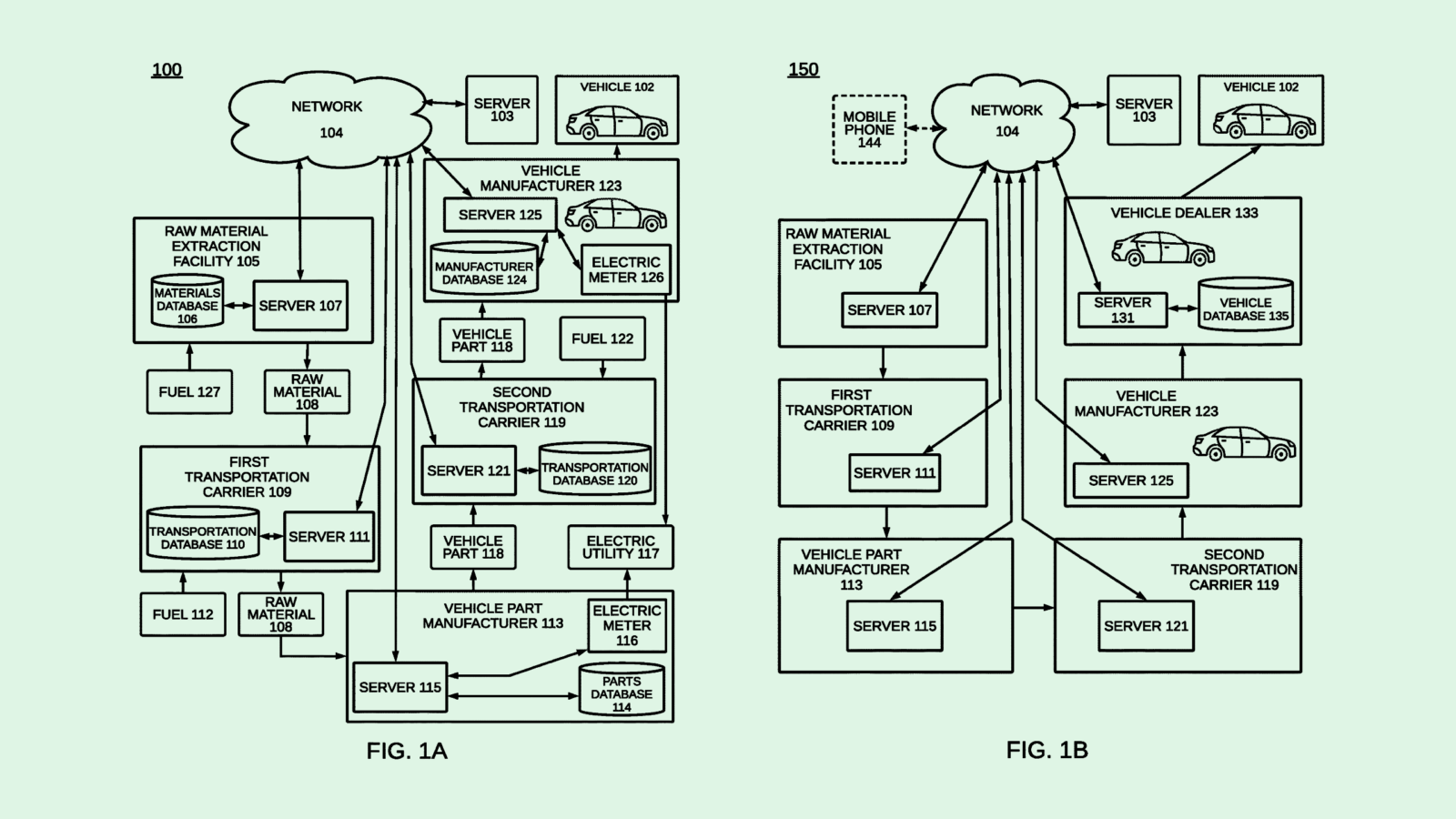

The auto manufacturer filed a patent application for “carbon footprint determination for manufacturing a vehicle.” This tech essentially tracks how much carbon is emitted when creating vehicle parts, and sets the vehicle’s price “based on the carbon footprint.”

To calculate the carbon footprint of manufacturing each vehicle, Toyota’s tech would take into account every process that goes into producing each component, including obtaining raw building materials, transportation, the manufacturing itself, and installation.

The total carbon footprint is then used to adjust the price of the vehicle. To do this, Toyota’s system compares the individual vehicle’s footprint against the average carbon footprint of manufacturing a similar vehicle, and factors the difference into the price. The system may also perform this calculation for the individual components to discover the “highest carbon contributing part” of a vehicle.

“The price of the vehicle can be set so as to encourage consumers to purchase vehicles that are manufactured using lower total carbon footprints,” Toyota said in the filing. For example, the price “may be set to include a discount that is inversely related to the total carbon footprint for manufacturing the vehicle.”
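To make the arithmetic concrete, here is a minimal sketch of how such a pricing adjustment might work. The filing does not publish a formula, so the linear adjustment, the `rate_per_kg` parameter, and every name below are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """One vehicle part, with emissions (kg CO2e) for each stage named in the filing."""
    name: str
    raw_materials: float
    transportation: float
    manufacturing: float
    installation: float

    def footprint(self) -> float:
        # Sum every stage that goes into producing and fitting this part.
        return (self.raw_materials + self.transportation
                + self.manufacturing + self.installation)


def total_footprint(components):
    """Total manufacturing footprint of a vehicle, in kg CO2e."""
    return sum(c.footprint() for c in components)


def highest_contributor(components):
    """The part with the largest footprint."""
    return max(components, key=lambda c: c.footprint())


def adjusted_price(base_price, vehicle_footprint, average_footprint, rate_per_kg):
    """Hypothetical linear rule: discount below-average vehicles, surcharge the rest."""
    delta = vehicle_footprint - average_footprint  # negative means cleaner than average
    return base_price + delta * rate_per_kg
```

For example, a vehicle with a footprint of 10,000 kg against a 11,000 kg average, at an assumed rate of $0.50 per kg, would see a $30,000 base price drop to $29,500. A real system could just as easily use tiers or caps instead of a straight line.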

As it stands, cost remains the biggest pain point in getting consumers to purchase electric vehicles, said Madeline Ruid, AVP and research analyst covering clean energy at Global X ETFs. Any kind of purchasing incentive is going to be welcome, whether it comes from government-sponsored rebates or from the automaker itself, as this patent suggests.

“Any potential cost savings based off a vehicle’s lower-than-average carbon footprint could influence more price-conscious consumers,” said Ruid.

Toyota, however, has historically focused less on fully electric vehicles than its competitors. That’s because of the company’s success with hybrids, namely the ever-popular Prius. Ted Ogawa, CEO of Toyota Motor North America, told Automotive News in March that he doesn’t expect EVs to make up more than 30% of the vehicle market by 2030. “We are respecting the regulation, but more important is customer demand,” Ogawa said.

Demand for hybrids has jumped as factors such as cost and lack of charging infrastructure scare buyers away from EVs. Hybrid sales grew 57.2% in the first half of 2024, according to Bloomberg, though Toyota’s sales fell around 9.8% in the aftermath of recalls of the Prius and other models.

“Hybrid vehicles have been gaining popularity in recent quarters as they can be cheaper than fully electric EVs but still offer gas cost-savings potential,” said Ruid. “We have seen other automakers shift their strategy to focus more on hybrid models and match current consumer preferences.”

But despite current market shakiness, Ruid expects EVs to take over the market in the long run, and for Toyota to follow suit. “Toyota is among the automakers working towards next-gen EV batteries and models, so we expect they will be able to potentially shift their focus more towards fully-electric vehicles as the market evolves,” she said.

Plus, like every other automaker, Toyota has its own lofty climate goals to meet, and tracking carbon emissions as this patent suggests could help it meet them. The company has pledged to become carbon neutral across its operations by 2050 — though that goal may be contradicted by the company’s heavy lobbying to stop climate policy goals.

Google’s Quantum Fix

Google wants to keep quantum computers in line.

The tech giant filed a patent application for a method of “quantum error correction.” Google’s tech essentially helps identify where errors crop up when running an algorithm on a quantum computer.

Because the environment needed to run a quantum computer is so delicate, the machines tend to make mistakes when knocked out of balance. Anything from temperature to electromagnetic waves to the “noise” of the surrounding atoms can wreck the fragile state of a quantum computer, causing what’s called decoherence, which can lead to errors.

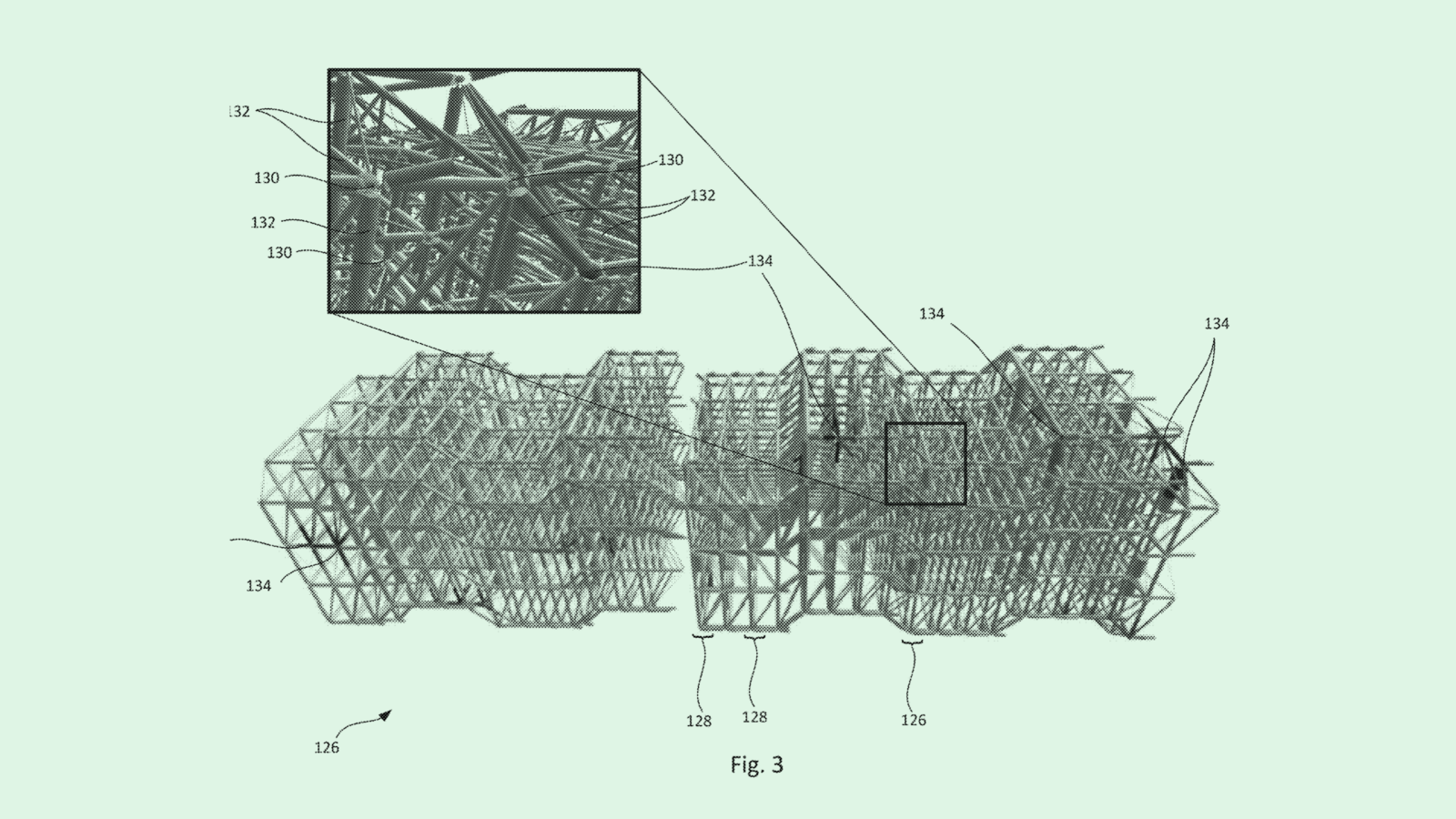

Google’s patent is quite technical, but to break it down simply: Before a quantum algorithm is run, this system would create a multi-layer model to show how errors may be created and spread throughout the computer’s circuits. Every layer of this model corresponds to a different error that could occur, along with its probability of occurring.

While a quantum algorithm is running, the system collects data on those errors and translates that into events which are recorded on an “array,” or a table that tracks the events in real time and updates as more errors occur. By updating the array, the system gets better at determining probabilities of error. The layered model then analyzes the data in that array to find out exactly where an error occurred and fix it in real time.
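The idea of an array that updates as errors come in can be illustrated with a toy example. This is not Google’s layered model: it is a simplified sketch that assumes each “event” is a flip in a parity-check reading between two measurement rounds (a common convention in error correction), and the class name, `prior`, and `prior_weight` are hypothetical.

```python
class DetectionEventTable:
    """Running table of detection events, one column per detector."""

    def __init__(self, num_detectors, prior=0.01, prior_weight=10):
        self.num_detectors = num_detectors
        self.prior = prior                # assumed starting error probability
        self.prior_weight = prior_weight  # how many rounds the prior is worth
        self.counts = [0] * num_detectors
        self.rounds = 0
        self.previous = [0] * num_detectors

    def record_round(self, syndrome):
        """Log one round of 0/1 readings; return the events that fired."""
        # An event fires wherever a reading differs from the previous round.
        events = [old ^ new for old, new in zip(self.previous, syndrome)]
        for i, fired in enumerate(events):
            self.counts[i] += fired
        self.rounds += 1
        self.previous = list(syndrome)
        return events

    def probabilities(self):
        """Per-detector event rate, smoothed by the prior."""
        denom = self.rounds + self.prior_weight
        return [(c + self.prior * self.prior_weight) / denom for c in self.counts]

    def most_suspect_detector(self):
        """Index of the detector with the highest estimated event rate."""
        probs = self.probabilities()
        return max(range(self.num_detectors), key=probs.__getitem__)
```

Each call to `record_round` both logs new events and sharpens the probability estimates, which mirrors the feedback loop the filing describes. Actually locating and correcting an error would need a decoder on top of this table.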

2024 has been a big year for quantum. In June, Google reportedly developed a quantum computer capable of executing calculations that would take a classical computer 47 years. Microsoft and Quantinuum announced their own breakthrough in reliable quantum computing with record-low error rates. And Honeywell may be considering an IPO for Quantinuum: It values the startup at $10 billion, Bloomberg reported last week.

Though handling errors could help clear one of quantum’s major roadblocks, a lot still stands in the way of making reliable quantum computing happen. For starters, most quantum computers have only a few hundred qubits (the bits that carry out the computer’s calculations). Atom Computing’s quantum computer broke IBM’s record in October at just over a thousand.

The machines remain small because quantum computers require a delicate environment and freezing temperatures to operate, and the only way to grow them is to bulk up the hardware itself. Plus, both the complex hardware and the talent are incredibly limited. That makes quantum computing expensive and extremely difficult to scale.

The payoff, however, could be tremendous. These machines could be capable of handling difficult calculations an order of magnitude faster than classical computers, making them applicable to industries such as finance, cybersecurity, and medicine.

The tech also has the power to exponentially scale AI training, and could even help solve the mounting cost of AI training infrastructure, Dr. Jamie Garcia, director of quantum algorithms and partnerships at IBM, told VentureBeat in July. Securing IP in the nascent space could allow Google a competitive advantage if or when science is able to break quantum’s barriers.

OpenAI Compares and Contrasts

OpenAI wants to streamline model training.

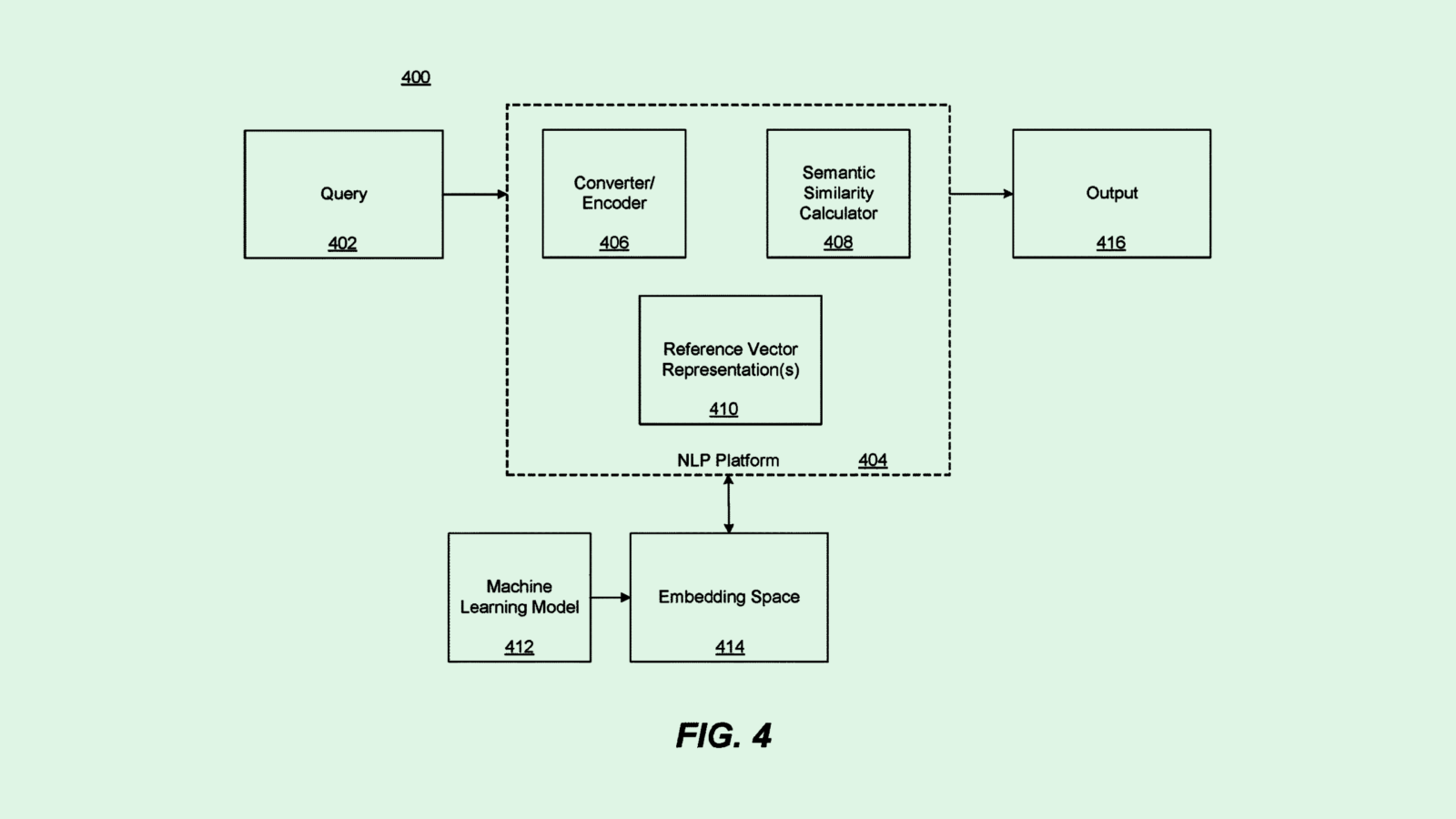

The company filed a patent application for a way to use “contrastive pre-training” to generate text and code embeddings. To put it simply, OpenAI’s filing details a way to train a model on similar and dissimilar pairs of data in order to improve its accuracy.

First, the system receives datasets made up of several “positive” data pairs (semantically similar to one another) and “negative” data pairs (different from one another). It then converts those pairs into “vector representations,” which capture the essential features of a data point in a way that’s easier for a machine learning model to understand.

These vector representations are used to train an AI model to generate more vector representations; it can thereby effectively learn the similarities and differences between data points. The model is also trained to generate a similarity score between the data points.
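For a sense of what training on positive and negative pairs looks like in practice, here is a minimal sketch of a similarity score and a contrastive loss. It uses cosine similarity and a softmax-style loss that are common in contrastive learning generally. The filing is not quoted here as specifying either, so the function names and the `temperature` value are assumptions.

```python
import math


def cosine_similarity(u, v):
    """Similarity score between two vectors, from -1 (opposite) to 1 (identical)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(anchors, candidates, temperature=0.1):
    """Average loss over a batch of embedding pairs.

    anchors[i] and candidates[i] form a positive pair; every other
    candidates[j] serves as a negative for anchors[i].
    """
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [cosine_similarity(anchor, c) / temperature for c in candidates]
        # Log-sum-exp with the max subtracted for numerical stability.
        peak = max(logits)
        log_sum = peak + math.log(sum(math.exp(x - peak) for x in logits))
        # The loss is small when the positive pair scores highest.
        total += log_sum - logits[i]
    return total / len(anchors)
```

The loss shrinks as each positive pair’s score rises relative to the negatives, so a model trained to minimize it is pushed to place similar items close together and dissimilar ones far apart.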

While this sounds somewhat esoteric, this method of training allows for a more robust generative model. Using both positive and negative samples allows a model to perform better on new and unseen data, and converting data into vector representations is more computationally efficient, OpenAI noted. It’s also meant to work on large datasets and a lot of data types, making this method versatile and scalable.

Unlike those of Google, Microsoft, and other large AI firms, OpenAI’s patent war chest remains relatively empty. The company has fewer than a dozen total patents, compared to the hundreds of patents other AI giants file on a regular basis. There are a few potential reasons for this, said Micah Drayton, partner and chair of the technology practice group at Caldwell Intellectual Property Law.

One reason could be resources, said Drayton. While OpenAI is now an AI industry kingpin worth $80 billion, that hasn’t always been the case. The company was founded in 2015, and didn’t start filing patent applications until 2023. Giants like Google and Microsoft have decades’ worth of resources.

Drayton noted that patent litigation can add up quickly, and it may not have been a priority for the company in its early days. The other consideration is that patent applications can often take 18 months to become public — it’s quite possible many simply haven’t become public yet, he said. However, because of the massive head start that bigger tech firms have, OpenAI may “find themselves boxed out,” said Drayton.

“There’s three parties in that race: There’s OpenAI, there’s a competitor that has a war chest of patents, and there’s the [US Patent and Trademark Office],” he said. “They could be at a disadvantage, but there’s always the question of how patentable AI is in general.”

And because development is moving so quickly — and patent guidance is changing, too — Big Tech’s head start may not be as big of an advantage. “It’s possible that [competitors] don’t have a much more mature portfolio in the relevant space than OpenAI does.”

Extra Drops

- Microsoft wants to find what’s been copy-pasted. The company filed a patent application for “intent-based copypasta filtering.”

- PayPal wants to predict a domino effect. The company wants to patent a system for “predicting online electronic attacks based on other attacks.”

- Snap wants to upgrade online shopping. The social media firm filed a patent application for a “customized virtual store.”

What Else is New?

- Meta’s Reality Labs recorded an operating loss of $4.5 billion in the second quarter. Revenue reached $353 million, up around 28% year over year.

- 23andMe CEO Anne Wojcicki filed a proposal to take the company private at a price of 40 cents per share as the company’s stock tumbles.

Patent Drop is written by Nat Rubio-Licht. You can find them on Twitter @natrubio__.

Patent Drop is a publication of The Daily Upside. For any questions or comments, feel free to contact us at patentdrop@thedailyupside.com.