Happy Thursday and welcome to CIO Upside.

Today: What the Trump administration’s approach to AI could mean for enterprise adoption. Plus: Where AI fits into human resources, and how JPMorgan’s recent patent could help it avoid data security slip-ups.

Let’s check it out.

Trump Administration Set to Ramp Up AI Development – Never Mind the Risks

Within hours of taking office, the Trump administration made no secret of its AI stance: Bring it on.

Among the flurry of new executive orders and announcements, several have the potential to shake up the tech industry. With fewer regulations and less oversight to worry about, the organizations building massive AI models are ready to stomp on the gas (which the new administration also happens to favor).

For starters, on Monday Trump revoked a 2023 executive order that required developers of AI systems that could threaten national security and safety to share the results of safety tests with the U.S. government.

Additionally, on Tuesday, Trump trumpeted a joint venture between OpenAI, SoftBank, Oracle, and Abu Dhabi’s MGX called Stargate, in which the firms are expected to commit up to $500 billion over the next four years. Oracle Chairman Larry Ellison said that 10 of the project’s data centers were already under construction in Texas.

- In a blog post, OpenAI said it would begin by “deploying $100 billion immediately,” and that it and Arm, Microsoft, Nvidia, and Oracle are the initial technology partners for the project. “This infrastructure will secure American leadership in AI,” the blog post said.

- Trump said in a press conference Tuesday that he would “help a lot through emergency declarations … we’ll make it possible for them to get that production done very easily.”

- Not every tech leader is all in, however: Anthropic CEO Dario Amodei called the plan “a bit chaotic,” and Elon Musk, the centibillionaire Trump chose to lead a government efficiency initiative, cast doubt in a post on X on whether the project has enough funding.

The support may be music to model developers’ ears, but what do these changes mean for enterprises? For one, they will probably shift a lot more of the security and safety responsibility onto the people using AI, rather than the ones building it, said Thomas Randall, advisory director at Info-Tech Research Group.

Pumping up a model’s size and capability doesn’t solve the data security issues that AI still faces. And the repeal of Biden’s executive order strips AI developers of their already-minimal safety responsibilities, meaning it will be entirely up to them whether to implement safeguards.

This may force organizations adopting AI to be even more diligent about responsible AI principles and risk frameworks, said Randall.

“The order’s repeal means that organizations must take on increased accountability for adopting responsible AI principles and implement an AI risk framework in their use and governance of AI technologies – especially regarding privacy, safety, and security,” said Randall. “There will be less federal support for organizations to have peace of mind with a safety baseline.”

Plus, while the infrastructure investment may open the door for big AI developers to make their models even bigger, it doesn’t mean that adoption will grow alongside it, said Randall. In fact, some enterprises may adopt AI even more slowly, he said, “especially if organizations do not have a strong data management strategy, cannot form a business case to justify the investment, or are put off by safety risks.”

The impact of this administration’s approach may stretch beyond the U.S., said Randall: Compared with the EU’s “high standards for risk and privacy,” the U.S. already had lower regulatory barriers “to spur innovation,” he said.

“The next few years of the Trump administration, especially given the presence of technology leaders at the inauguration, will potentially exacerbate those regional differences including economic, social, and legal repercussions,” said Randall.

AI Could Give Human Resources ‘More Than Human Power’

Do human resources departments need to rely entirely on human labor?

As AI adoption ramps up among enterprises, CIOs are looking for ways that automation can transform every department – HR included. Though “human resources will remain human,” AI agents and automation could help departments make better use of their data and their time, said Maxime Legardez Coquin, CEO and co-founder of AI talent acquisition firm Maki.

“We want to give human resources more than human power,” said Legardez Coquin.

Founded in 2022, Maki offers personalized conversational AI agents for talent acquisition. The company announced a $28.6 million Series A funding round in mid-January, and its client roster includes large companies such as H&M, BNP Paribas, PricewaterhouseCoopers, Deloitte, FIFA, and Abercrombie.

Within HR departments, useful data is often “lost as soon as it’s created,” said Legardez Coquin. Employing AI in HR contexts could allow organizations to collect and use data from thousands of candidates and interviews to make better hiring and talent decisions. “What we want to become is a kind of AI data layer that can help get data of talent pushed to the right place, to the right person at the right time.”

The startup’s focus represents a growing enterprise use case for generative AI, especially as automation with agentic AI continues to capture the tech industry’s attention. “If we can automate plenty of things, we can keep the human part for the value-added things, meaning building relationships,” said Legardez Coquin. “I think it would be amazing.”

And the rush to adopt AI is already well underway: In hiring and recruitment alone, use of AI doubled between 2023 and 2024, from 26% to 53%, according to a survey of HR professionals by HR.com. A report from HR Brew found that just 13% of survey respondents hadn’t incorporated AI into their HR workflows.

However, when using AI in HR contexts, it’s important to be aware of its risks – namely, models’ tendency to take on and amplify biases from their training data. Research from the University of Washington released in October found significant racial and gender discrimination in how large language models ranked resumes based on names.

This is why AI hiring tools shouldn’t rely solely on resumes or “subjective criteria,” said Legardez Coquin. Maki’s tech instead focuses on “assessing skills” that are relevant to a particular job, he said. “I think bias will always exist – with or without AI,” he said. “Over all the experience, what matters is the skill.”

JPMorgan Data Redaction Tech Could Stay Ahead of Privacy Regulation

Many Wall Street institutions are keen to keep up in the AI race, but the technology’s data-security pitfalls make it tricky for finance firms to adopt.

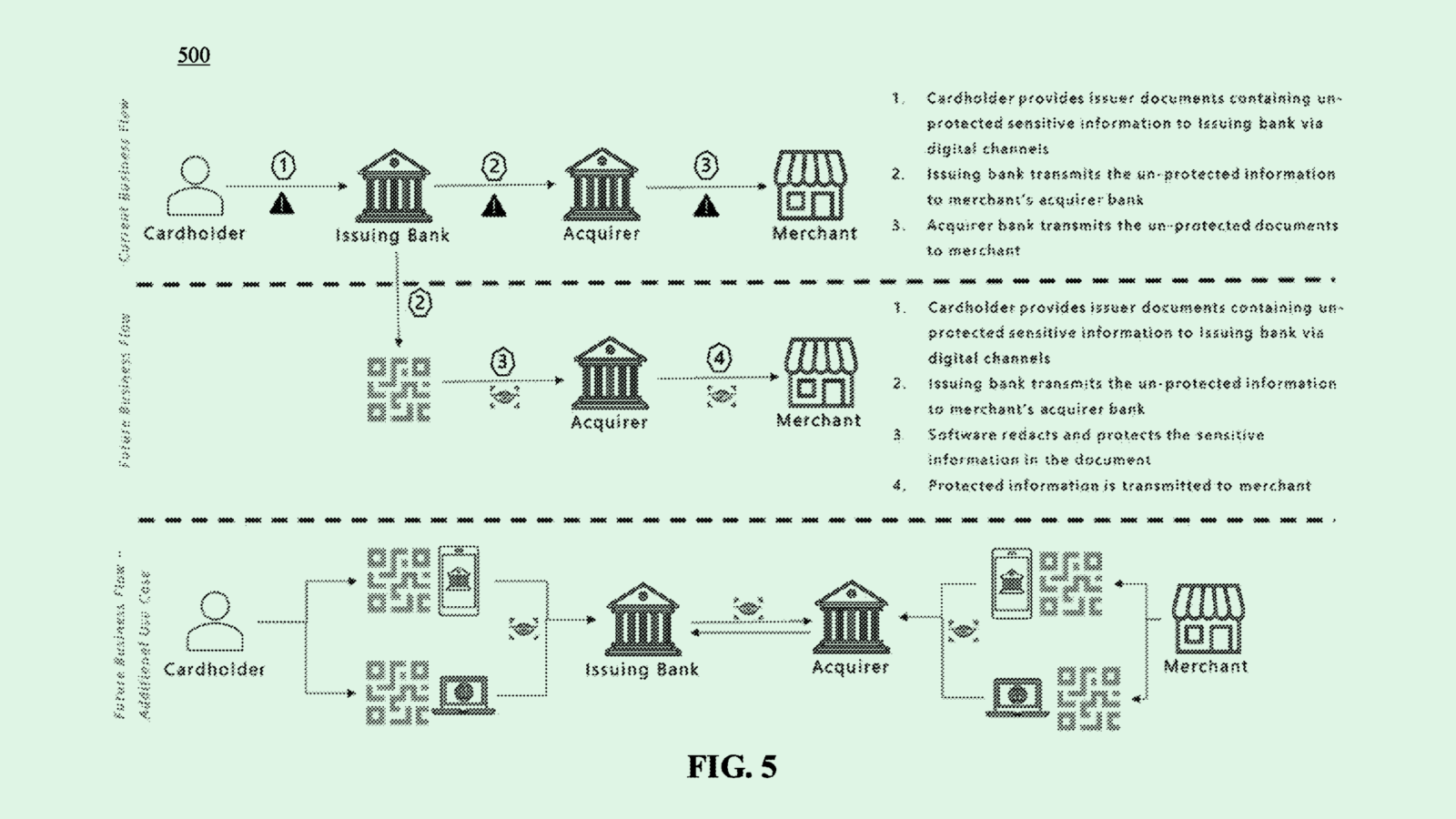

JPMorgan Chase may have a solution: The company is seeking to patent a system for “automated data redaction.” The patent application details a way to use machine learning to automatically recognize and obscure any information deemed sensitive or restricted in a dataset.

Using optical character recognition, the system scans datasets for restricted information and creates new datasets with that information redacted. The patent notes that the tech works with both structured data, such as databases or spreadsheets, and unstructured documents, such as PDFs and images.

Unstructured data tends to cause problems for conventional redaction techniques, so the system could allow JPMorgan to perform redaction in real time “without compromising document integrity,” the company said in the filing.
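The filing doesn’t publish code, but the basic idea, scanning data for restricted information and producing a redacted copy, can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not JPMorgan’s method: simple regular expressions stand in for the machine-learning detection the patent describes, the field names and patterns (`RESTRICTED_FIELDS`, `PATTERNS`) are hypothetical, and the OCR step needed for images and PDFs is left out.

```python
import re

# Hypothetical detection rules. The patent describes ML-based detection;
# these regexes are a simple stand-in for illustration only.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}

# Hypothetical column names treated as restricted in structured data.
RESTRICTED_FIELDS = {"ssn", "account_number"}


def redact_text(text: str) -> str:
    """Replace each pattern match in free text with a [REDACTED:<label>] tag."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{label}]", text)
    return text


def redact_records(records: list[dict]) -> list[dict]:
    """Build a new, redacted copy of structured records; the input is not modified."""
    redacted = []
    for record in records:
        clean = {}
        for key, value in record.items():
            if key in RESTRICTED_FIELDS:
                clean[key] = "[REDACTED]"          # whole restricted column masked
            elif isinstance(value, str):
                clean[key] = redact_text(value)    # scan free text for sensitive patterns
            else:
                clean[key] = value                 # non-text values pass through
        redacted.append(clean)
    return redacted


if __name__ == "__main__":
    rows = [{"name": "A. Client", "ssn": "123-45-6789",
             "note": "Email a.client@example.com"}]
    print(redact_records(rows))
```

Returning a new list instead of editing records in place mirrors the filing’s description of creating new datasets with the sensitive data redacted, so the original data is left intact.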

JPMorgan has been loud about its commitment to AI. Along with its AI-focused patent activity in recent years, the company is largely leading the banking sector in AI adoption, including training every new hire on AI and rolling out AI assistants last year to 60,000 employees.

But given AI’s tendency to spill the beans, the patent is “likely an effort to ensure safeguarding of personal information and allow the business teams to be able to continue to invest in AI,” said Daniel Barber, co-founder and CEO of DataGrail.

Tech like this could also help JPMorgan and other firms get ahead of regulatory issues, Barber said. Though we’re “unlikely to see a federal privacy bill,” he explained, many states may follow models similar to the recently enacted New Jersey Data Privacy Act and the California Privacy Rights Act, especially amid growing AI adoption. “Businesses like JPMorgan Chase would be very probably concerned about that from a compliance standpoint.”

“The market’s still evolving in this area, but it’s clear the regulatory requirements are being put forward, and I wouldn’t expect that to slow down,” Barber added.

Extra Upside

- Paris-based startup Mistral AI intends to eventually go public, CEO Arthur Mensch said at Davos on Tuesday.

- Scale AI is facing a lawsuit from workers who allege they suffered psychological trauma. It’s the startup’s third lawsuit relating to its labor practices in just over a month.

- Nvidia partner SK Hynix posted record quarterly profit as demand for high-end memory chips for AI data centers continues to grow.

CIO Upside is written by Nat Rubio-Licht. You can find them on X @natrubio__.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.