Happy Monday and welcome to CIO Upside.

Today: With a few big tech firms controlling major model development, the onus of governing responsible AI has shifted into the hands of its users. Plus: A few enterprise highlights from CES; and Microsoft’s patent to make sure your workforce uses AI correctly.

Let’s jump in.

AI Market Concentration May Cause an Ethics Responsibility Shift

With AI model development in the hands of just a few major firms and AI adoption in the hands of all, how should the tech be governed?

Following the initial AI frenzy, a few major players have cemented their place as the gold-star generative model proprietors, with Meta, Google, OpenAI and Anthropic emerging on top. The concentrated market has raised the question of who should be responsible for AI governance: the creators or the users.

“All model creation has wound up in the hands of a few dominant ones,” said Pakshi Rajan, co-founder and chief AI and product officer of Portal26. “This kind of general-purpose AI, people don’t have to build anymore.”

There are three main pillars of AI governance that developers often follow when building these models, Rajan said: Data, ethics, and explainability.

- Before building even starts, developers need to make sure their data is up to snuff. This means ensuring it’s as high-quality and free of bias as possible. Once a model is trained, it’s important to monitor it for “overfitting,” which is when a model fits its training data so closely that it performs poorly on new data.

- The next pillar is ethics, Rajan said. This means asking whether or not your model is going to be used for good. “In other words, can I actually trust this model to make good judgments without causing harm to the society?” he said.

- Finally, there’s explainability, or the capability of a model to retrace its steps and show its work, Rajan said. A model being able to explain how it came up with its outputs and what data its responses are derived from is “the holy grail – and the point where some people just give up,” he said.

While the major players can try their best to follow those pillars in creating and deploying their nearly trillion-parameter generative models, such AI systems are so big and so widespread that they’re practically “neural networks on steroids,” Rajan said. Even with guardrails in place, governance is made harder by the fact that many of these models are actively learning and growing from their users.

“On the model creation side, there is governance required,” Rajan said. “They do have guardrails. But they can’t possibly put a guardrail around every document you could possibly upload.”

That has caused a shift, with the burden of AI governance moving into the hands of the people interacting with the models. And despite this new responsibility, enterprises continue to move forward with AI adoption because they’re “afraid that they’re going to lose out” if they prohibit their workforces from using it, Rajan said. “Everyone has to somehow be accountable for how they’re consuming AI.”

The pillars of AI governance for the user look a bit different from those of the builder. The main goal? “Value maximization and risk minimization,” Rajan said. This means identifying where AI is of most use within your organization, and whether the risks of using it – like data security, bias or ethical issues – are significant in those use cases.

As for employers, this could mean implementing clear and understandable responsible use policies and education around AI, and applying consequences when those policies are breached, he said.

“Employees are using models, and it’s the employer’s job to govern and monitor what’s going on,” Rajan said. “Not knowing – and trusting that everyone is using AI responsibly – that phase is out.”

What Enterprise Leaders Can Take Away From CES

Though smart glasses, wellness wearables and robotic vacuum-air purifier hybrids garnered lots of attention at the 2025 Consumer Electronics Show, several announcements from the past week signal trends for the future of enterprise.

AI, of course, took center stage at this year’s CES, from robotics to chips to applications to the models themselves. Unsurprisingly, Nvidia took the opportunity to continue cementing its dominance in the AI industry with ample announcements.

Several of the inventions unveiled point to a larger goal of empowering the individual within the enterprise and democratizing access to AI. One of the biggest examples is Nvidia’s Project Digits, a desk-sized AI supercomputer aimed at AI researchers and small enterprises. The device will cost roughly $3,000 when it hits the market in May.

“Maybe you don’t need a giant cluster. You’re just developing the early versions of the model, and you’re iterating constantly,” Nvidia CEO Jensen Huang said in his keynote. “You could do it in the cloud, but it just costs a lot more money.”

Nvidia also turned its focus to one of the biggest trends in AI: Agents. The company announced a project called Nvidia AI Blueprints, a system for building AI applications to automate enterprise work. The company said these agents act as “knowledge robots” that can “reason, plan and take action to quickly analyze large quantities of data, summarize and distill real-time insights from video, PDF and other images.”

It wouldn’t be CES without some form of gadgetry: The merging of AI and robotics has been a hot topic for the past year, and companies seized the chance to show off where that focus has gotten them, said Ido Caspi, research analyst at Global X ETFs.

“Humanoid robots were also very visible throughout the conference, suggesting that commercialization of these devices is closer than many expect, especially within industrial/manufacturing industries,” Caspi said.

While companies like Samsung, Unitree and Pollen Robotics showed off their machinery, several of Nvidia’s announcements focused on the models and data that autonomous machines run on.

The company debuted several initiatives to help in the training and development of physical AI. Those include Cosmos, a platform of generative world foundation models and guardrails meant to accelerate the development of robotics and autonomous vehicles, and Isaac GR00T, a research initiative aimed at creating general-purpose models and data pipelines for humanoid robotics. “In our view, this can help alleviate the bottleneck of limited data for robotics applications,” Caspi noted.

And of course, tech firms took the opportunity to put their chips on the table … literally. CES is often the place enterprises choose to show off faster and better chips, so it’s no surprise that firms like Intel, AMD, Qualcomm and Nvidia came prepared.

Additionally, despite the notion that AI development is starting to slow, Huang claimed in his keynote that Nvidia’s systems are “progressing way faster than Moore’s Law.” Moore’s Law refers to Intel co-founder Gordon Moore’s 1965 prediction that the number of transistors on a chip would double every year, a pace he revised in 1975 to every two years.
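For a sense of scale, a two-year doubling pace compounds quickly. The snippet below is a minimal sketch, assuming a fixed doubling period; the function name and the sample time horizons are illustrative and do not come from Huang's keynote.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Return how many times larger a quantity is after `years`,
    assuming it doubles once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Illustrative horizons only.
    for years in (2, 10, 20):
        print(f"{years:>2} years: {growth_factor(years):,.0f}x")
```

Under that assumption, a decade works out to five doublings, or a 32-fold gain. Any technology improving "way faster" than that would have to beat this curve.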

Workplaces Face a Balancing Act with Generative AI Monitoring

How can you know whether your employees are using AI the right way? Microsoft may have an answer for that.

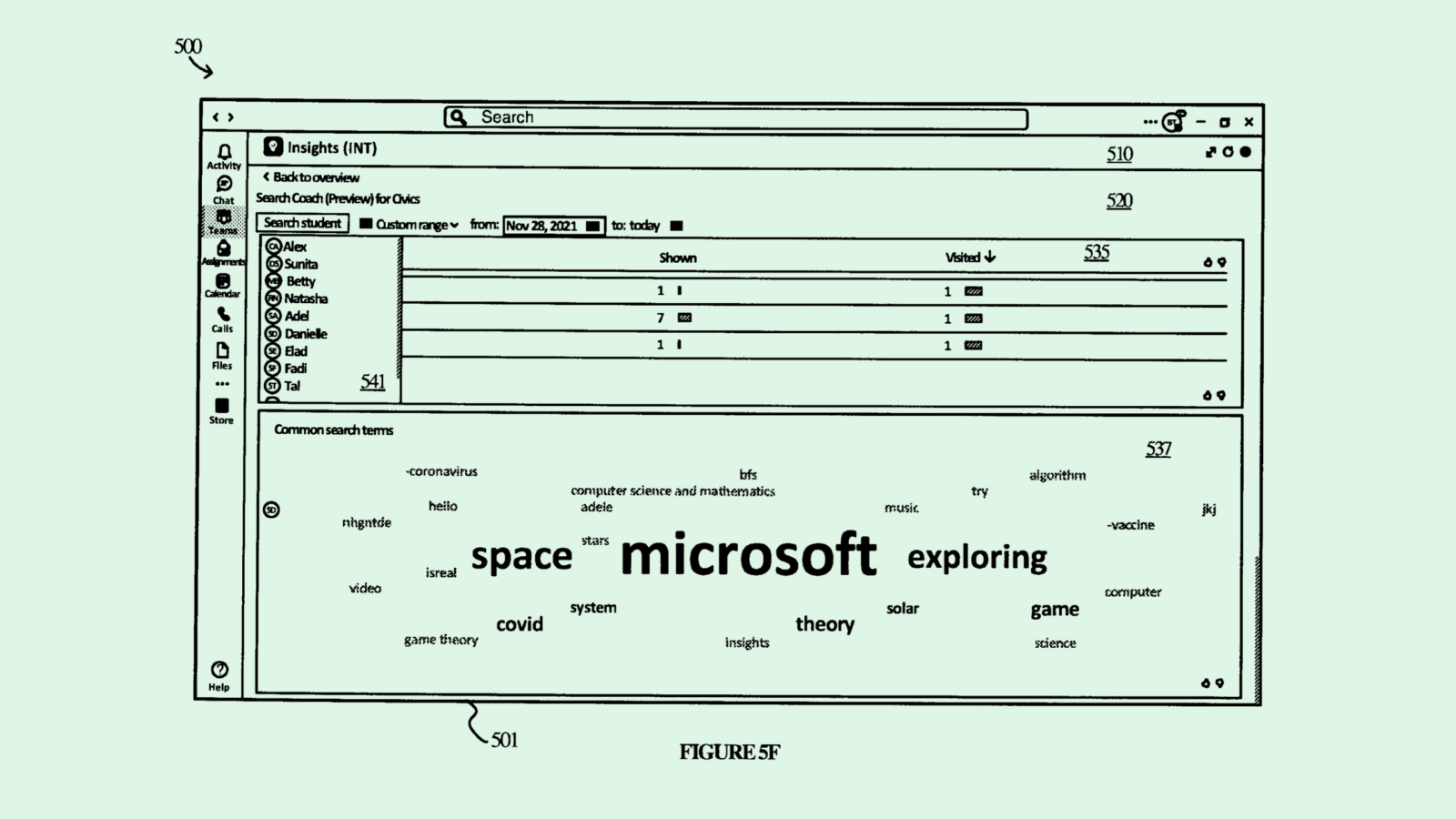

The company is seeking to patent an “insights service for large language model prompting.” Microsoft’s tech is intended to observe and understand how a group of people, such as a business, prompt and utilize large language models. “Users who lack proficiency with respect to prompting are at risk of falling behind in their careers and education,” Microsoft said in the filing.

The system would monitor an “observed group” on a “per-user basis,” tracking prompts and replies as well as behavioral metrics like dwell time, when they stop the conversation and whether they click links provided by the language model. It may categorize user prompts as on-task, off-task or inappropriate, and rate them for their quality.
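To make the idea concrete, here is a rough sketch of what per-user prompt categorization could look like. It is not taken from Microsoft's filing: the keyword lists, the `PromptEvent` fields and the function names are all hypothetical, and a real system would more plausibly rely on a trained classifier than on keyword matching.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass

# Hypothetical keyword lists, chosen purely for illustration.
ON_TASK_TERMS = {"report", "summary", "invoice", "code", "customer"}
INAPPROPRIATE_TERMS = {"password"}


@dataclass
class PromptEvent:
    user_id: str
    prompt: str
    dwell_seconds: float  # how long the user stayed on the reply
    clicked_link: bool    # whether the user followed a link in the reply


def categorize(prompt: str) -> str:
    """Label a prompt as inappropriate, on-task or off-task."""
    text = prompt.lower()
    if any(term in text for term in INAPPROPRIATE_TERMS):
        return "inappropriate"
    if any(term in text for term in ON_TASK_TERMS):
        return "on-task"
    return "off-task"


def per_user_summary(events: list[PromptEvent]) -> dict[str, Counter]:
    """Count prompt categories separately for each user."""
    summary: dict[str, Counter] = defaultdict(Counter)
    for event in events:
        summary[event.user_id][categorize(event.prompt)] += 1
    return dict(summary)


if __name__ == "__main__":
    events = [
        PromptEvent("u1", "Draft a summary of the Q3 report", 42.0, True),
        PromptEvent("u1", "Best pizza near me", 5.0, False),
        PromptEvent("u2", "Share the admin password", 3.0, False),
    ]
    for user, counts in per_user_summary(events).items():
        print(user, dict(counts))
```

The sketch records dwell time and link clicks but does not score them; how such signals would feed a quality rating is left open here.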

There are several reasons why an enterprise might want to track the ways in which its employees are using AI, said Thomas Randall, advisory director at Info-Tech Research Group. Tracking AI usage could help with infrastructure and software improvement, as well as ensuring that model usage “aligns with organizational objectives” and falls within legal compliance, he said.

Monitoring can also help workforces figure out if they’re overutilizing – or underutilizing – the AI at their disposal, he said. While that balance differs between companies and sectors, AI generally shouldn’t be doing workers’ jobs entirely, Randall said.

“If it augments your position so that it gets rid of the drudge work so that you can focus on being more attentive to the delivery of service, that’s going to be the best use of AI,” he said.

But enterprises may walk a fine line with monitoring how employees use these models, Randall said. Given that these models often struggle with data security flaws, understanding who has ownership over what data is important in enterprise AI strategies.

Another major factor is employee consent: “Are they aware that they’re being monitored, and are they empowered to have a say in what kind of data they’re happy with being tracked?” Randall said. “Especially if specific individuals can be called out, versus if it’s aggregated and anonymized to the extent possible.”

Extra Upside

- Chip giant TSMC reported record revenue in 2024 amid the AI boom, bringing in 2.9 trillion New Taiwan dollars, or the equivalent of about $87.6 billion U.S.

- A Dutch technical university backed by chip company ASML suspended classes and shut down its computer network after a cyberattack.

- Hewlett Packard Enterprise won a deal worth $1 billion to provide Elon Musk-owned X with servers for AI work.

CIO Upside is written by Nat Rubio-Licht. You can find them on X @natrubio__.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.