Happy Monday and welcome to CIO Upside.

Today: Enterprises need to think about the ethical implications of their AI deployments, or risk long-term reputational damage, one expert said. Plus: Why the “next Nvidia” may be in software; IBM makes agentic AI follow the rules.

Let’s jump in.

How Enterprises Can Fit Ethics Into Their AI Strategies

Amid the AI frenzy, a critical question is often getting lost: What are the consequences of building and using these models?

The ethical implications of AI often fall by the wayside in favor of faster and more efficient deployment, especially as competition in the space remains high, said Brian Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University. But enterprises building ethics into their own AI strategies could help prevent lasting reputational damage, both for themselves and the industry at large, Green said.

“Ultimately, these technologies are world-shaping technologies, and if they’re done wrong, they’re going to turn the world into a bad place,” said Green.

So what does it mean to use AI ethically? The main consideration lies with figuring out where AI fits into your organization, said Green: Who is using it, what it’s used for, who is benefiting from it and who is potentially harmed by it.

- “They should make sure that the AI that they’re making or using is producing the effects that they actually wanted,” Green said.

- There are many intersections of risk to consider, such as data privacy, model biases from training, copyright issues, environmental considerations or how the AI is put to use.

- When introducing new AI, enterprises can perform cultural assessments to ensure that everyone in their business is on the same page about AI and its risks, said Green. But while ethics should be considered by everyone within an enterprise, “the tone is set at the top,” he said.

“The leadership has to decide whether they’re actually going to take ethics seriously,” said Green. “If the leadership is not on board, everything is going to look like a cost instead of a benefit.”

The last step is implementation, he said. That involves moving past the “theoretical phase” and putting practical AI monitoring and guardrails in place for privacy, safety and accountability. “It’s one thing to have a set of principles … it’s a completely different thing to actually implement those in a company and measure them and make sure that you’re making progress on them.”

Building ethics into enterprise AI strategies could help companies steer clear of incidents that inflict long-term damage on their reputations, said Green. The fallout from such problems can stretch beyond the enterprise responsible, affecting the industry as a whole.

“If you are making mistakes in the deployment of AI technology, it is going to damage your reputation by making you look untrustworthy, incompetent or uncaring,” he said. And depending on the magnitude of harm, “it’s not something that can be recovered from very easily.”

But in a market as hot as AI is right now, the downsides tend to get lost. The more competition there is, the less people think about ethics, said Green. They tend to focus on the short-term payout rather than the long-term impacts.

A lot of tech giants shifted their focus away from ethics and responsibility when the AI boom began, with the likes of Meta, OpenAI and Microsoft cutting responsible AI staff in recent years. And with the Trump administration slicing the already-feeble government oversight that was in place, the onus of responsibility and ethics lies on the users themselves.

For tech giants, ignoring ethics “hasn’t affected their money enough” so far, Green said. “This is an experiment that’s run over time. Over a certain period of time, people will start realizing that the companies that are protecting their reputations better are getting more business.”

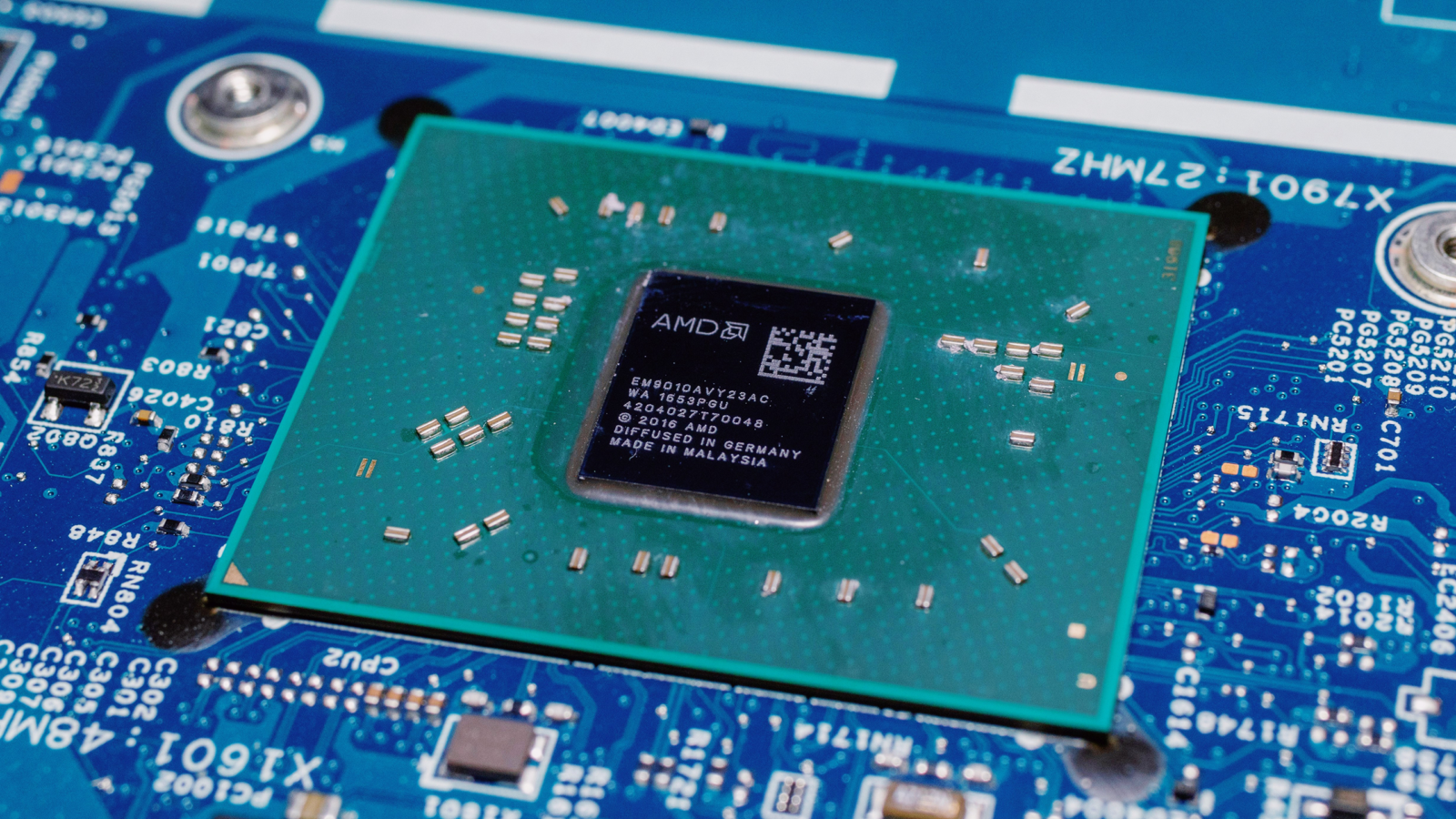

Investors Are Growing Cautious of the AI Hardware Market

The AI market has largely been on the rise for the past few years, but investors may be getting more cautious about where the chips fall — literally.

Chipmakers AMD and Arm saw their shares slide last week on disappointing earnings outlooks. Though AMD said its growth will be in the “strong” double digits, that wasn’t enough to impress investors. Arm, meanwhile, predicts that revenue will be $1.18 billion to $1.28 billion in the next quarter, coming in below Wall Street estimates.

Though AMD has struggled to shine with Nvidia’s performance soaking up the AI chip spotlight, Arm’s outlook could serve as a better “bellwether” for the industry at large, said Romeo Alvarez, director and research analyst at William O’Neil. The U.S.-China trade war and export ban could also “put a ceiling” on expectations, he said.

“Companies are definitely being more cautious,” said Alvarez. “Nvidia may even get caught in the crossfire … it’s reasonable to tone down your expectations.”

But broadly, these forecasts may signal a shift in the market similar to what took place in the early days of the internet: Investor interest is shifting from innovations in hardware to those in software, said Alvarez. Similar to Cisco being one of the “early winners” of the internet, AI hardware companies “allowed for the technology to become mainstream.”

And though hardware providers like Nvidia were the “clear winner” of the AI market’s early days, only so much more innovation can happen on the hardware side. Even large language models are “turning into a commodity,” said Alvarez.

So what kind of companies are the industry’s next big thing? That’d be those monetizing AI in a way that makes sense for enterprises, such as AI agent applications for departments like accounting, HR, cybersecurity and coding, Alvarez said.

- “If you can automate things with your platform, companies are going to be all over it — if you can do it faster and at a cheaper price,” Alvarez said.

- Firms like Palantir and Cloudflare — which both saw stock surges after recent earnings reports — could signal this shift.

“The next Nvidia isn’t going to come from the semiconductor side,” Alvarez noted. “It’s probably going to come from the software side, as companies figure out ways to develop applications. The AI cycle will continue, but the winners may change from the hardware side to the software side.”

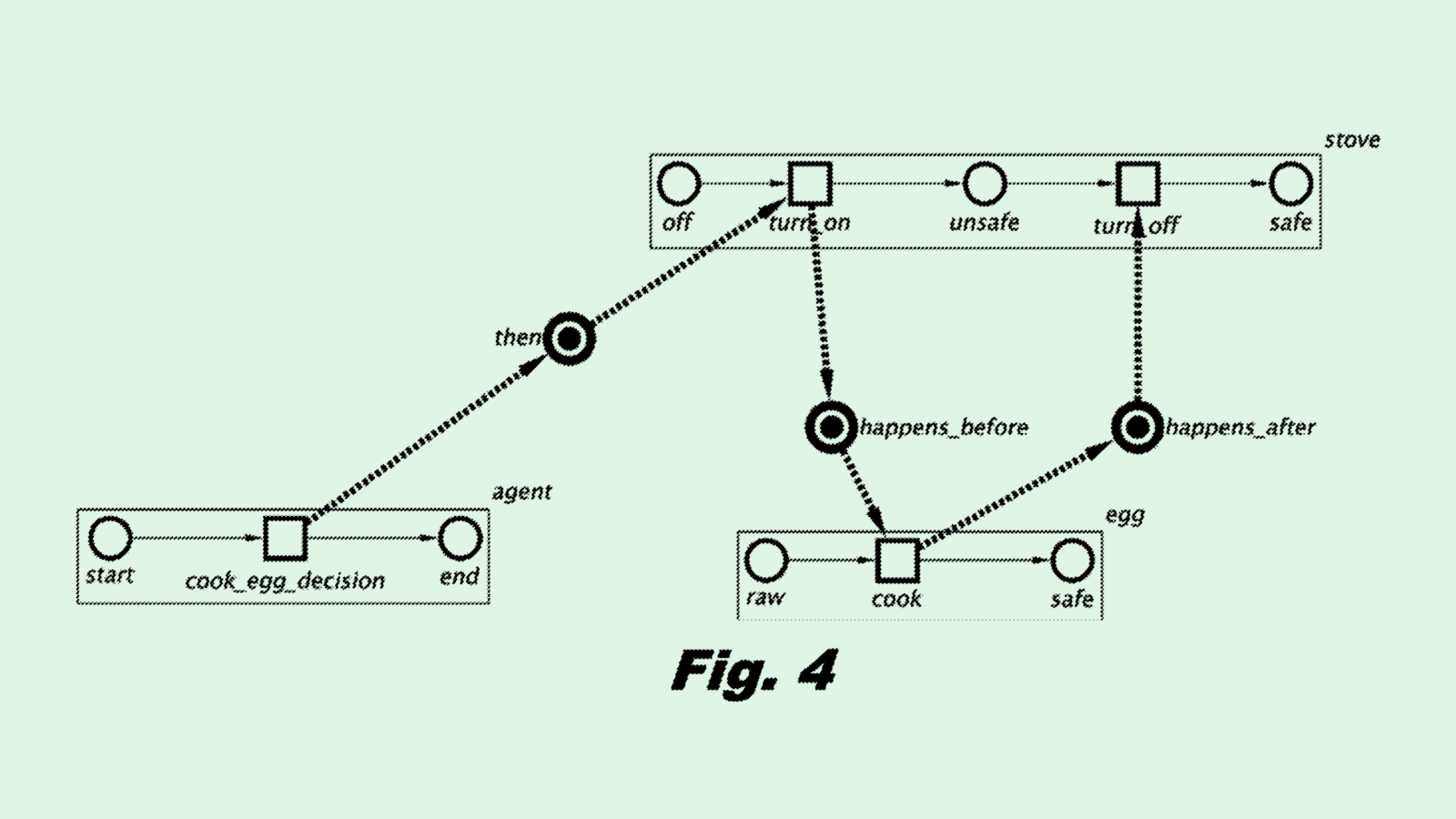

IBM Patent Adds Guardrails for Agentic AI

How can you make sure your AI assistants don’t go off the rails?

IBM might have something for that: The tech firm is seeking to patent a system for “constrained policy optimization for safe reinforcement learning.” This tech essentially implements rules into language models to keep them from picking up risky behaviors during reinforcement learning.

For reference, reinforcement learning is a training technique that essentially teaches a model to function in an environment through reward and penalization, with the goal of a reinforcement learning agent being to “maximize a cumulative reward over time.” What IBM’s tech does is extract “safety hints” from a model’s behavior that indicate potential risks, and use those to assign a cost to such actions.

“If the reward signal is not properly designed, the agent may learn unintended or even potentially dangerous behavior,” IBM said in the filing.
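To make the reward-and-cost idea concrete, here is a minimal Python sketch. It is not IBM's method: it only illustrates the general technique of subtracting a weighted safety cost from the task reward before summing the cumulative return. The names `penalized_return`, `pick_trajectory`, `penalty_weight` and the toy numbers are all assumptions made up for illustration.

```python
from typing import Dict, List, Tuple

# One step of agent behavior: (task reward, safety cost).
# The cost stands in for a hypothetical "safety hint"; values are invented.
Step = Tuple[float, float]


def penalized_return(steps: List[Step], penalty_weight: float,
                     discount: float = 1.0) -> float:
    """Cumulative reward over time, with each step's cost subtracted."""
    total = 0.0
    for t, (reward, cost) in enumerate(steps):
        total += (discount ** t) * (reward - penalty_weight * cost)
    return total


def pick_trajectory(candidates: Dict[str, List[Step]],
                    penalty_weight: float) -> str:
    """Return the name of the candidate with the highest penalized return."""
    return max(candidates,
               key=lambda name: penalized_return(candidates[name], penalty_weight))


# Toy data (assumed): the risky behavior earns more reward but carries a cost.
CANDIDATES = {
    "cautious": [(1.0, 0.0), (1.0, 0.0), (1.0, 0.0)],
    "risky": [(2.0, 1.0), (2.0, 1.0), (2.0, 1.0)],
}

if __name__ == "__main__":
    for weight in (0.0, 2.0):
        print(weight, pick_trajectory(CANDIDATES, weight))
```

With no penalty, the reward-only objective favors the risky behavior. Once the cost is weighted heavily enough, the cautious behavior scores higher. A production system would learn or adapt that weight rather than hard-code it.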

IBM’s system is dynamic, meaning that it adjusts over time to better understand the needs and constraints of the environment that it’s in. The tech is also specifically used for text models, and could be helpful for keeping chatbots or automated AI assistants in check.

This filing comes at a time when agentic AI is all the rage. Beyond call-and-response chatbots, enterprises are seeking ways to get more from their AI. Autonomous agents with the ability to freely handle tasks seem to be the solution.

Interest from Big Tech could signal that this trend is more than just a buzzword: Nvidia, Microsoft, Google and Salesforce have all staked their claim in agentic AI with new enterprise products. IBM has released its own agentic tooling, including a system to “supervise” how AI agents get a task done.

But even if this new wave of AI is capable of handling more, it still comes with the same challenges that any AI program has, including data security, hallucination, and a tendency to slip into biases. Tech that keeps these agents in line, as IBM proposes in its patent application, could become increasingly important as enterprises continue to adopt them.

Extra Upside

- Job Jump: Employers added 228,000 technology jobs during January. Despite this, unemployment among IT positions also rose.

- Defense Tech Boom: Anduril is in talks to raise up to $2.5 billion at a valuation of $28 billion.

- OpenAI Overseas: OpenAI is planning to open an office in Munich, Germany.

CIO Upside is written by Nat Rubio-Licht. You can find them on X @natrubio__.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.