Happy Thursday and welcome to CIO Upside.

Today: The recent proliferation of deep research agents is like “search on steroids,” one expert told CIO Upside. But risks still exist. Plus: Security lessons from Oracle’s recent breaches; and OpenAI seeks a patent to make AI agent building as easy as prompting.

But first, a quick programming note: Monday’s newsletter will feature a Q&A with Dataiku’s Head of AI Strategy, Kurt Muehmel, discussing how to make AI agents work for your enterprise.

Let’s take a peek.

What You Need to Know About Deep Research Agents

What would you do with a deep research agent?

AI heavyweights have begun prioritizing the development of agents that go above and beyond the typical chatbot to provide in-depth, complex responses to queries. And with improved accuracy, the agents could offer a more reliable assistant for enterprise workers, said Bob Rogers, chief product and technology officer of Oii.ai and co-founder of BeeKeeper AI.

“It’s a credible tool that ups people’s game around not being fooled by the first thing that a (large language model) says, which may or may not be real,” said Rogers.

OpenAI launched its deep research offering in early February. The tool carries out complex, “multi-step research” and was trained using reinforcement learning, a type of AI training that employs rewards and penalties. The company claimed in its announcement that, when prompted, the model “accomplishes in tens of minutes what would take a human many hours.”

Google DeepMind launched its own deep research offering earlier, in December. Perplexity debuted one in mid-February, and Anthropic is now reportedly working on a deep research feature called Compass.

Among the factors separating deep research products from traditional large language models, Rogers said, is their ability to sift iteratively through several layers of research to retrieve, check, refine and synthesize information, reducing the probability of hallucination.

- Using reinforcement learning for training also may give these models “a material lead in the accuracy and completeness of the process,” he said.

- If you ask a large language model how to put together a cybersecurity strategy, for example, it may say “an appropriate backup strategy is necessary” but not provide the further details that a deep research agent would, Rogers said.

“Classic large language models, they talk a good game, but they don’t know anything,” said Rogers. Deep research models, meanwhile, are “kind of like search on steroids.”
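To make the iterative “retrieve, check, refine and synthesize” loop Rogers describes more concrete, here is a minimal Python sketch. It is not how OpenAI, Google or Perplexity build their products: the toy corpus, the function names (`retrieve`, `check`, `refine`, `deep_research`) and the rule that a claim needs at least two independent sources are all illustrative assumptions. Real agents would use search engines and language models at each step.

```python
from collections import defaultdict

# Hypothetical toy corpus: (source, claim) pairs standing in for search results.
CORPUS = [
    ("vendor-blog", "backups should be immutable"),
    ("standards-body", "backups should be immutable"),
    ("forum-post", "backups are optional"),
    ("standards-body", "immutable backups need restore drills"),
    ("audit-report", "immutable backups need restore drills"),
    ("audit-report", "restore drills should run quarterly"),
]


def retrieve(terms, corpus, seen):
    """Return indices of not-yet-seen rows whose claim shares a word with the query terms."""
    return [i for i, (_, claim) in enumerate(corpus)
            if i not in seen and terms & set(claim.split())]


def check(rows, corpus, min_sources=2):
    """Keep only claims backed by at least `min_sources` distinct sources."""
    support = defaultdict(set)
    for i in rows:
        source, claim = corpus[i]
        support[claim].add(source)
    return {claim for claim, sources in support.items() if len(sources) >= min_sources}


def refine(terms, verified):
    """Expand the query with words taken from claims that passed the check."""
    expanded = set(terms)
    for claim in verified:
        expanded |= set(claim.split())
    return expanded


def deep_research(query, corpus, max_rounds=5):
    """Iterate retrieve -> check -> refine, then 'synthesize' the corroborated claims."""
    terms = set(query.lower().split())
    seen = set()
    verified = set()
    for _ in range(max_rounds):
        new_rows = retrieve(terms, corpus, seen)
        if not new_rows:  # nothing new to read: stop iterating
            break
        seen.update(new_rows)
        verified = check(seen, corpus)  # re-check everything gathered so far
        terms = refine(terms, verified)
    return sorted(verified)  # synthesis here is just the sorted list of corroborated claims
```

Calling `deep_research("backups", CORPUS)` keeps the two claims that each have two sources and drops the single-source “backups are optional.” That cross-checking step is the toy analogue of how layered research lowers, but does not eliminate, the chance of passing along a hallucination.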

There are many ways an enterprise could put these agents to work, Rogers said. Along with providing clear benefits for people in deeply technical roles, these models can save time when drafting policies and guidelines on issues such as cybersecurity and regulatory compliance.

There also may be an opportunity for a “hybrid” of deep research and traditional language model capabilities for customer service, said Rogers, since typical customer service bots “can hallucinate and are never going deep into solving a problem.”

Even though these agents go beyond your average chatbot, they still face some of the same problems that plague less advanced AI. For one, while these models have a lower chance of hallucination, it’s not zero, said Rogers, and any outputs should still be double-checked.

The models also come with the same security risks that any AI system has, said Rogers. “I would still be very hesitant about putting sensitive data into one of these systems.”

Enterprise Security Lessons from Oracle’s Reported Breaches

It’s been a rough few weeks for Oracle.

Not only is the FBI reportedly investigating the theft of patient data from legacy servers at Oracle’s healthcare arm, but security researchers have presented evidence that hackers exploited a vulnerability allowing access to Oracle Cloud infrastructure, potentially impacting approximately 6 million records from over 140,000 tenants. Oracle has denied the Cloud-related claims.

Security breaches are often linked to four main attack vectors: legacy infrastructure, credential attacks, social engineering such as phishing, and forgotten software bugs, said Sharon Auma-Ebanyat, research director at Info-Tech Research Group. “What a lot of hackers are looking for are the places people are not paying attention to,” said Auma-Ebanyat.

“Organizations need to prioritize cybersecurity at a much higher level than they’ve normally done,” she added.

The alleged breaches highlight the risk presented by a lack of visibility on third-party software and technologies, said Joe Silva, co-founder and CEO of cybersecurity firm Spektion. By its nature, third-party software is entirely external, said Silva, meaning there’s little visibility into the underlying risk and vulnerabilities that a software provider may face, and few security controls that an enterprise can apply.

“Enterprises need to take a harder look at the amount of risk and volatility that they’re carrying associated with third-party providers that are handling critical data and critical services,” said Silva.

The best way for organizations to handle the issue when using third-party software is to know what they’re in for if something goes awry, said Silva.

- Having a “tolerance for ambiguity” when you’re relying on a third-party provider can allow your business to account for vulnerabilities before they become problems, he added. “Knowing that you’re not going to have good visibility into underlying security issues – you have to understand and have an organizational risk tolerance for that.”

- Software providers, meanwhile, should be held to a higher standard for continuous monitoring of vulnerabilities, said Silva. This is especially true if these providers are handling business-critical operations that, “if exploited, would have a huge blast radius,” he said.

“Enterprises need to demand the same type of continuous monitoring and runtime visibility into risk that they would have on critical data and services within their sphere of control,” Silva added.

As Agentic AI Sweeps Market, OpenAI Seeks Patent For Easy Model Builder

The concept of autonomous agents has swept the enterprise AI market, and OpenAI may be trying to lock down some IP while development is still in early stages.

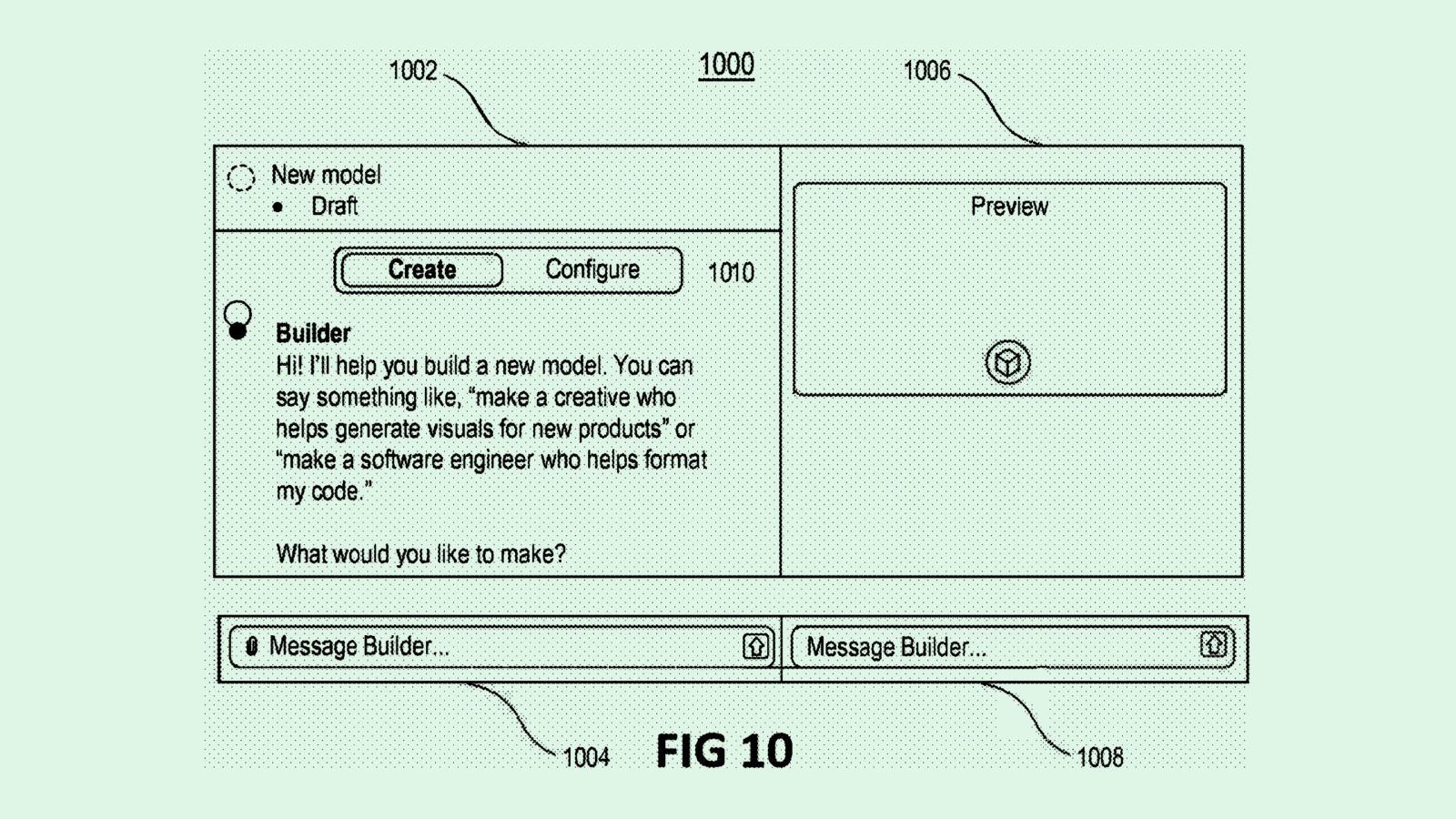

The company is seeking to patent a system for “generative customized AI models” that includes building, evaluating and deploying task-specific AI agents that users can customize.

Users who want the system to build a custom agent would submit a query with specifications, including a “knowledge base” – such as relevant documents or data – and any capabilities they want.

OpenAI’s tech would then use the information to fine-tune a “base model” and generate a custom interface through which a user can interact with it. Before deployment, the system would evaluate the agent to ensure it complies with content policies and security standards, and block deployment if any red flags appear.
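The build, evaluate and gate flow described in the filing can be sketched in a few lines of Python. This is a hypothetical illustration, not OpenAI’s implementation or API: `AgentSpec`, `build_agent`, `evaluate`, `deploy` and the blocked-capability and blocked-term lists are invented for the example, and the “fine-tuning” step is a stub that only records which base model would be used.

```python
from dataclasses import dataclass

# Hypothetical policy lists standing in for content-policy and security checks.
BLOCKED_CAPABILITIES = {"exfiltrate_data", "disable_logging"}
BLOCKED_TERMS = {"password", "ssn"}


@dataclass
class AgentSpec:
    """Hypothetical user request: a knowledge base plus the capabilities wanted."""
    name: str
    knowledge_base: list[str]
    capabilities: list[str]


@dataclass
class CustomAgent:
    name: str
    base_model: str
    knowledge_base: list[str]
    capabilities: list[str]


def build_agent(spec, base_model="base-model"):
    """Stub for fine-tuning: bundles the spec with a (hypothetical) base model name."""
    return CustomAgent(spec.name, base_model, list(spec.knowledge_base), list(spec.capabilities))


def evaluate(agent):
    """Return red flags found in the agent's capabilities and knowledge base."""
    flags = [f"capability:{c}" for c in agent.capabilities if c in BLOCKED_CAPABILITIES]
    for doc in agent.knowledge_base:
        for term in sorted(BLOCKED_TERMS):
            if term in doc.lower():
                flags.append(f"knowledge_base:{term}")
    return flags


def deploy(spec):
    """Build, evaluate, and deploy only if the evaluation raised no red flags."""
    agent = build_agent(spec)
    flags = evaluate(agent)
    if flags:
        return {"deployed": False, "red_flags": flags}
    return {"deployed": True, "agent": agent.name}
```

The key design point mirrored from the filing is that evaluation sits between building and deployment, so a flagged agent never reaches users. A real system would rely on model-based policy and security evaluations rather than keyword lists.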

The goal is “to make the model faster, more efficient, and use less computational resources than a standard or generic model,” OpenAI said in the filing.

As it stands, OpenAI’s patent history is rather sparse, especially in comparison with competitors such as Google, Microsoft or Amazon in the heated AI race. This patent application in particular could signal that the company is paying close attention to the emergence of agentic AI – and its flaws.

Giving AI more autonomy, which can streamline workflows and cut down on busywork, also opens your enterprise up to more security, governance and accuracy risks. By running evaluations on these models before their deployment, OpenAI’s system could mitigate some of the risks. Plus, allowing users to build agents seamlessly might lower barriers to adoption for less technically inclined enterprises.

Extra Upside

- AI University: Anthropic is launching a chatbot plan for colleges and universities, called Claude for Education.

- Tesla Tumble: Tesla reported a 13% year-on-year drop in deliveries for the first quarter.

- What Do Deep Tech Founders, AI Engineers, And Silicon Valley Execs Have In Common? They rely on Semafor Tech, Reed Albergotti’s twice-weekly newsletter, for exclusive scoops, analysis, and insights into the ideas shaping the next decade of tech breakthroughs. Join 60,000 other tech professionals — subscribe for free.*

* Partner

CIO Upside is written by Nat Rubio-Licht. You can find them on X @natrubio__.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.