What You Need to Know About Deep Research Agents

They have the capabilities of “search on steroids,” but risks still exist.

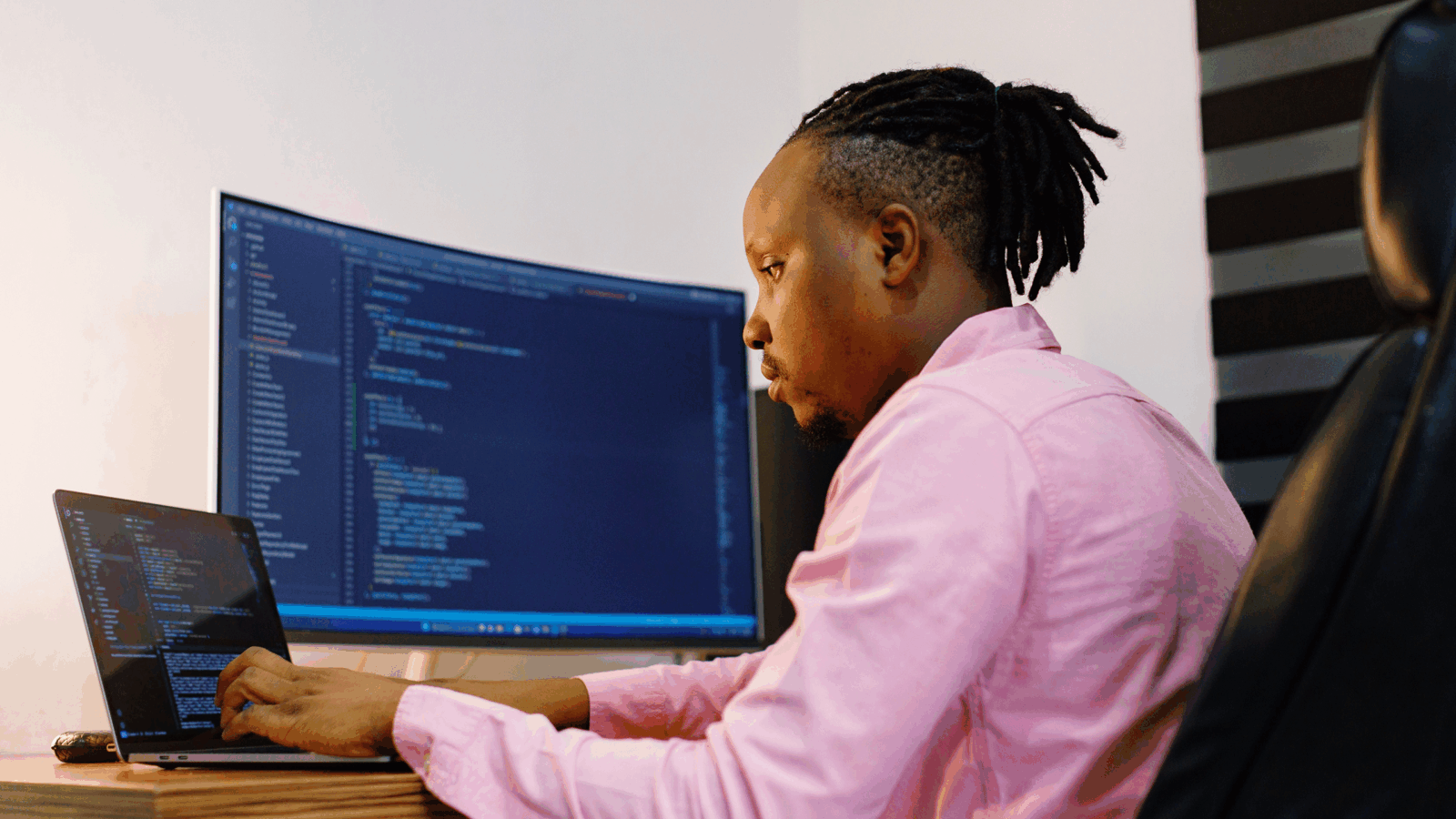

What would you do with a deep research agent?

AI heavyweights have begun prioritizing the development of agents that go above and beyond the typical chatbot to provide in-depth, complex responses to queries. And with improved accuracy, the agents could offer a more reliable assistant for enterprise workers, said Bob Rogers, chief product and technology officer of Oii.ai and co-founder of BeeKeeper AI.

“It’s a credible tool that ups people’s game around not being fooled by the first thing that a (large language model) says, which may or may not be real,” said Rogers.

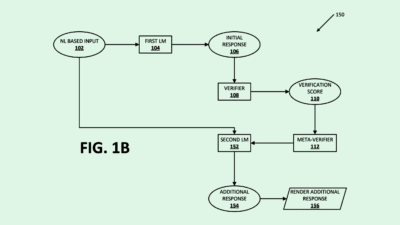

OpenAI came out of the gate in early February with its deep research offering, which is capable of complex, “multi-step research” using reinforcement learning, a type of AI training that employs rewards and penalties. The company claimed in its announcement that, when prompted, the model “accomplishes in tens of minutes what would take a human many hours.”

Google DeepMind launched its deep research offering in December, Perplexity debuted one in mid-February and Anthropic is now reportedly working on a deep research feature called Compass.

Among the factors separating deep research products from traditional large language models, Rogers said, is their ability to sift iteratively through several layers of research to retrieve, check, refine and synthesize information, reducing the probability of hallucination.

- Using reinforcement learning for training also may give these models “a material lead in the accuracy and completeness of the process,” he said.

- If you ask a large language model about how to put together a cybersecurity strategy, it may say “an appropriate backup strategy is necessary,” for example, but not provide further details that a deep research agent would offer, Rogers said.
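The iterative loop Rogers describes (retrieve, check, refine, synthesize) can be illustrated with a small toy sketch. This is not how any vendor's product works internally; the in-memory `CORPUS`, the function names and the "at least two independent sources" rule are all assumptions made up for illustration.

```python
# Hypothetical toy corpus standing in for search results: topic -> (source, claim) pairs.
CORPUS = {
    "backup strategy": [
        ("site-a", "Keep three copies of data"),
        ("site-b", "Keep three copies of data"),
        ("site-c", "Backups are optional"),
    ],
    "three copies of data": [
        ("site-d", "Store one copy offsite"),
        ("site-e", "Store one copy offsite"),
    ],
}


def retrieve(query):
    """Return (source, claim) pairs for every topic mentioned in the query."""
    text = query.lower()
    return [hit for topic, hits in CORPUS.items() if topic in text for hit in hits]


def check(hits, min_sources=2):
    """Keep only claims backed by at least min_sources distinct sources (assumed rule)."""
    sources_by_claim = {}
    for source, claim in hits:
        sources_by_claim.setdefault(claim, set()).add(source)
    return {c: s for c, s in sources_by_claim.items() if len(s) >= min_sources}


def refine(claims, asked):
    """Turn verified claims into follow-up queries that have not been asked yet."""
    return [claim for claim in claims if claim not in asked]


def deep_research(question, max_rounds=3):
    """Run several retrieve/check/refine rounds, then synthesize a cited summary."""
    verified, asked, queue = {}, set(), [question]
    for _ in range(max_rounds):
        next_queue = []
        for query in queue:
            if query in asked:
                continue
            asked.add(query)
            claims = check(retrieve(query))
            verified.update(claims)
            next_queue.extend(refine(claims, asked))
        queue = next_queue
    # Synthesize: one line per verified claim, with its supporting sources.
    return [f"{c} (sources: {', '.join(sorted(s))})" for c, s in verified.items()]


if __name__ == "__main__":
    for line in deep_research("What is a good backup strategy?"):
        print(line)
```

In this sketch, the single-source claim is dropped at the check step, while the corroborated claim becomes a follow-up query that surfaces a further detail. A one-shot answer would stop at the first layer.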

“Classic large language models, they talk a good game, but they don’t know anything,” said Rogers. Deep research models, meanwhile, “they’re kind of like search on steroids.”

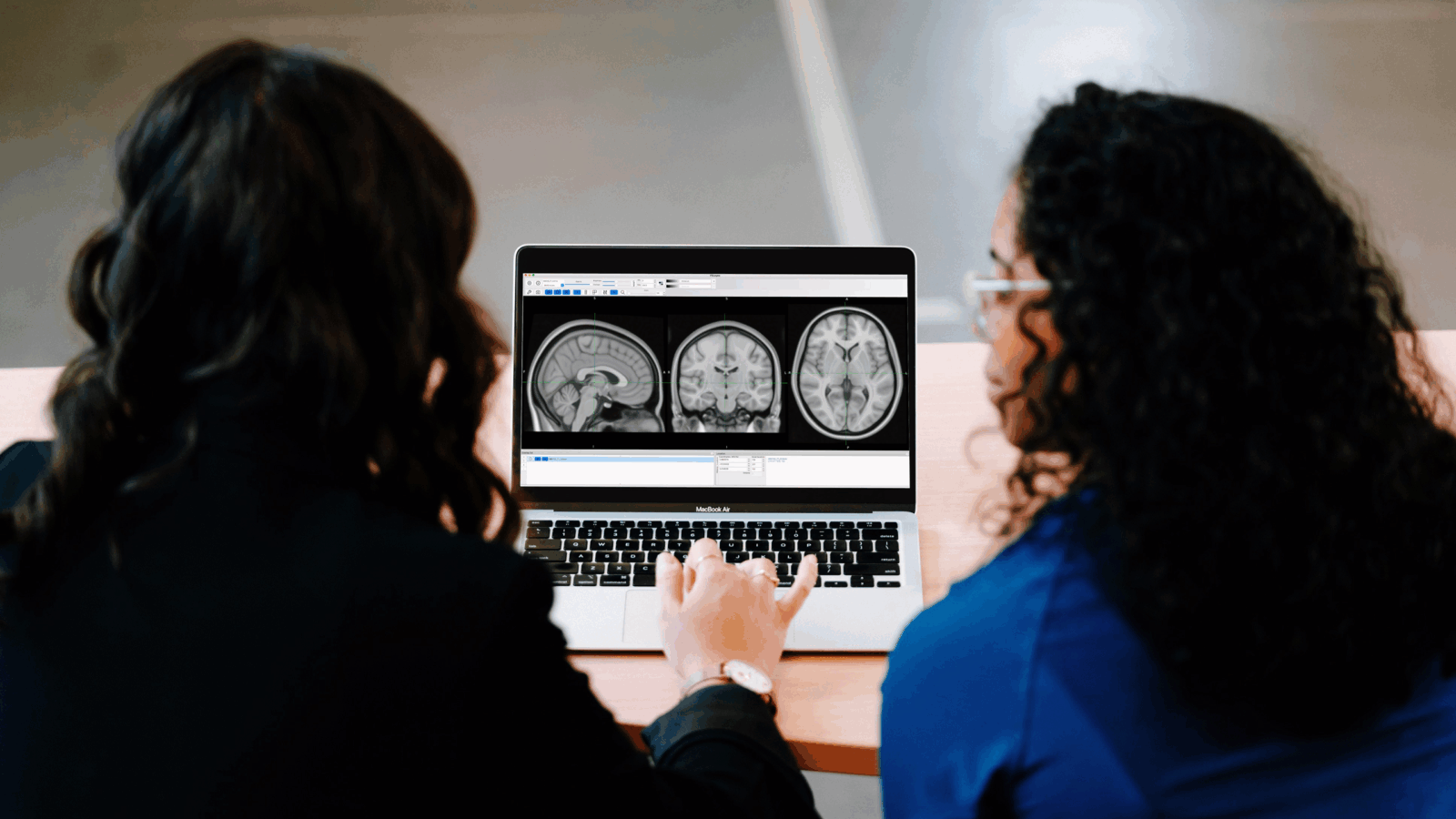

And there are many ways an enterprise could put these agents to work, Rogers said. Along with providing clear benefits for people in deeply technical roles, these models can save time in drafting policies and guidelines for issues such as cybersecurity and regulatory compliance.

There also may be an opportunity for a “hybrid” of deep research and traditional language model capabilities for customer service, said Rogers, since typical customer service bots “can hallucinate and are never going deep into solving a problem.”

Even though these agents go above and beyond your average chatbot, they still face some of the same problems that plague less advanced AI. For one, while these models have a lower chance of hallucination, it’s not zero, said Rogers, and any outputs should still be double-checked.

The models also come with the same security risks that any AI system has, said Rogers. “I would still be very hesitant about putting sensitive data into one of these systems.”