How to Keep Your Workforce from Overusing AI

The first step is figuring out where AI isn’t necessary.


Overreliance on AI can lead to far more problems than simply making your workforce less industrious.

AI can be addictive, and as enterprises adopt more AI tools and integrate agentic capabilities, the question of where to draw the line is on the minds of many CIOs. Without a clear AI policy and proper workforce education, employees may turn to shadow AI, meaning AI tools their employer hasn’t sanctioned, exposing the organization to security risks and other liabilities.

“Using AI tools on a regular basis, it can be habit-forming,” said Zac Engler, CEO of AI consultancy Bodhi AI. “There definitely is a danger of over-dependence on these systems.”

Though AI can do a lot for us, it still carries data security and hallucination risks when used improperly. And whether or not you’re aware of it, your employees are probably already using AI.

- A study of 6,000 knowledge workers by enterprise software company Software AG found that 75% use AI, and around half are engaging in shadow AI activity. Around 46% said they’d continue to use AI even if their organizations banned it outright.

- Researchers from Carnegie Mellon University and Microsoft found that overusing AI could erode workers’ critical thinking.

Avoiding the pitfalls of overreliance and shadow AI is all about having a clear-cut strategy, said Engler. Teaching employees when they should and shouldn’t use AI becomes critical as more of these tools are adopted. “We’re essentially shepherds of the machine,” he said. “No shepherd should ever let the sheep decide where to go.”

“The real risk isn’t necessarily the use of AI, it’s the overreliance without a strategy,” Engler added. “Overreliance tends to happen in organizations when they treat AI as the decision-maker rather than a decision-support tool.”

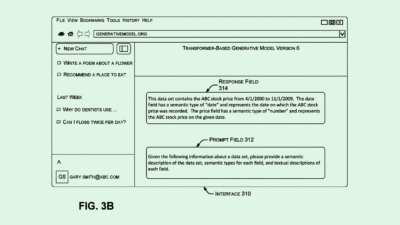

So how can you find that middle ground? The first step is figuring out where AI isn’t necessary, said Engler. Separate the places where humans should remain in the loop, such as critical decision points, from the tedious, low-stakes tasks that don’t need a human hand. If you’re worried about hallucination, asking your AI systems for “confidence thresholds,” or how confident the model is in its work, could help mitigate accuracy issues, he said.
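For teams building internal tooling, the human-in-the-loop split and the confidence check could be combined into a simple routing rule. The sketch below is illustrative only: it assumes the AI system returns a self-reported confidence score between 0 and 1, and the names `AIResult`, `route`, and the 0.8 threshold are hypothetical choices, not a standard. Keep in mind that a model’s self-reported confidence is not guaranteed to be well calibrated, so the threshold is a backstop rather than a guarantee of accuracy.

```python
from dataclasses import dataclass

# Hypothetical cutoff; each organization would tune its own.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class AIResult:
    task: str
    output: str
    confidence: float  # assumed: model's self-reported confidence, 0.0 to 1.0
    critical: bool     # True if the task is a critical decision point


def route(result: AIResult, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return 'human_review' or 'auto_approve' for a single AI result."""
    if not 0.0 <= result.confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    # Critical decisions always keep a human in the loop, whatever the score.
    if result.critical:
        return "human_review"
    # Low-stakes work that falls below the threshold is also sent to a person.
    if result.confidence < threshold:
        return "human_review"
    return "auto_approve"


if __name__ == "__main__":
    examples = [
        AIResult("Summarize meeting notes", "...", 0.93, critical=False),
        AIResult("Draft a customer FAQ answer", "...", 0.55, critical=False),
        AIResult("Approve vendor contract", "...", 0.97, critical=True),
    ]
    for item in examples:
        print(f"{item.task}: {route(item)}")
```

Here the meeting summary would be approved automatically, while the low-confidence FAQ draft and the contract decision would both go to a person. Checking the critical flag before the score reflects Engler’s point that AI should support, not make, high-stakes decisions.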

“The sooner you can figure out how to ride that wave of this continually improving and continually evolving system, the sooner you’re going to be able to succeed,” said Engler.